Understanding the Challenges with Adam in Deep Learning

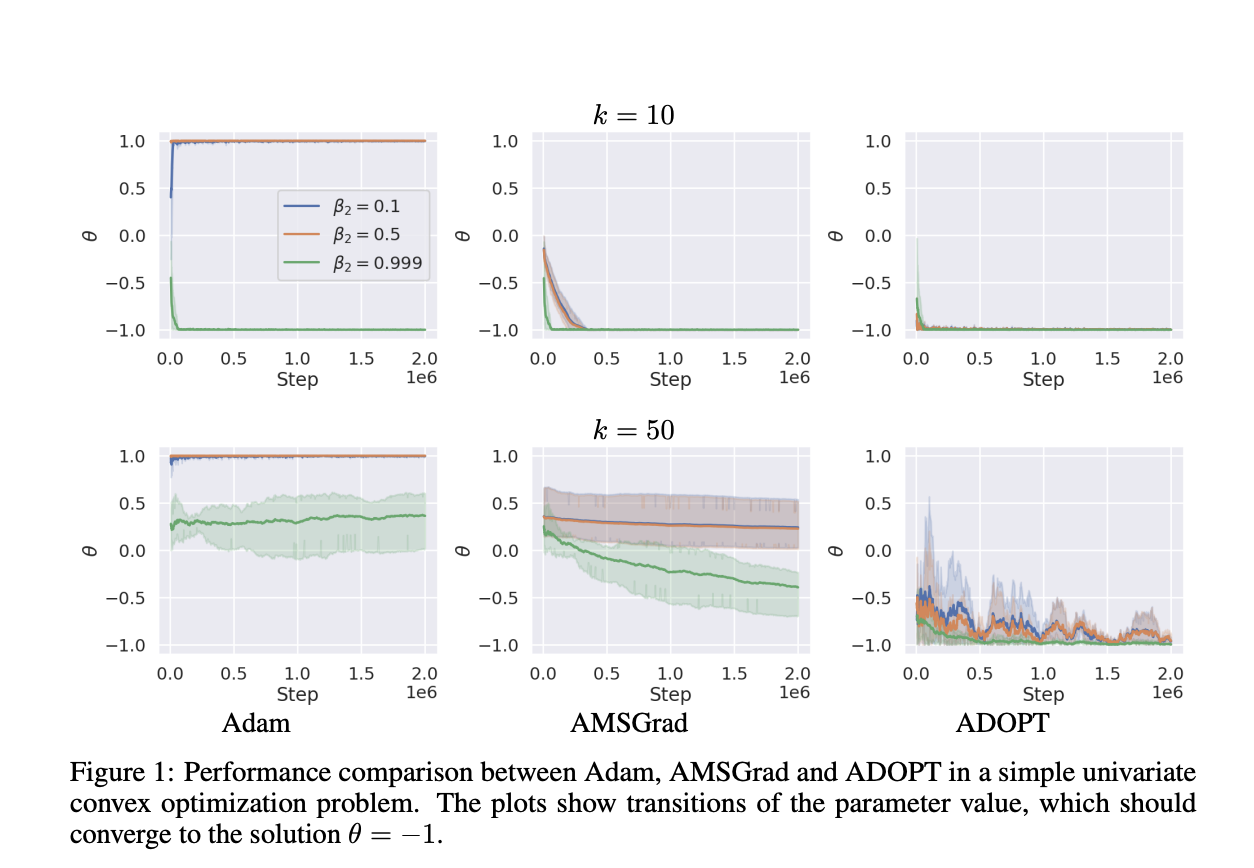

Adam is one of the most widely used optimizers in deep learning, but in theory it is not guaranteed to converge unless the hyperparameter β2 is chosen in a problem-dependent way. Proposed fixes such as AMSGrad rely on the assumption that gradient noise is uniformly bounded, which is often unrealistic in practice. Other solutions, such as AdaShift, only address convergence in limited situations. Recent findings suggest that tuning β2 for each task can help, but this approach is complex and not universally applicable.
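For reference, here is a minimal sketch of a standard Adam step for a single scalar parameter, to show where β2 enters. The function name `adam_step` and the default values are illustrative choices, not taken from the article.

```python
import math


def adam_step(theta, grad, m, v, t, lr=1e-3, beta1=0.9, beta2=0.999, eps=1e-8):
    """One Adam update for a single scalar parameter; t is the 1-based step count."""
    # First moment: exponential moving average of the raw gradient.
    m = beta1 * m + (1.0 - beta1) * grad
    # Second moment: beta2 controls how quickly old squared gradients are forgotten.
    # Note that the current gradient is folded into v before v is used to normalize.
    v = beta2 * v + (1.0 - beta2) * grad * grad
    # Bias correction for the zero-initialized moving averages.
    m_hat = m / (1.0 - beta1 ** t)
    v_hat = v / (1.0 - beta2 ** t)
    theta = theta - lr * m_hat / (math.sqrt(v_hat) + eps)
    return theta, m, v
```

Because `v` already contains the current gradient when it is used as the normalizer, the step direction and its scaling are correlated, which is the part of the update that the choice of β2 has to compensate for.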

Introducing ADOPT: A New Solution

Researchers from The University of Tokyo developed ADOPT, a new adaptive gradient method that achieves the optimal convergence rate of O(1/√T) for any choice of β2, without relying on the bounded-noise assumption. ADOPT modifies Adam in two ways:

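- Excluding the current gradient from the second moment estimate, so the gradient is normalized by an estimate built only from past gradients.

- Changing the order of the momentum update and the normalization, so the gradient is normalized first and momentum is applied afterwards.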
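The two changes can be sketched in a few lines of plain Python. This is a minimal illustration under stated assumptions, not the authors' reference implementation: the helper names `adopt_init` and `adopt_step`, the initialization of the second moment from the first gradient, and the default values of `beta2` and `eps` are all assumptions made for this example.

```python
import math


def adopt_init(first_grad):
    """Initial state: m = 0 and v = g_0^2 (initialization assumed for this sketch)."""
    return {"m": [0.0 for _ in first_grad], "v": [g * g for g in first_grad]}


def adopt_step(theta, grad, state, lr=1e-3, beta1=0.9, beta2=0.9999, eps=1e-6):
    """One ADOPT-style update on a list of floats; returns the new parameters."""
    new_theta = []
    for i, (p, g) in enumerate(zip(theta, grad)):
        # 1) Normalize with the PREVIOUS second moment, which has not seen g yet.
        normalized = g / max(math.sqrt(state["v"][i]), eps)
        # 2) Apply momentum AFTER normalization (Adam normalizes after momentum).
        state["m"][i] = beta1 * state["m"][i] + (1.0 - beta1) * normalized
        new_theta.append(p - lr * state["m"][i])
        # 3) Only now fold the current gradient into the second moment.
        state["v"][i] = beta2 * state["v"][i] + (1.0 - beta2) * g * g
    return new_theta
```

As a quick usage check, minimizing f(θ) = ½‖θ‖² (whose gradient is θ itself) from `theta = [1.0, -2.0]` with `state = adopt_init(theta)` and repeated calls to `adopt_step(theta, theta, state, lr=0.01)` drives the parameters toward zero.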

In the reported experiments, ADOPT outperforms Adam and its variants across a range of tasks, including image classification, generative modeling, natural language processing, and deep reinforcement learning.

Key Advantages of ADOPT

- Reliable Convergence: Converges even when gradient noise is large.

- Faster Performance: Achieves quicker and more stable convergence than Adam in the reported experiments.

- Broad Applicability: Excels in diverse applications, from image classification to language model fine-tuning.

How ADOPT Works

The study considers the problem of minimizing an objective function with first-order stochastic optimization. At each step the optimizer does not see the exact gradient, only a noisy estimate of it called the stochastic gradient. Because the objective may be nonconvex, the goal is to reach a stationary point, where the gradient is zero, rather than a global minimum. Unlike earlier fixes such as AMSGrad, ADOPT's convergence guarantee does not require the gradient noise to be uniformly bounded, which makes the result applicable to a wider range of problems.
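One common way to write this setting is given here, with notation chosen for illustration rather than copied from the paper: θ denotes the parameters, f the objective, g_t the stochastic gradient at step t, and ξ_t the zero-mean gradient noise.

$$
\min_{\theta \in \mathbb{R}^d} f(\theta), \qquad
g_t = \nabla f(\theta_{t-1}) + \xi_t, \qquad
\mathbb{E}\left[\xi_t \mid \theta_{t-1}\right] = 0
$$

A stationary point θ* satisfies ∇f(θ*) = 0. A convergence rate of O(1/√T) then means that a suitable measure of the gradient norm along the iterates shrinks at that rate as the number of steps T grows.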

Real-World Impact and Future Research

ADOPT has been evaluated on toy problems as well as standard benchmarks such as MNIST and CIFAR-10, where it is reported to outperform Adam. It also performs well in larger-scale settings, including ImageNet classification with a Swin Transformer and generative modeling with NVAE.

This research bridges the gap between theory and practical application in adaptive optimization. Future studies may explore further generalizations of ADOPT to enhance its effectiveness across different contexts.

Stay Connected

Check out the Paper and GitHub. All credit goes to the researchers involved.
