Transforming Code Generation with AI

Introduction to SelfCodeAlign

Artificial intelligence is changing how code is written in software engineering. Large language models (LLMs) are now widely used for tasks like code synthesis, debugging, and optimization. Building these models, however, depends on high-quality training data, which is expensive and hard to obtain.

The Challenges of Traditional Methods

Training code LLMs often relies on human-curated data or on data produced by proprietary models, which brings high costs and licensing restrictions. Some open-source methods have tried to address these issues but often fall short in performance and transparency. This leaves a need for methods that maintain high quality while staying open and accessible.

Introducing SelfCodeAlign

A team of researchers has developed a new approach called SelfCodeAlign. With this method, an LLM generates its own training data: it produces high-quality instruction-response pairs without human input or proprietary data. It does so by extracting coding concepts from seed data, creating new tasks from those concepts, and validating candidate responses in a controlled execution environment.

How SelfCodeAlign Works

SelfCodeAlign starts by selecting 250,000 high-quality Python functions from a large dataset. It then breaks these functions down into fundamental coding concepts, generates tasks based on those concepts, and produces multiple responses for each task. Only responses that pass automated tests are kept for final tuning: the test filter guards accuracy, while generating tasks from varied concepts supplies diversity.
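The validation step can be sketched as follows. This is a minimal illustration, not the authors' implementation: the function names `passes_tests` and `filter_validated` are hypothetical, the tests are assumed to be plain `assert` statements, and a subprocess with a timeout stands in for a real sandbox.

```python
import subprocess
import sys
import tempfile
from pathlib import Path


def passes_tests(response_code: str, test_code: str, timeout_s: float = 10.0) -> bool:
    """Run a candidate response together with its tests in a separate process.

    Assumption: a subprocess with a timeout is only a stand-in for a real
    sandbox; it does not isolate the file system or the network.
    """
    with tempfile.TemporaryDirectory() as tmp:
        script = Path(tmp) / "candidate.py"
        script.write_text(response_code + "\n\n" + test_code + "\n")
        try:
            result = subprocess.run(
                [sys.executable, str(script)],
                capture_output=True,
                timeout=timeout_s,
                cwd=tmp,
            )
        except subprocess.TimeoutExpired:
            # Treat hanging candidates as failures.
            return False
    # A failed assert or any uncaught exception gives a non-zero exit code.
    return result.returncode == 0


def filter_validated(candidates):
    """Keep only the (instruction, response) pairs whose tests pass.

    `candidates` is an iterable of (instruction, response_code, test_code).
    """
    return [
        (instruction, response_code)
        for instruction, response_code, test_code in candidates
        if passes_tests(response_code, test_code)
    ]


if __name__ == "__main__":
    task = "Write a function add(a, b) that returns the sum of a and b."
    tests = "assert add(2, 3) == 5"
    candidates = [
        (task, "def add(a, b):\n    return a + b", tests),
        (task, "def add(a, b):\n    return a - b", tests),  # fails the test
    ]
    for instruction, response in filter_validated(candidates):
        print(instruction)
        print(response)
```

Running each candidate in its own process means a crash or an infinite loop in one response cannot take down the filtering job, which matters when the responses are machine-generated and unreviewed.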

Performance and Efficiency

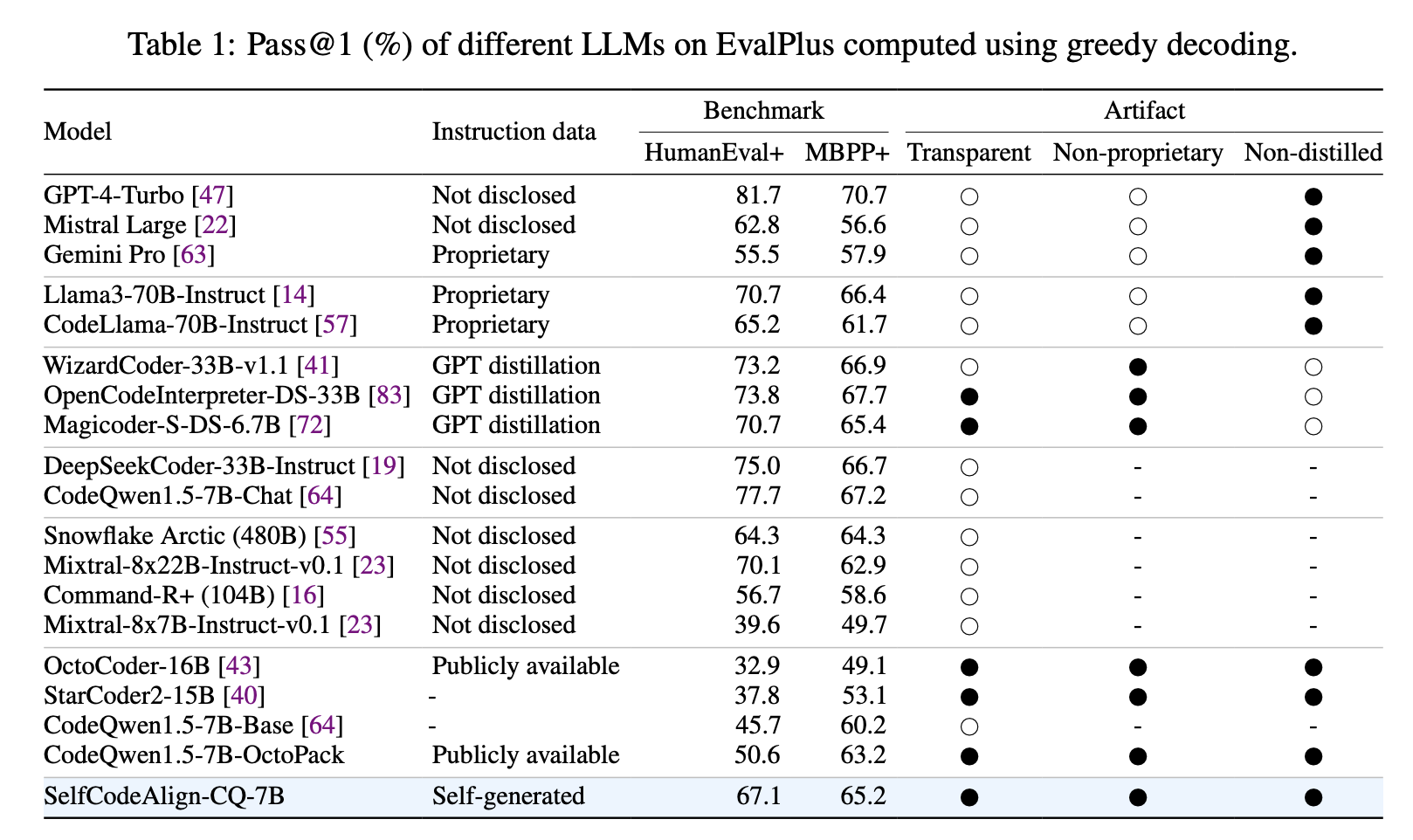

Applied to the CodeQwen1.5-7B model, SelfCodeAlign outperformed many existing models, achieving a HumanEval+ pass@1 score of 67.1%. It performs well across a range of coding tasks, and the code it generates is also efficient, matching or exceeding the efficiency of 79.9% of comparable solutions.
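For readers unfamiliar with the metric, pass@1 is the expected fraction of problems solved when the model gets a single attempt per problem. The sketch below uses the standard unbiased pass@k estimator introduced alongside the original HumanEval benchmark; how many samples per problem were drawn for the score quoted above is not stated here, so the sample counts and the helper names `pass_at_k` and `benchmark_pass_at_k` are illustrative assumptions.

```python
from math import comb


def pass_at_k(n: int, c: int, k: int) -> float:
    """Unbiased pass@k estimate for one problem.

    n: number of samples generated for the problem
    c: number of those samples that pass all tests
    k: number of attempts the metric allows
    """
    if not (0 <= c <= n) or not (1 <= k <= n):
        raise ValueError("require 0 <= c <= n and 1 <= k <= n")
    if n - c < k:
        # Fewer than k failing samples: every draw of k contains a pass.
        return 1.0
    # 1 minus the probability that all k drawn samples are failures.
    return 1.0 - comb(n - c, k) / comb(n, k)


def benchmark_pass_at_k(results, k: int = 1) -> float:
    """Average pass@k over a benchmark.

    `results` is a list of (n, c) pairs, one per problem.
    """
    return sum(pass_at_k(n, c, k) for n, c in results) / len(results)


if __name__ == "__main__":
    # Hypothetical results for three problems, one sample each.
    print(benchmark_pass_at_k([(1, 1), (1, 0), (1, 1)], k=1))
```

With one sample per problem, pass@1 reduces to the plain fraction of problems whose single generated solution passes every test.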

Key Benefits of SelfCodeAlign

- Transparency and Accessibility: It is open-source and does not require proprietary data, so the full pipeline can be inspected and reproduced.

- Efficiency Gains: Smaller models trained on their own generated data can achieve results comparable to larger proprietary models.

- Versatility Across Tasks: It excels in multiple coding tasks, making it useful in various software engineering domains.

- Cost and Licensing Benefits: It operates without costly human-annotated data, making it scalable and economically viable.

- Adaptability for Future Research: Its design can be adapted for use in other technical fields beyond coding.

Conclusion

SelfCodeAlign offers a new way to train code generation models. By eliminating the need for human annotations and proprietary data, it provides a scalable, transparent, and high-performance alternative for developing LLMs. This advancement could reshape the future of open-source AI in coding.
