Introduction to Large Language Models

Large language models (LLMs) underpin many AI systems and drive progress in natural language processing (NLP), computer vision, and scientific research. Their main drawbacks are size and cost: larger models tend to perform better but are more expensive to train and serve. As demand for advanced AI grows, so does the need for more efficient models. One promising approach is the Mixture of Experts (MoE) architecture, which routes each input token to a small subset of specialized sub-networks ("experts"). This lets a model hold a very large number of parameters while using only a fraction of them for any given token.
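The selective activation described above is commonly implemented as top-k routing: a small router scores every expert for a token, only the k best-scoring experts run, and their outputs are blended with softmax weights. The sketch below is a generic, minimal illustration in plain Python under stated assumptions; the dot-product router, the choice k=2, and the names `moe_forward` and `softmax` are hypothetical and are not Hunyuan-Large's actual routing, which the article only describes as "mixed expert routing".

```python
import math
from typing import Callable, List, Sequence

Vector = List[float]
Expert = Callable[[Vector], Vector]


def softmax(xs: Sequence[float]) -> List[float]:
    """Numerically stable softmax."""
    m = max(xs)
    exps = [math.exp(x - m) for x in xs]
    total = sum(exps)
    return [e / total for e in exps]


def moe_forward(token: Vector, router: List[Vector],
                experts: List[Expert], k: int = 2) -> Vector:
    """Hypothetical top-k MoE layer for a single token (illustrative only)."""
    # Router logit per expert: dot product of the token with that expert's router row.
    logits = [sum(t * w for t, w in zip(token, row)) for row in router]
    # Indices of the k highest-scoring experts; all other experts are skipped.
    top = sorted(range(len(experts)), key=lambda i: logits[i], reverse=True)[:k]
    # Turn the selected logits into mixing weights that sum to 1.
    gates = softmax([logits[i] for i in top])
    out = [0.0] * len(token)
    for gate, i in zip(gates, top):
        y = experts[i](token)  # only the selected experts are evaluated
        out = [o + gate * yi for o, yi in zip(out, y)]
    return out


# Usage: four toy experts that scale their input by 1, 2, 3 and 4.
experts = [lambda x, s=s: [s * v for v in x] for s in (1.0, 2.0, 3.0, 4.0)]
router = [[1.0, 0.0], [0.0, 1.0], [-1.0, 0.0], [0.0, -1.0]]
print(moe_forward([2.0, 1.0], router, experts, k=2))  # about [2.538, 1.269]
```

Because experts outside the top k are never called, per-token compute grows with k rather than with the total number of experts, which is the efficiency argument for MoE.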

Hunyuan-Large: A Game Changer

Tencent has released Hunyuan-Large, the largest open-source Transformer-based MoE model in the industry. It has 389 billion parameters in total, of which 52 billion are active for each token, and it supports context lengths of up to 256K tokens. The model combines several new training and efficiency techniques and performs strongly on NLP tasks, outperforming Llama 3.1 70B and matching or exceeding the much larger Llama 3.1 405B on many benchmarks.
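To put the parameter counts in perspective, here is the share of weights in use for any one token, assuming "active" means the parameters a single token actually passes through:

```latex
\frac{52 \times 10^{9}\ \text{(active)}}{389 \times 10^{9}\ \text{(total)}} \approx 0.134
```

So only about 13% of the weights do work for each token. Per-token compute scales roughly with that active share, although all 389 billion parameters still have to be stored in memory.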

Key Features and Advantages

- Massive Data Training: Pre-trained on seven trillion tokens, including diverse synthetic data, to strengthen its performance in mathematics, coding, and multilingual tasks.

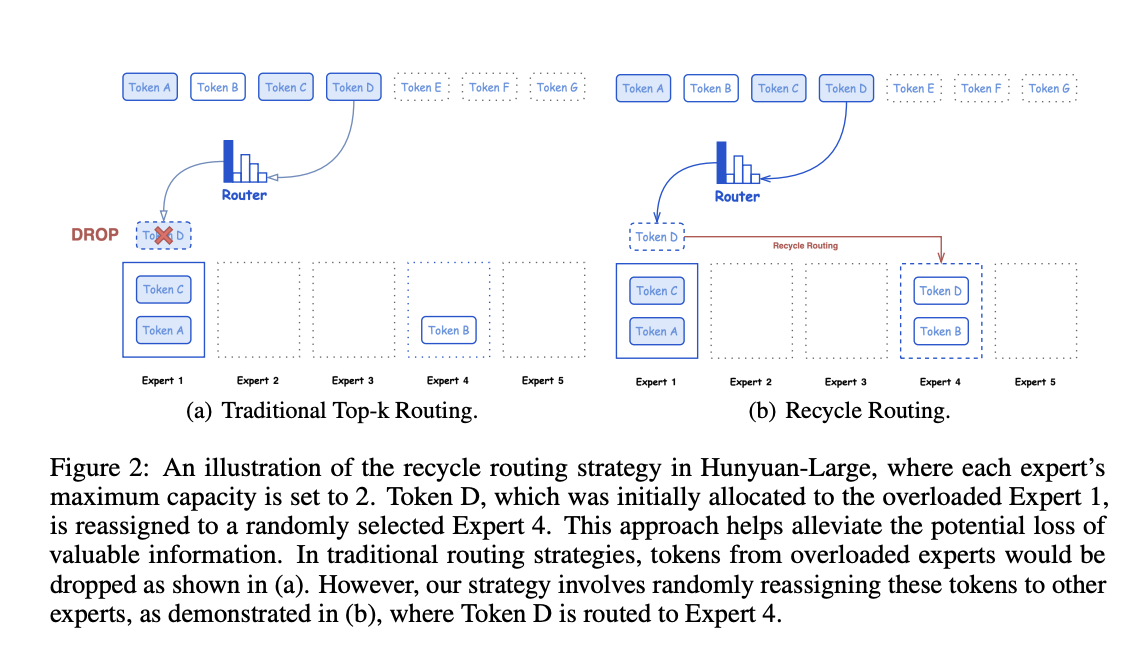

- Efficiency Innovations: Uses a mixed expert routing strategy, KV cache compression, and expert-specific learning rates to improve performance while reducing memory use.

- Open Source Access: Provides an open-source codebase and pre-trained checkpoints for community research and development.
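KV cache compression matters most for long contexts, because the cache of keys and values grows linearly with the number of tokens. The sketch below estimates cache size for one sequence, assuming the compression combines grouped-query attention (GQA, fewer key/value heads than query heads) with cross-layer attention (CLA, adjacent layers sharing one cache). The layer count, head counts, head size, sharing factor, and the names `AttnConfig` and `kv_cache_bytes` are hypothetical values chosen for illustration, not a statement of Hunyuan-Large's exact configuration.

```python
import math
from dataclasses import dataclass


@dataclass
class AttnConfig:
    n_layers: int
    n_kv_heads: int          # heads that store K/V; equals the query heads for plain MHA
    head_dim: int
    layers_per_kv: int = 1   # >1: adjacent layers share one KV cache (CLA-style)
    bytes_per_elem: int = 2  # 2 bytes per value, e.g. fp16/bf16


def kv_cache_bytes(cfg: AttnConfig, n_tokens: int) -> int:
    """Memory needed to cache keys and values for n_tokens of one sequence."""
    # Distinct KV caches left after cross-layer sharing.
    n_caches = math.ceil(cfg.n_layers / cfg.layers_per_kv)
    # Factor 2: one tensor for keys, one for values.
    per_token = 2 * n_caches * cfg.n_kv_heads * cfg.head_dim * cfg.bytes_per_elem
    return per_token * n_tokens


GiB = 1024 ** 3
context = 256 * 1024  # a 256K-token context

# Hypothetical configuration: 64 layers, 80 query heads, head size 128.
mha = AttnConfig(n_layers=64, n_kv_heads=80, head_dim=128)
gqa_cla = AttnConfig(n_layers=64, n_kv_heads=8, head_dim=128, layers_per_kv=2)
print(kv_cache_bytes(mha, context) / GiB)      # 640.0
print(kv_cache_bytes(gqa_cla, context) / GiB)  # 32.0
```

Under these assumptions the cache shrinks 20-fold (8 of 80 heads, and half as many caches as layers), a 95% reduction, which is the kind of saving that makes a 256K-token context practical to serve.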

Performance Highlights

Hunyuan-Large outperforms comparable models on key NLP tasks such as question answering and logical reasoning. For example, it scores 88.4 on the MMLU benchmark, surpassing the 85.2 of Llama 3.1 405B. It also handles long-context tasks well, an area where many current LLMs still struggle.

Conclusion: A Significant Advancement

Tencent’s Hunyuan-Large marks a major milestone in Transformer-based MoE models. With its technical improvements and massive scale, it provides a powerful tool for researchers and industry professionals, paving the way for more accessible and capable AI solutions.

AI for Business Growth

Leverage AI to stay competitive: Discover automation opportunities, define KPIs, select suitable AI solutions, and implement them gradually.

For AI KPI management advice, reach us at hello@itinai.com. Stay updated on AI insights via our Telegram or Twitter.

Explore AI solutions for enhancing sales processes and customer engagement at itinai.com.