Challenges in AI Research

Training large language and vision models takes a great deal of compute, and that is a major obstacle for AI research. For example, Pythia-1B was originally trained on 64 GPUs for three days, while RoBERTa was trained on about 1,000 GPUs for one day. Demand on this scale keeps many academic labs from running essential experiments and makes it hard for researchers to plan budgets and allocate resources.

Current Solutions and Limitations

Some efforts have been made to tackle these computational issues, such as:

- Compute Surveys: These explore resource access and environmental impacts but mainly focus on NLP.

- Training Optimization Techniques: These often require specialized knowledge for manual tuning.

- Hardware Recommendations: While helpful, they focus more on throughput than practical training times.

However, these methods do not fully address the need for solutions that are model-agnostic and focused on replication.

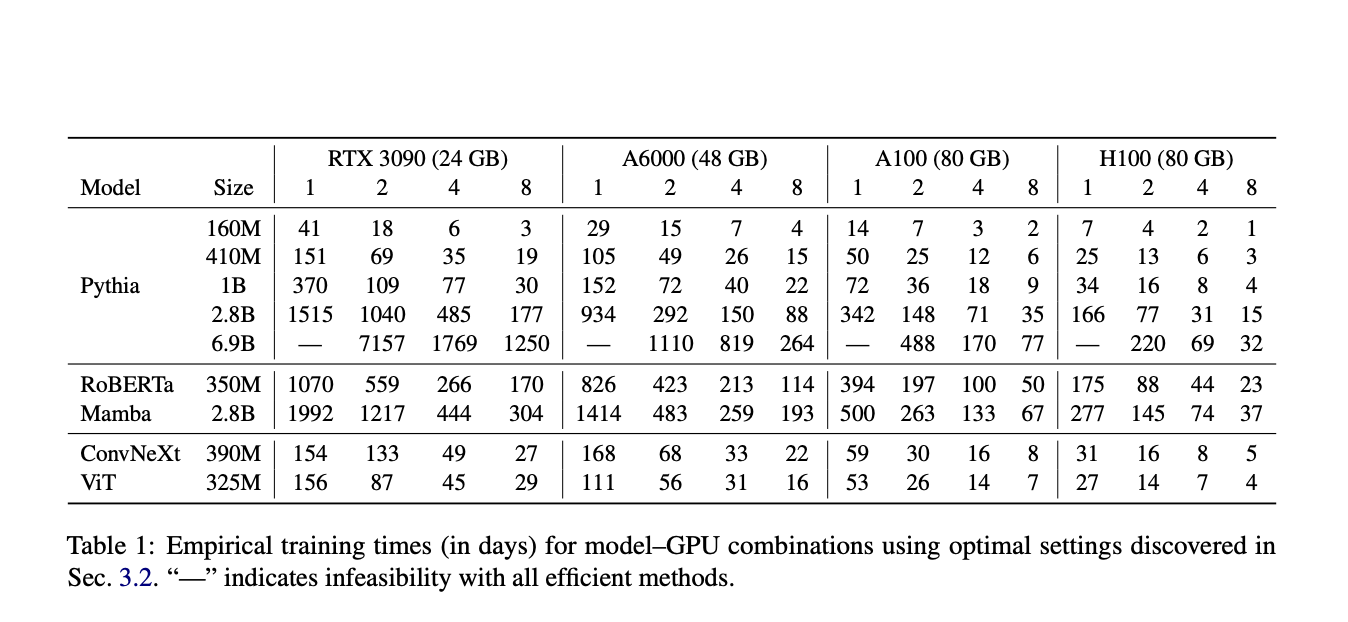

Innovative Approach from Brown University

Researchers from Brown University have developed a new approach to improve pre-training capabilities in academic settings. Their method combines a survey of available computational resources with actual measurements of model training times. They created a benchmark system to evaluate training durations across different GPUs and find the best settings for efficiency.
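The idea of benchmarking training time across settings and keeping the fastest one that fits in memory can be sketched in a few lines. This is a minimal illustration, not the researchers' benchmark: the option names, the `simulated_step` function, and every number in it are hypothetical stand-ins for real timed training steps on real GPUs.

```python
from itertools import product

# Hypothetical option names; the article names the techniques, not this interface.
OPTIONS = ("compile", "custom_kernels", "activation_checkpointing", "sharding")


def simulated_step(config, gpu_memory_gb):
    """Stand-in for timing one real training step.

    Returns seconds per step, or None if the configuration would not fit
    in GPU memory. All factors below are invented for illustration.
    """
    time_s, memory_gb = 1.0, 60.0
    if "compile" in config:
        time_s *= 0.8
    if "custom_kernels" in config:
        time_s *= 0.7
        memory_gb -= 10
    if "activation_checkpointing" in config:
        time_s *= 1.3  # recomputation costs time
        memory_gb -= 25
    if "sharding" in config:
        time_s *= 1.1  # communication costs time
        memory_gb -= 15
    return time_s if memory_gb <= gpu_memory_gb else None


def fastest_config(gpu_memory_gb):
    """Try every on/off combination and keep the fastest one that fits."""
    best = None
    for mask in product([False, True], repeat=len(OPTIONS)):
        config = frozenset(o for o, on in zip(OPTIONS, mask) if on)
        step_time = simulated_step(config, gpu_memory_gb)
        if step_time is not None and (best is None or step_time < best[1]):
            best = (config, step_time)
    return best


for memory in (80, 24):
    config, step_time = fastest_config(memory)
    print(memory, sorted(config), round(step_time, 3))
```

In this toy model, a large-memory GPU only needs the options that speed training up, while a small-memory GPU has to enable the memory-saving options just to run at all. An exhaustive search is used because there are only 16 combinations to try.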

Key Findings

Through extensive testing, they found that academic hardware can go further than commonly assumed. For instance, Pythia-1B can be replicated in roughly three times fewer GPU-days than its original training run used.

Optimization Strategies

The proposed method uses two main strategies:

- Free-Lunch Methods: These improve throughput and reduce memory usage without performance loss or user intervention. Examples include model compilation and using custom kernels.

- Memory-Saving Methods: These reduce memory consumption but may involve some performance trade-offs. Key components include activation checkpointing and model sharding.
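The trade-off behind activation checkpointing can be made concrete with a simplified cost model. The sketch below is an assumption-laden illustration, not the paper's method: it assumes every layer's activation has the same size, that one checkpoint is stored per segment of layers, and that one segment is recomputed at a time during the backward pass. The layer count of 16 is only an example value.

```python
import math


def peak_activations(num_layers: int, segment: int) -> int:
    """Activations held at peak under the simplified model.

    One checkpoint is kept per segment, plus the activations of the single
    segment currently being recomputed for the backward pass.
    """
    checkpoints = math.ceil(num_layers / segment)
    return checkpoints + segment


def best_segment(num_layers: int) -> int:
    """Segment length that minimizes peak stored activations."""
    return min(range(1, num_layers + 1),
               key=lambda s: peak_activations(num_layers, s))


layers = 16          # illustrative value
baseline = layers    # without checkpointing, every activation is kept
segment = best_segment(layers)
print("segment length:", segment)
print("peak activations:", peak_activations(layers, segment), "vs", baseline)
```

Under these assumptions the best segment length is close to the square root of the layer count. The memory saved is paid for with roughly one extra forward pass of computation, which is the performance trade-off mentioned above.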

Results of Optimization

The optimizations yielded a 4.3 times speedup in training time. With them, Pythia-1B can be trained in 18 days on just 4 GPUs, compared with the 64 GPUs used for three days in the original run. Surprisingly, some memory-saving methods also improved training time by up to 71% when GPU memory was limited.
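The Pythia-1B figures are easiest to compare in GPU-days, the number of GPUs multiplied by the days of training. The short calculation below uses the 64-GPU, three-day figure quoted earlier and assumes the 18-day run used 4 GPUs, as the paper's abstract reports. The helper name `gpu_days` is introduced here only for illustration.

```python
def gpu_days(num_gpus: int, days: int) -> int:
    """Total compute budget expressed as GPUs multiplied by days."""
    return num_gpus * days


original = gpu_days(64, 3)    # original Pythia-1B run
replicated = gpu_days(4, 18)  # assumption: the 18-day run used 4 GPUs

print("original GPU-days:", original)      # 192
print("replicated GPU-days:", replicated)  # 72
print("reduction factor:", round(original / replicated, 2))
```

The run takes six times longer on the calendar, but uses roughly a third of the total compute and a sixteenth of the GPUs, which is the trade an academic lab with a small cluster can actually make.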

Conclusion

This research helps academic institutions train large models despite limited resources. The benchmark tools let researchers evaluate candidate hardware setups before making large investments, for example by running preliminary tests on cloud platforms.
