Advancements in AI with GPT-4o and GPT-4o-mini

The large language models GPT-4o and GPT-4o-mini have significantly improved language processing: they generate high-quality responses, rewrite documents, and boost productivity across many applications. One major remaining issue is latency, which slows down tasks such as updating blog posts or refining code and leads to frustrating delays.

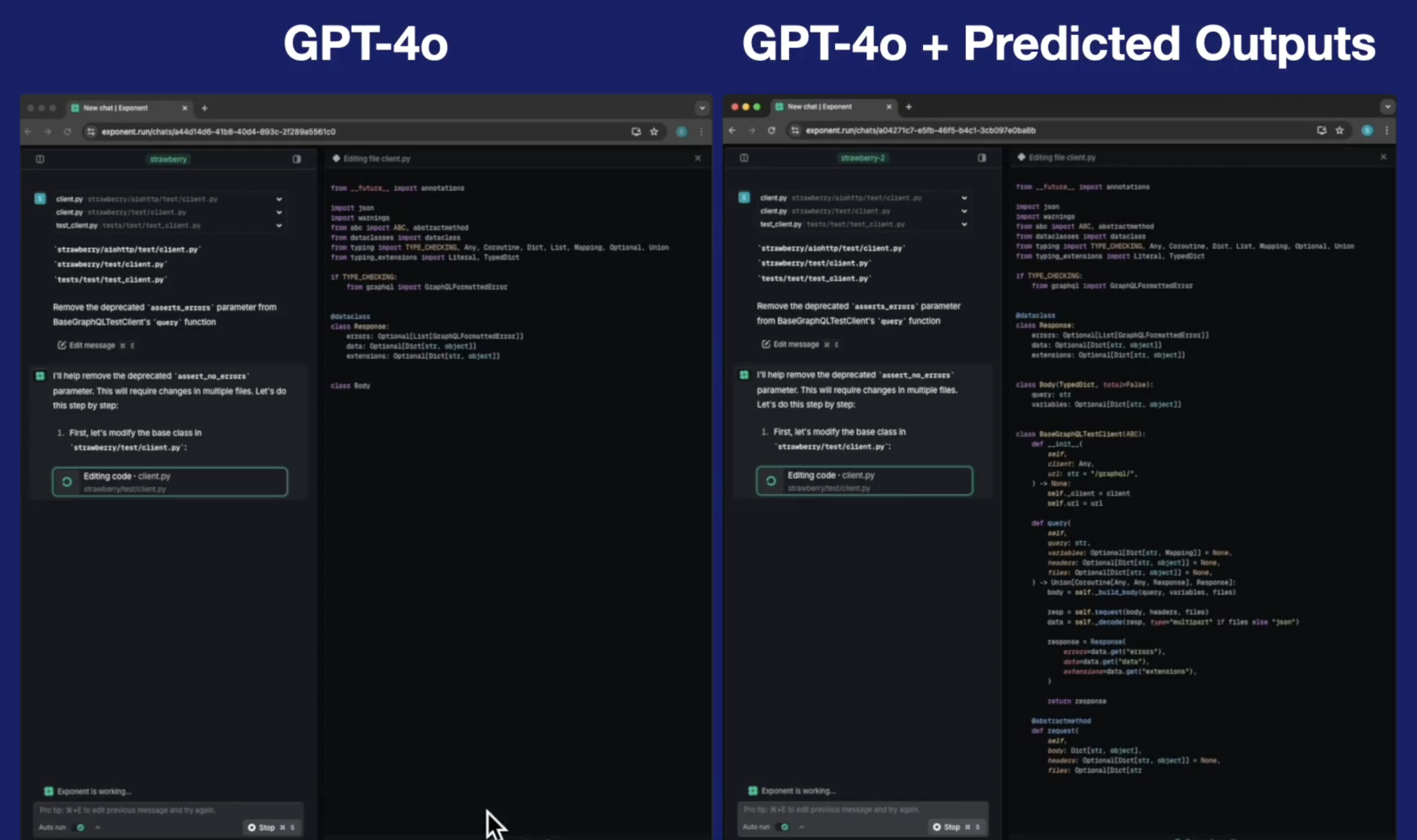

Introducing Predicted Outputs

OpenAI’s new Predicted Outputs feature addresses this latency issue. The caller supplies a reference string, a prediction of what the output is likely to contain, and the model uses it to avoid regenerating content that is already known. This can make responses up to five times faster, which makes GPT-4o much more practical for real-time tasks like document updates and code editing, where most of the output repeats the input. This is especially valuable for developers and content creators who need quick updates without interruptions.
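As a rough sketch of what supplying a reference string looks like, the Chat Completions API accepts a `prediction` parameter of the form `{"type": "content", "content": ...}`. The helper names `build_predicted_request` and `rewrite` below are hypothetical, chosen only for illustration, and `client` is assumed to be an `openai.OpenAI()` instance created elsewhere.

```python
from typing import Any


def build_predicted_request(
    original_text: str, instruction: str, model: str = "gpt-4o"
) -> dict[str, Any]:
    """Build keyword arguments for a chat completion that carries a prediction.

    Hypothetical helper: it only assembles the request, it does not send it.
    """
    return {
        "model": model,
        "messages": [
            {"role": "user", "content": instruction},
            {"role": "user", "content": original_text},
        ],
        # The existing text doubles as the prediction, because most of it
        # is expected to reappear unchanged in the edited output.
        "prediction": {"type": "content", "content": original_text},
    }


def rewrite(client: Any, original_text: str, instruction: str) -> str:
    """Send the request. `client` is assumed to be an `openai.OpenAI()` instance."""
    request = build_predicted_request(original_text, instruction)
    response = client.chat.completions.create(**request)
    return response.choices[0].message.content
```

The prediction is most useful when the edit is small relative to the document, for example renaming one variable in a long file, since that is when most of the reference string can be reused.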

How It Works

Predicted Outputs use a method called speculative decoding. Instead of generating every token one at a time, the model checks the supplied prediction against what it would have produced, accepts the stretches that match, and generates fresh text only where the output needs to differ. This means users can iterate quickly on previous responses, which helps with collaboration on live documents, code refactoring, and real-time updates of articles. The efficiency can also lessen the burden on infrastructure, although predicted tokens that the model rejects are still billed as output tokens, so savings are not guaranteed.
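The accept-or-fall-back idea can be illustrated with a toy sketch. This is not OpenAI's implementation: the "model" here is a hypothetical stand-in that simply replays a fixed target sequence, tokens are whitespace-separated words, and the re-sync step after a mismatch naively assumes a one-for-one substitution.

```python
from typing import Callable, Optional, Sequence

Token = str
NextTokenFn = Callable[[Sequence[Token]], Optional[Token]]


def make_toy_model(target: Sequence[Token]) -> NextTokenFn:
    """Hypothetical stand-in for a model: it always continues toward `target`."""
    def next_token(context: Sequence[Token]) -> Optional[Token]:
        return target[len(context)] if len(context) < len(target) else None
    return next_token


def decode_with_prediction(
    next_token: NextTokenFn, prediction: Sequence[Token]
) -> tuple[list[Token], int, int]:
    """Return (output, tokens accepted from the prediction, verification rounds)."""
    output: list[Token] = []
    accepted = 0
    rounds = 0
    pos = 0  # index of the next unused prediction token
    while True:
        rounds += 1
        # Accept predicted tokens for as long as they match the model's choice.
        # A real system would score the whole draft in one batched pass;
        # this toy loop checks the tokens one at a time.
        while pos < len(prediction) and next_token(output) == prediction[pos]:
            output.append(prediction[pos])
            accepted += 1
            pos += 1
        # The prediction diverged or ran out: use the model's own token.
        token = next_token(output)
        if token is None:
            return output, accepted, rounds
        output.append(token)
        pos += 1  # naive re-sync: assume the model replaced exactly one token


prediction = "def add ( a , b ) : return a + b".split()
target = "def add ( x , y ) : return x + y".split()
output, accepted, rounds = decode_with_prediction(make_toy_model(target), prediction)
print(" ".join(output), accepted, rounds)
```

In this example eight of the twelve output tokens are taken directly from the prediction, and the output is assembled in five rounds rather than twelve single-token steps. The more the prediction diverges from the final text, the smaller that advantage becomes.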

Why This Matters

The reduction in latency provided by Predicted Outputs is crucial for real-world applications. Developers can work faster with AI tools for rewriting code, and content creators can update their work in real time without delays. Testing has shown that GPT-4o can perform tasks like document editing and code rewriting up to five times faster, making it more practical for a wide range of users, including developers, writers, and educators.

Conclusion

The launch of the Predicted Outputs feature marks a significant improvement in addressing latency in language models. With speculative decoding, tasks like document editing and code refactoring become much quicker and more user-friendly. This enhancement allows users to focus on creativity and problem-solving rather than waiting for responses. It’s a valuable development for anyone looking to enhance productivity through AI.

