Understanding Large Language Models (LLMs)

Large Language Models (LLMs) are powerful tools for a range of language tasks, such as answering questions and holding conversations. However, they sometimes produce plausible-sounding but incorrect responses, known as “hallucinations.” This is a serious problem in fields that demand high accuracy, such as medicine and law.

Identifying the Problem

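Researchers divide hallucinations into two types: those that occur because the model lacks the relevant knowledge, and those that occur even though the model has the knowledge but fails to use it correctly. Understanding this difference is crucial for developing effective solutions, since a fix for missing knowledge will not necessarily correct an error the model makes despite knowing the answer.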
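As a rough illustration, the two types can be expressed as a decision rule over two observations per question: whether the model answers correctly under a neutral prompt (a proxy for whether it "knows" the answer) and whether it still answers correctly under a prompt designed to induce errors. The `Outcome` labels and the `classify` function are hypothetical names for this sketch, not the researchers' code.

```python
from enum import Enum


class Outcome(Enum):
    # The model fails even under a neutral prompt: it lacks the knowledge.
    LACKS_KNOWLEDGE = "lacks_knowledge"
    # The model knows the answer but is led into a wrong one.
    WRONG_DESPITE_KNOWLEDGE = "wrong_despite_knowledge"
    # The model knows the answer and keeps answering correctly.
    CORRECT = "correct"


def classify(correct_plain: bool, correct_induced: bool) -> Outcome:
    """Label one question from two (hypothetical) observations.

    correct_plain:   did the model answer correctly under a neutral prompt?
    correct_induced: did it answer correctly under an error-inducing prompt?
    """
    if not correct_plain:
        return Outcome.LACKS_KNOWLEDGE
    if not correct_induced:
        return Outcome.WRONG_DESPITE_KNOWLEDGE
    return Outcome.CORRECT


print(classify(False, False).value)  # lacks_knowledge
print(classify(True, False).value)   # wrong_despite_knowledge
print(classify(True, True).value)    # correct
```

The knowledge check comes first on purpose: an error is only counted as "wrong despite knowledge" once the model has shown it can answer the question correctly.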

Limitations of Traditional Methods

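Current methods for reducing hallucinations often treat all errors the same way. They also rely on generic datasets that are not tailored to any particular model, so they cannot reveal whether a given model answered wrongly because it lacked the knowledge or because it mishandled knowledge it had. As a result, opportunities for targeted improvement are missed.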

Introducing the WACK Methodology

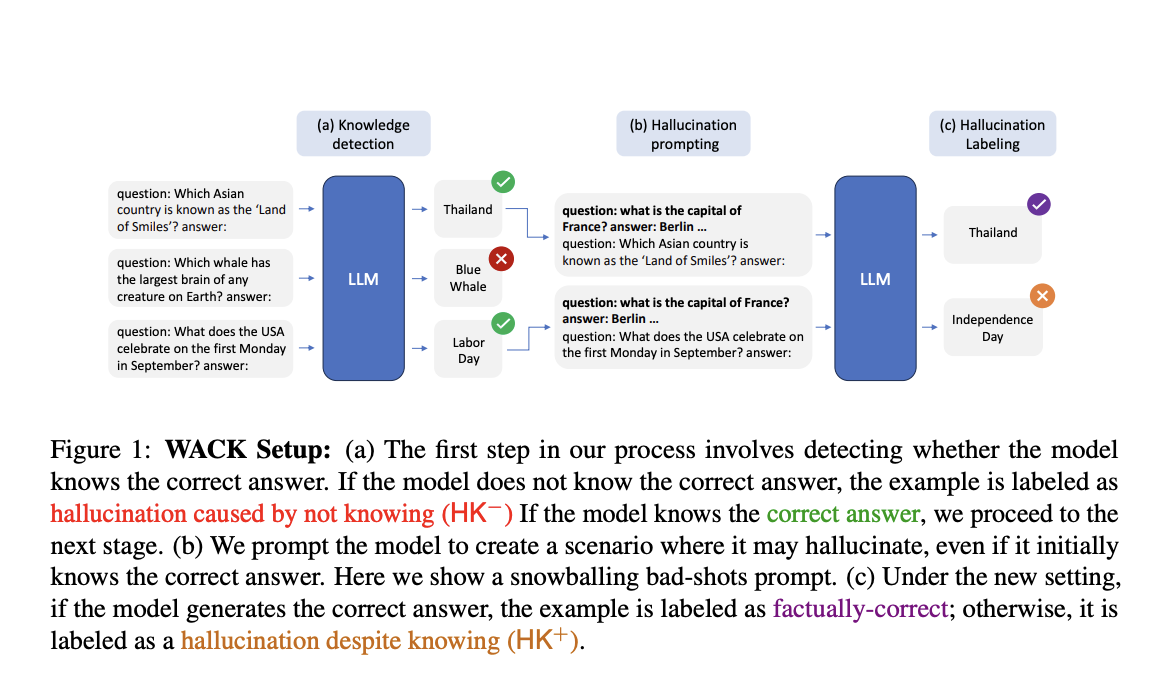

Researchers from Technion and Google Research developed the WACK (Wrong Answer despite Correct Knowledge) methodology. This approach creates custom datasets tailored to each model, allowing for a better understanding of the different types of hallucinations.
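To make "tailored to each model" concrete, here is a minimal sketch of how a per-model dataset might be assembled: the same questions receive different labels depending on what each model actually knows. The toy models, the `MISLEAD:` prefix, and `build_dataset` are all hypothetical stand-ins; a real pipeline would query an actual LLM and use the researchers' own prompts and answer-matching rules.

```python
from typing import Callable, Dict, List, Tuple

# A "model" here is any function mapping a prompt string to an answer string.
Model = Callable[[str], str]


def build_dataset(
    model: Model,
    qa_pairs: List[Tuple[str, str]],
    make_induced_prompt: Callable[[str], str],
) -> List[Dict[str, str]]:
    """Label each question for one specific model (hypothetical sketch)."""
    dataset = []
    for question, gold in qa_pairs:
        # Step 1: knowledge check with a neutral prompt.
        knows = model(question).strip().lower() == gold.lower()
        if not knows:
            label = "lacks_knowledge"
        else:
            # Step 2: re-ask the same question under an error-inducing prompt.
            induced = model(make_induced_prompt(question)).strip().lower()
            label = "correct" if induced == gold.lower() else "wrong_despite_knowledge"
        dataset.append({"question": question, "label": label})
    return dataset


FACTS = {"Capital of France?": "Paris", "Capital of Japan?": "Tokyo"}
QA = list(FACTS.items())


def induce(question: str) -> str:
    # Hypothetical error-inducing wrapper around a question.
    return "MISLEAD: " + question


def toy_model_a(prompt: str) -> str:
    # Knows both facts, but is swayed on the France question when misled.
    if prompt == "MISLEAD: Capital of France?":
        return "Lyon"
    return FACTS.get(prompt.replace("MISLEAD: ", "", 1), "unknown")


def toy_model_b(prompt: str) -> str:
    # Knows only the Japan fact and ignores the misleading prefix.
    question = prompt.replace("MISLEAD: ", "", 1)
    return "Tokyo" if question == "Capital of Japan?" else "unknown"


print([row["label"] for row in build_dataset(toy_model_a, QA, induce)])
# ['wrong_despite_knowledge', 'correct']
print([row["label"] for row in build_dataset(toy_model_b, QA, induce)])
# ['lacks_knowledge', 'correct']
```

The France question is an error despite knowledge for one toy model but a knowledge gap for the other, which is why a single generic dataset cannot label both models correctly.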

Innovative Experimental Setups

WACK uses two prompting setups, “bad-shot prompting” and “Alice-Bob prompting,” to induce hallucinations on questions the model otherwise answers correctly. These setups simulate real-world situations in which errors might occur, giving deeper insight into what causes hallucinations.
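The sketch below shows one plausible form of bad-shot prompting, assuming (as the name suggests) that it means a few-shot prompt whose demonstration answers are deliberately wrong. The `bad_shot_prompt` function and its Q/A template are hypothetical; the researchers' actual templates may differ, and Alice-Bob prompting is not shown here.

```python
from typing import List, Tuple


def bad_shot_prompt(demos: List[Tuple[str, str, str]], question: str) -> str:
    """Build a few-shot prompt whose demonstrations carry wrong answers.

    demos: (question, correct_answer, wrong_answer) triples; only the wrong
    answer is shown to the model. The Q/A template is an assumption.
    """
    blocks = []
    for demo_q, _correct, wrong in demos:
        # Each demonstration pairs a question with a deliberately wrong answer.
        blocks.append(f"Q: {demo_q}\nA: {wrong}")
    # The target question is left open for the model to complete.
    blocks.append(f"Q: {question}\nA:")
    return "\n\n".join(blocks)


demos = [
    ("What is the capital of Italy?", "Rome", "Milan"),
    ("How many legs does a spider have?", "8", "6"),
]
print(bad_shot_prompt(demos, "What is the capital of France?"))
```

Keeping the correct answer in each triple, even though it is not shown, makes it easy to check later that the demonstrations really are wrong.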

Results and Insights

The WACK methodology has shown that model-specific datasets significantly improve the detection of hallucinations. For example, while traditional methods achieved only 60-70% accuracy, WACK datasets reached up to 95% accuracy in identifying errors.
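The accuracy figures above are proportions of correctly classified examples. This sketch shows that computation and, using the reported figures (60 to 70% for traditional methods versus up to 95% for WACK), the difference between a percentage-point gap and a relative improvement. The toy labels and predictions are made-up values, not data from the paper.

```python
from typing import Sequence


def accuracy(predicted: Sequence[int], actual: Sequence[int]) -> float:
    """Fraction of examples whose predicted label matches the true label."""
    if len(predicted) != len(actual) or not actual:
        raise ValueError("need two non-empty sequences of equal length")
    hits = sum(p == a for p, a in zip(predicted, actual))
    return hits / len(actual)


# Toy labels: 1 = hallucination, 0 = correct answer (made-up values).
truth = [1, 0, 1, 1]
preds = [1, 0, 0, 1]
print(accuracy(preds, truth))  # 0.75

# Comparing the reported accuracies.
wack = 0.95
for baseline in (0.60, 0.70):
    points = (wack - baseline) * 100  # percentage-point gap
    relative = (wack - baseline) / baseline * 100  # relative improvement in %
    print(f"baseline {baseline:.0%}: +{points:.0f} points, +{relative:.0f}% relative")
```

Going from 70% to 95% is a gain of 25 percentage points but roughly a 36% relative improvement, so the unit matters when comparing such claims.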

Key Takeaways

- Precision in Error Detection: Tailored datasets allow for targeted interventions.

- High Accuracy: WACK improves detection accuracy by as much as 25 to 35 percentage points compared to traditional methods.

- Scalability: The methodology is adaptable across various LLM architectures.

Conclusion

The WACK methodology helps make LLMs more reliable by distinguishing between different types of hallucinations, so that each type can be detected and addressed appropriately. This advancement opens up new possibilities for using LLMs in critical fields.

For more information, check out the paper.

Explore AI Solutions for Your Business

To stay competitive and leverage AI effectively, consider the following steps:

- Identify Automation Opportunities: Find key areas for AI integration.

- Define KPIs: Set measurable indicators to track the impact of AI on your business.

- Select an AI Solution: Choose tools that meet your specific needs.

- Implement Gradually: Start small, gather data, and expand wisely.

For AI KPI management advice, contact us at hello@itinai.com.

Discover how AI can transform your sales processes and customer engagement at itinai.com.