Transforming Natural Language Processing with SmolLM2

Recent advances in large language models (LLMs) such as GPT-4 and Meta’s Llama have changed how we handle natural language tasks. These models come with heavy resource demands, however: they need extensive compute and memory, which makes them impractical on constrained devices such as smartphones, and running them locally is costly in both hardware and energy. This has created demand for smaller, efficient models that still deliver strong performance on-device.

Introducing SmolLM2

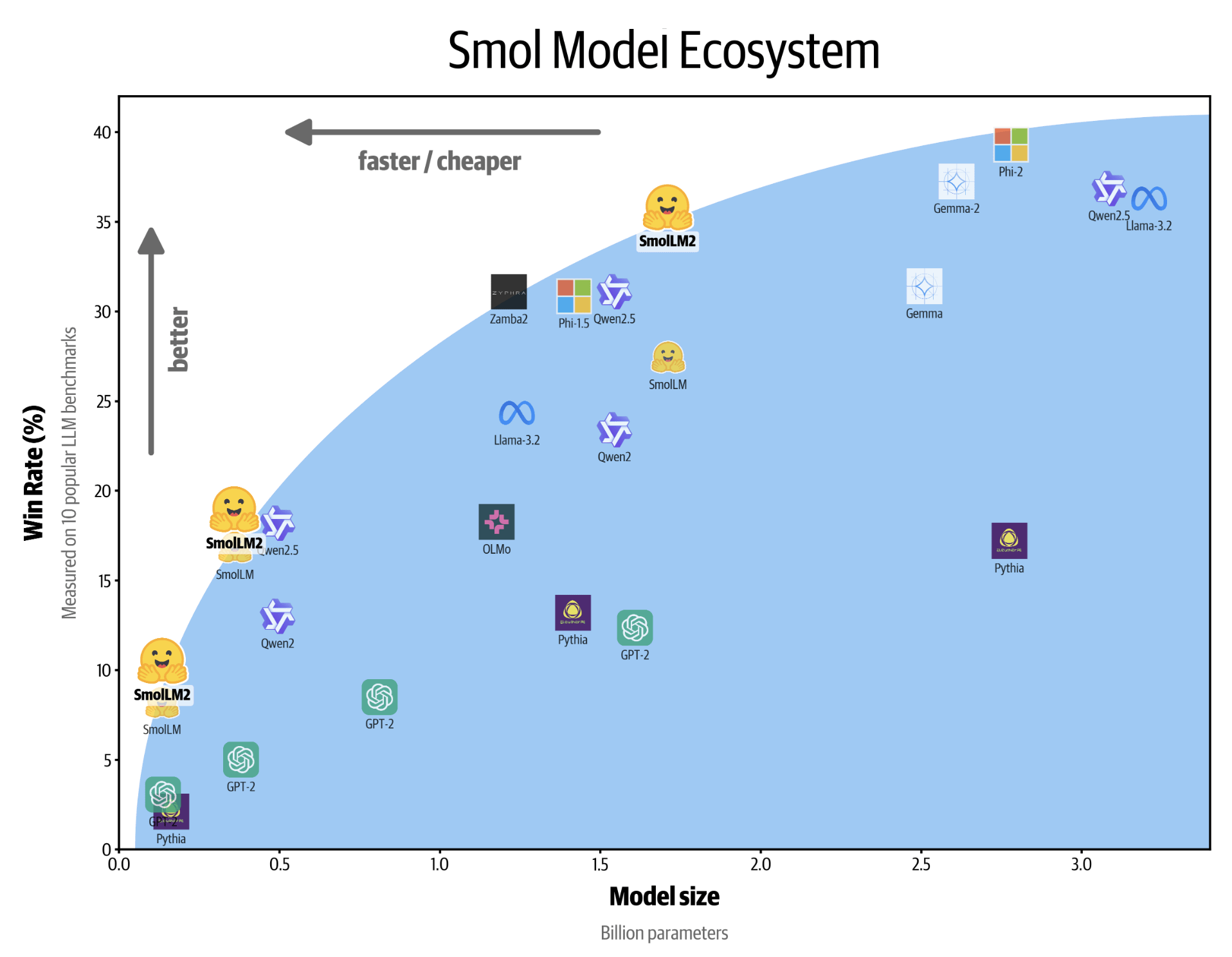

Hugging Face has addressed this need with the release of SmolLM2, a series of compact models designed for on-device applications. Building on SmolLM1, SmolLM2 provides enhanced capabilities while remaining lightweight. It comes in three sizes: 135M, 360M, and 1.7B parameters. The key benefit is that these models can run directly on devices, eliminating the need for large cloud-based infrastructure. This suits use cases where speed, privacy, and hardware constraints are critical.
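To make the "runs on-device" claim concrete, the back-of-the-envelope sketch below estimates how much memory the weights alone would occupy at a few common precisions. It uses the nominal parameter counts (135M, 360M, 1.7B); the precision table and the helper name `weight_memory_gb` are illustrative assumptions, and real usage is higher once activations, the KV cache, and runtime overhead are included.

```python
# Nominal parameter counts for the three SmolLM2 sizes.
PARAM_COUNTS = {
    "SmolLM2-135M": 135_000_000,
    "SmolLM2-360M": 360_000_000,
    "SmolLM2-1.7B": 1_700_000_000,
}

# Bytes needed to store one weight at common precisions (illustrative).
BYTES_PER_PARAM = {"fp32": 4.0, "fp16": 2.0, "int8": 1.0, "int4": 0.5}


def weight_memory_gb(num_params: int, precision: str) -> float:
    """Approximate memory for the weights alone, in decimal gigabytes."""
    return num_params * BYTES_PER_PARAM[precision] / 1e9


if __name__ == "__main__":
    for name, count in PARAM_COUNTS.items():
        row = ", ".join(
            f"{p}: {weight_memory_gb(count, p):.2f} GB" for p in BYTES_PER_PARAM
        )
        print(f"{name} -> {row}")
```

Under these assumptions, the 1.7B model needs roughly 3.4 GB for its weights at 16-bit precision and under 1 GB at 4-bit, which is why quantized builds are the usual choice on phones and laptops.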

Compact and Versatile

SmolLM2 models are trained on up to 11 trillion tokens (the figure for the 1.7B model) from diverse datasets, focusing primarily on English text. They handle tasks like text rewriting, summarization, and function calling well, making them practical for applications in environments with limited connectivity. In reported benchmarks, the 1.7B model outperforms Meta Llama 3.2 1B and, in certain areas, surpasses Qwen2.5 1.5B.
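As an illustration of the summarization use case, here is a minimal sketch that runs an instruction-tuned checkpoint on a local CPU with the Hugging Face Transformers library in Python. The Hub id `HuggingFaceTB/SmolLM2-1.7B-Instruct`, the prompt wording, and the helper names `build_summary_messages` and `summarize` are assumptions made for this example, not details from the article; it also assumes `transformers` and `torch` are installed.

```python
from typing import Dict, List

# Assumed Hub id for the instruction-tuned 1.7B checkpoint.
MODEL_ID = "HuggingFaceTB/SmolLM2-1.7B-Instruct"


def build_summary_messages(text: str) -> List[Dict[str, str]]:
    """Wrap the input text in a single-turn chat asking for a summary."""
    return [
        {
            "role": "user",
            "content": f"Summarize the following text in one sentence:\n\n{text}",
        }
    ]


def summarize(text: str, max_new_tokens: int = 60) -> str:
    """Generate a summary locally (weights are downloaded on first use)."""
    # Imported lazily so the helper above works without the heavy dependency.
    from transformers import AutoModelForCausalLM, AutoTokenizer

    tokenizer = AutoTokenizer.from_pretrained(MODEL_ID)
    model = AutoModelForCausalLM.from_pretrained(MODEL_ID)

    # Render the chat into the prompt format the model was tuned on.
    prompt = tokenizer.apply_chat_template(
        build_summary_messages(text), tokenize=False, add_generation_prompt=True
    )
    inputs = tokenizer(prompt, return_tensors="pt")
    outputs = model.generate(**inputs, max_new_tokens=max_new_tokens)

    # Drop the prompt tokens and decode only the newly generated ones.
    new_tokens = outputs[0][inputs["input_ids"].shape[1]:]
    return tokenizer.decode(new_tokens, skip_special_tokens=True)
```

Calling `summarize(article_text)` returns the model's one-sentence summary as a string. Swapping `MODEL_ID` for a smaller checkpoint trades quality for lower memory use and faster generation.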

Advanced Post-Training Techniques

SmolLM2 is post-trained with Supervised Fine-Tuning (SFT) and Direct Preference Optimization (DPO). These techniques improve the models’ ability to follow complex instructions and deliver accurate responses. Moreover, their compatibility with frameworks like llama.cpp and Transformers.js allows efficient on-device inference on local CPUs or in browsers, without requiring specialized GPUs. This flexibility makes SmolLM2 well suited to edge AI applications that prioritize low latency and data privacy.
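For readers unfamiliar with DPO, the sketch below computes the standard DPO loss for a single preference pair from four sequence log-probabilities. It illustrates the general objective, not SmolLM2's actual training code; the function name `dpo_loss` and the default `beta=0.1` are assumptions chosen for the example.

```python
import math


def dpo_loss(
    policy_chosen_logp: float,
    policy_rejected_logp: float,
    ref_chosen_logp: float,
    ref_rejected_logp: float,
    beta: float = 0.1,  # assumed value; controls how far the policy may drift
) -> float:
    """DPO loss for one (chosen, rejected) pair of responses.

    Each argument is the summed log-probability of a full response under
    either the model being trained (policy) or the frozen reference model.
    """
    # How much more the policy likes each response than the reference does.
    chosen_margin = policy_chosen_logp - ref_chosen_logp
    rejected_margin = policy_rejected_logp - ref_rejected_logp
    logits = beta * (chosen_margin - rejected_margin)

    # -log(sigmoid(logits)), written in a numerically stable form.
    if logits >= 0:
        return math.log1p(math.exp(-logits))
    return -logits + math.log1p(math.exp(logits))
```

The loss equals log 2 when the policy and reference agree, and it falls as the policy assigns relatively more probability to the preferred response than the reference does.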

Significant Improvements Over SmolLM1

SmolLM2 makes capable LLMs practical on a wider range of devices. Compared to SmolLM1, which was limited in task handling and reasoning, SmolLM2 improves markedly, especially in the 1.7B parameter version. It adds more advanced capabilities such as function calling, which makes it useful for automated coding and personal AI apps.
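Function calling means the model emits a structured request that the application then executes. The sketch below shows the application side under a simplifying assumption: the model's output is a JSON object with `name` and `arguments` fields. That format, the `get_weather` tool, and the `dispatch_tool_call` helper are all hypothetical and chosen only for illustration; the exact format SmolLM2 produces may differ.

```python
import json
from typing import Any, Callable, Dict


def get_weather(city: str) -> str:
    """Hypothetical tool; a real app would query a weather service here."""
    return f"Weather lookup requested for {city}"


# Registry of functions the model is allowed to call.
TOOLS: Dict[str, Callable[..., Any]] = {"get_weather": get_weather}


def dispatch_tool_call(model_output: str) -> Any:
    """Parse a JSON tool call (assumed format) and run the matching tool."""
    call = json.loads(model_output)
    name = call["name"]
    if name not in TOOLS:
        # Never execute anything that is not explicitly registered.
        raise ValueError(f"Unknown tool: {name}")
    arguments = call.get("arguments", {})
    return TOOLS[name](**arguments)


if __name__ == "__main__":
    example = '{"name": "get_weather", "arguments": {"city": "Paris"}}'
    print(dispatch_tool_call(example))
```

Keeping an explicit registry means the model can only trigger functions the application has deliberately exposed, which matters when the model runs unattended on a user's device.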

Impressive Benchmark Results

Benchmark scores reflect these improvements: SmolLM2 is competitive with Meta Llama 3.2 1B, often matching or exceeding it. Its compact size lets it run in environments where larger models are impractical, which matters for industries concerned with infrastructure costs and real-time processing.

Efficient and Versatile Solutions

SmolLM2 is designed for high performance with sizes ranging from 135 million to 1.7 billion parameters, balancing versatility and efficiency. It handles text rewriting, summarization, and function calling while improving mathematical reasoning, which makes it a cost-effective choice for on-device AI. As small language models gain prominence in privacy-focused and latency-sensitive applications, SmolLM2 is a strong option for on-device NLP.
