Streamlining AI Model Deployment with Run AI: Model Streamer

In the fast-paced world of AI and machine learning, deploying models quickly is crucial. Yet data scientists often struggle with slow load times for trained models, whether the weights are stored locally or in the cloud. These delays hinder productivity and hurt user satisfaction, especially in real-world applications where speed matters, so addressing these loading inefficiencies is essential for delivering timely insights.

Introducing Run AI: Model Streamer

Run AI has launched an open-source tool called Run AI: Model Streamer. It significantly reduces the time required to load models for inference, easing a major pain point for the AI community. By speeding up how model weights are read from storage, it enables faster and smoother deployment.
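The article does not detail how the loader works internally. One common way to speed up loading a large weight file is to read it in parallel chunks and hand each chunk to the consumer (for example, a copy into GPU memory) while later chunks are still being read. The sketch below illustrates that general idea using only Python's standard library. It is an assumption about the kind of optimization involved, not Model Streamer's actual implementation, and `stream_file`, `read_range`, `chunk_size`, and `workers` are hypothetical names.

```python
import os
from concurrent.futures import ThreadPoolExecutor
from typing import Iterator, Tuple


def read_range(path: str, offset: int, length: int) -> bytes:
    """Read `length` bytes starting at `offset`; each call opens its own file handle."""
    with open(path, "rb") as f:
        f.seek(offset)
        return f.read(length)


def stream_file(path: str, chunk_size: int = 1 << 20,
                workers: int = 8) -> Iterator[Tuple[int, bytes]]:
    """Hypothetical loader sketch: yield (offset, chunk) pairs in file order
    while worker threads keep reading later chunks concurrently."""
    size = os.path.getsize(path)
    offsets = range(0, size, chunk_size)
    with ThreadPoolExecutor(max_workers=workers) as pool:
        # Submit every byte range up front so the threads read in parallel.
        futures = [pool.submit(read_range, path, off, min(chunk_size, size - off))
                   for off in offsets]
        # Hand chunks to the consumer in order as soon as each one is ready.
        for off, fut in zip(offsets, futures):
            yield off, fut.result()
```

For simplicity this sketch submits every read at once; a production loader would cap the number of in-flight chunks to bound memory use, and would typically copy each chunk to its destination as it arrives rather than joining everything in memory.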

Key Features and Benefits

- Much Faster Loading: In Run AI's benchmarks, models load roughly six to nearly eight times faster than with conventional loaders.

- Wide Compatibility: Works with local storage, network file systems (NFS), and cloud object storage such as Amazon S3, which simplifies integration.

- No Format Conversion Needed: Reads models in their existing format, such as Safetensors files from Hugging Face, so they can be loaded into popular inference engines without a separate conversion step.
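Loading without conversion is practical for formats whose layout tells a reader exactly where each tensor lives. Assuming the model is stored as Safetensors, the file starts with an 8-byte little-endian header length, followed by a JSON header mapping each tensor name to its dtype, shape, and `data_offsets` (relative to the data section after the header). The minimal sketch below follows that public layout; it is not Model Streamer code, and `parse_safetensors_header`, `build_example`, and the tensor names `weight` and `bias` are hypothetical.

```python
import json
import struct
from typing import Dict, List, Tuple


def parse_safetensors_header(blob: bytes) -> Dict[str, Tuple[str, List[int], int, int]]:
    """Map tensor name -> (dtype, shape, absolute start, absolute end) byte offsets."""
    # The first 8 bytes hold the JSON header length as an unsigned little-endian u64.
    (header_len,) = struct.unpack("<Q", blob[:8])
    header = json.loads(blob[8:8 + header_len])
    header.pop("__metadata__", None)  # optional free-form metadata, not a tensor
    data_start = 8 + header_len  # data_offsets are relative to this position
    return {
        name: (info["dtype"], info["shape"],
               data_start + info["data_offsets"][0],
               data_start + info["data_offsets"][1])
        for name, info in header.items()
    }


def build_example() -> bytes:
    """Build a tiny in-memory file with two float32 tensors (hypothetical names)."""
    data = struct.pack("<3f", 1.0, 2.0, 3.0)
    header = {
        "weight": {"dtype": "F32", "shape": [2], "data_offsets": [0, 8]},
        "bias": {"dtype": "F32", "shape": [1], "data_offsets": [8, 12]},
    }
    header_bytes = json.dumps(header).encode("utf-8")
    return struct.pack("<Q", len(header_bytes)) + header_bytes + data
```

Because every tensor's byte range is known from the header alone, a loader can fetch those ranges directly, and in parallel, from a local disk or object storage without first rewriting the file into another format.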

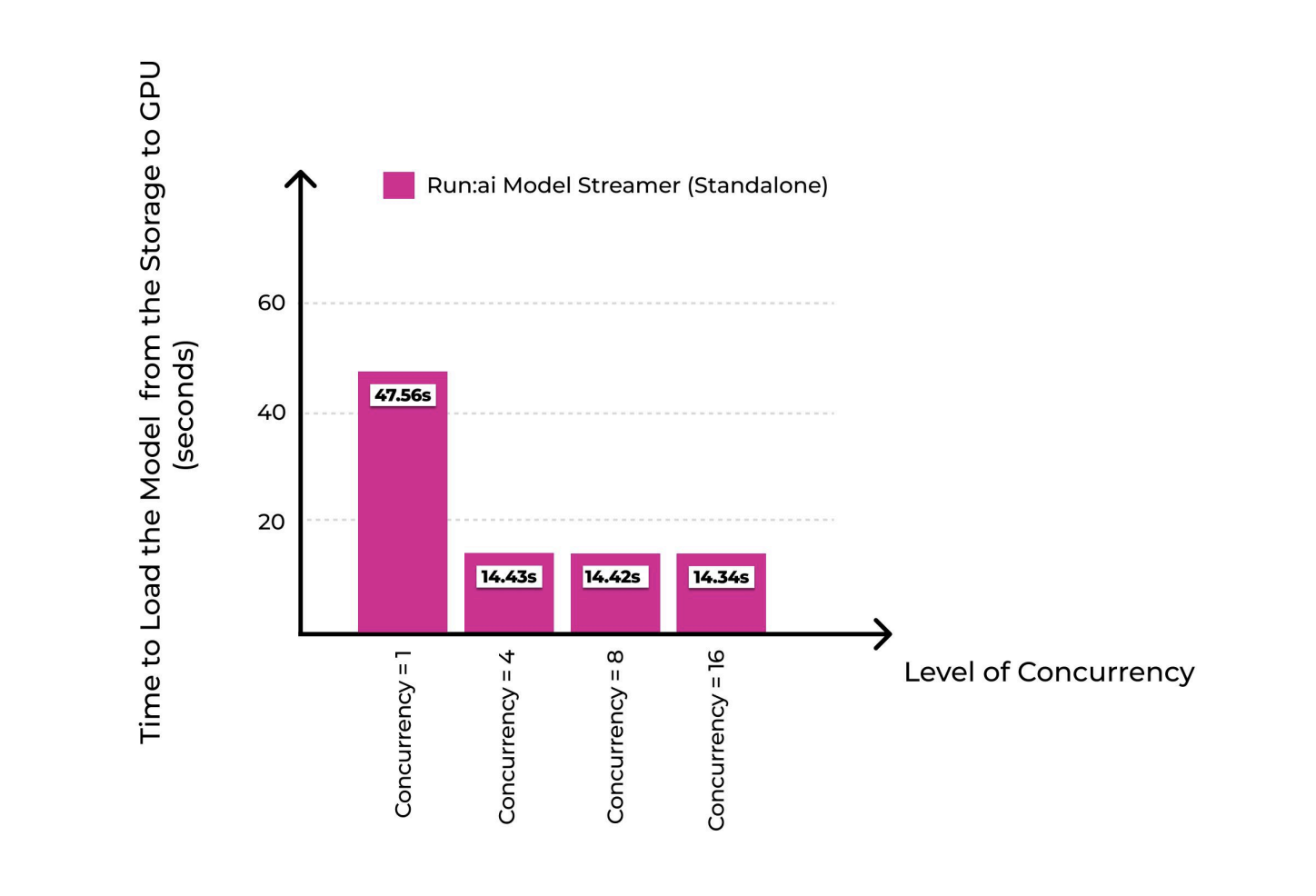

Real-World Performance Improvements

Run AI’s benchmarks show large speed gains. Loading a model from Amazon S3 drops from 37.36 seconds to 4.88 seconds, and loading from an SSD drops from 47 seconds to 7.53 seconds. Faster loading shortens the time it takes to bring new inference servers online, which matters for applications that need fast responses, such as real-time recommendations and critical diagnostics in healthcare.
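To put the reported numbers in perspective, the short calculation below turns each pair of load times into a speedup factor and the seconds saved per load. It uses only the figures quoted above; the `speedup` helper is an illustrative name.

```python
# Load times quoted in Run AI's benchmarks, in seconds: (conventional loader, Model Streamer).
benchmarks = {
    "Amazon S3": (37.36, 4.88),
    "SSD": (47.0, 7.53),
}


def speedup(baseline: float, streamer: float) -> float:
    """How many times faster the streamer is than the baseline loader."""
    return baseline / streamer


for storage, (baseline, streamer) in benchmarks.items():
    print(f"{storage}: {speedup(baseline, streamer):.2f}x faster, "
          f"{baseline - streamer:.2f} s saved")
# Amazon S3: 7.66x faster, 32.48 s saved
# SSD: 6.24x faster, 39.47 s saved
```

The savings apply every time a model is loaded, so they compound when a service scales out to many replicas or restarts frequently.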

Why This Matters

By removing the loading-speed bottleneck, Run AI: Model Streamer improves workflow efficiency and system reliability. With fast load times and direct support for common storage backends, it lets data scientists focus on building models rather than troubleshooting integration issues. By open-sourcing the tool, Run AI also positions itself as a leader in accessible, efficient AI infrastructure.

Learn More

For details, see the Technical Report and the project's GitHub page.

Enhance Your Business with AI

Stay competitive by adopting AI tools such as Run AI: Model Streamer. Here’s how to get started:

- Identify Automation Opportunities: Discover areas in customer interactions that can benefit from AI.

- Define KPIs: Set key performance indicators so the impact of your AI initiatives can be measured.

- Select an AI Solution: Choose tools tailored to your specific needs.

- Gradual Implementation: Start with a pilot project, analyze results, and expand as needed.
