Understanding Generative AI Models

Generative artificial intelligence (AI) models create realistic, high-quality data such as images, audio, and video. They learn from large datasets to produce synthetic content that closely resembles the original samples. One popular type is the diffusion model, which generates images and videos by learning to reverse a gradual noising process: it starts from random noise and removes that noise step by step until a sample emerges.

Challenges with Diffusion Models

However, diffusion models often need many sampling steps to produce a result, and each step requires a full pass through the network, so generation costs considerable compute and time. This is a significant drawback when outputs are needed quickly or when many samples must be generated at once.

Continuous-Time Models

Consistency models, a family of diffusion-based models built for fast sampling, are usually trained on discretized timesteps, which introduces discretization errors and extra hyperparameters. Continuous-time formulations avoid these problems by eliminating time discretization. Yet they have not gained much traction, because their training tends to be unstable, which makes them hard to scale and to use with complicated datasets.
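To make the effect of time discretization concrete, here is a minimal Python sketch on a toy problem. It is not a diffusion sampler: the ODE dx/dt = -x and the helper name `euler_solve` are stand-ins chosen for illustration, assuming only that a sampler follows some trajectory in a fixed number of discrete steps. The gap to the exact solution shrinks as the step count grows, which is the kind of error a continuous-time formulation aims to avoid.

```python
import math


def euler_solve(n_steps, x0=1.0, t_end=1.0):
    """Integrate the toy ODE dx/dt = -x from t=0 to t_end with Euler steps."""
    dt = t_end / n_steps
    x = x0
    for _ in range(n_steps):
        x += dt * (-x)  # one discrete step along the trajectory
    return x


exact = math.exp(-1.0)  # closed-form solution at t = 1 for x0 = 1
errors = {n: abs(euler_solve(n) - exact) for n in (2, 8, 32, 128)}
for n, err in errors.items():
    print(f"{n:4d} steps: abs error = {err:.5f}")
```

Fewer steps are cheaper but less accurate, which is the trade-off the rest of the article revolves around.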

Improving Efficiency in Diffusion Models

Researchers have introduced methods to make diffusion models more efficient, such as direct distillation and adversarial distillation. These approaches can speed up sampling and improve quality, but they carry costs of their own: high resource demands and added training complexity.

Introducing TrigFlow

A research team from OpenAI has developed TrigFlow, a framework aimed at enhancing continuous-time consistency models. TrigFlow addresses training instability and improves computational efficiency.
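The article does not spell out TrigFlow's formulation, so treat the following as an assumption based on the paper as understood here: a noisy sample is built as x_t = cos(t) · x_0 + sin(t) · z for t between 0 and π/2, where the noise z has the same standard deviation as the data. The names `trigflow_interpolate` and `SIGMA_D`, and the value 0.5, are illustrative choices, and the Gaussian "data" is a toy stand-in. The sketch shows why this trigonometric form is convenient: the spread of x_t stays roughly constant for every t.

```python
import math
import random
import statistics

SIGMA_D = 0.5  # assumed data standard deviation (illustrative value)


def trigflow_interpolate(x0, z, t):
    """Return x_t = cos(t) * x0 + sin(t) * z elementwise, for t in [0, pi/2]."""
    c, s = math.cos(t), math.sin(t)
    return [c * a + s * b for a, b in zip(x0, z)]


rng = random.Random(0)
n = 20000
x0 = [rng.gauss(0.0, SIGMA_D) for _ in range(n)]  # toy stand-in for data
z = [rng.gauss(0.0, SIGMA_D) for _ in range(n)]   # noise with the same std

for t in (0.0, math.pi / 4, math.pi / 2):
    x_t = trigflow_interpolate(x0, z, t)
    print(f"t = {t:.3f}  std(x_t) = {statistics.pstdev(x_t):.3f}")
```

At t = 0 the function returns the data unchanged, and at t = π/2 it returns (numerically almost exactly) pure noise. Because cos²(t) + sin²(t) = 1, independent data and noise of equal variance keep that variance at every point in between.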

Key Features of TrigFlow

- Stability: Ensures consistent training for continuous-time models.

- Scalability: Scales to models with up to 1.5 billion parameters, making it viable for high-resolution data generation.

- Efficient Sampling: Achieves high-quality results in just two sampling steps, comparable to models requiring many more steps.

- Computational Efficiency: Reduces resource demands through adaptive weighting and simplified processes.
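The two-step sampling mentioned in the list above can be sketched in the style of multistep consistency sampling: map noise directly to a sample, add noise back to reach an intermediate time, then map to a sample once more. Everything specific here is an assumption for illustration: `toy_consistency_fn` is a hypothetical stand-in for a trained network (it only respects the boundary condition f(x, 0) = x), `TARGET` is a made-up data value, and the intermediate time 1.1 is an arbitrary choice. The trigonometric re-noising follows the TrigFlow interpolation as understood from the paper.

```python
import math
import random

SIGMA_D = 0.5  # assumed data standard deviation (illustrative value)
TARGET = 2.0   # hypothetical data value the toy model pulls samples toward


def toy_consistency_fn(x_t, t):
    """Hypothetical stand-in for a trained model; satisfies f(x, 0) = x."""
    c = math.cos(t)
    return [c * v + (1.0 - c) * TARGET for v in x_t]


def two_step_sample(f, n, t_mid, rng):
    """Draw n values using exactly two evaluations of the model f."""
    # Step 1: map pure noise at t = pi/2 straight to a sample estimate.
    z = [rng.gauss(0.0, SIGMA_D) for _ in range(n)]
    x = f(z, math.pi / 2)
    # Re-noise the estimate to the intermediate time t_mid.
    z2 = [rng.gauss(0.0, SIGMA_D) for _ in range(n)]
    c, s = math.cos(t_mid), math.sin(t_mid)
    x_mid = [c * a + s * b for a, b in zip(x, z2)]
    # Step 2: map the re-noised point back to a refined sample.
    return f(x_mid, t_mid)


samples = two_step_sample(toy_consistency_fn, 4, 1.1, random.Random(0))
print(samples)
```

The cost is two network evaluations per sample, in contrast to the many evaluations a standard diffusion sampler needs.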

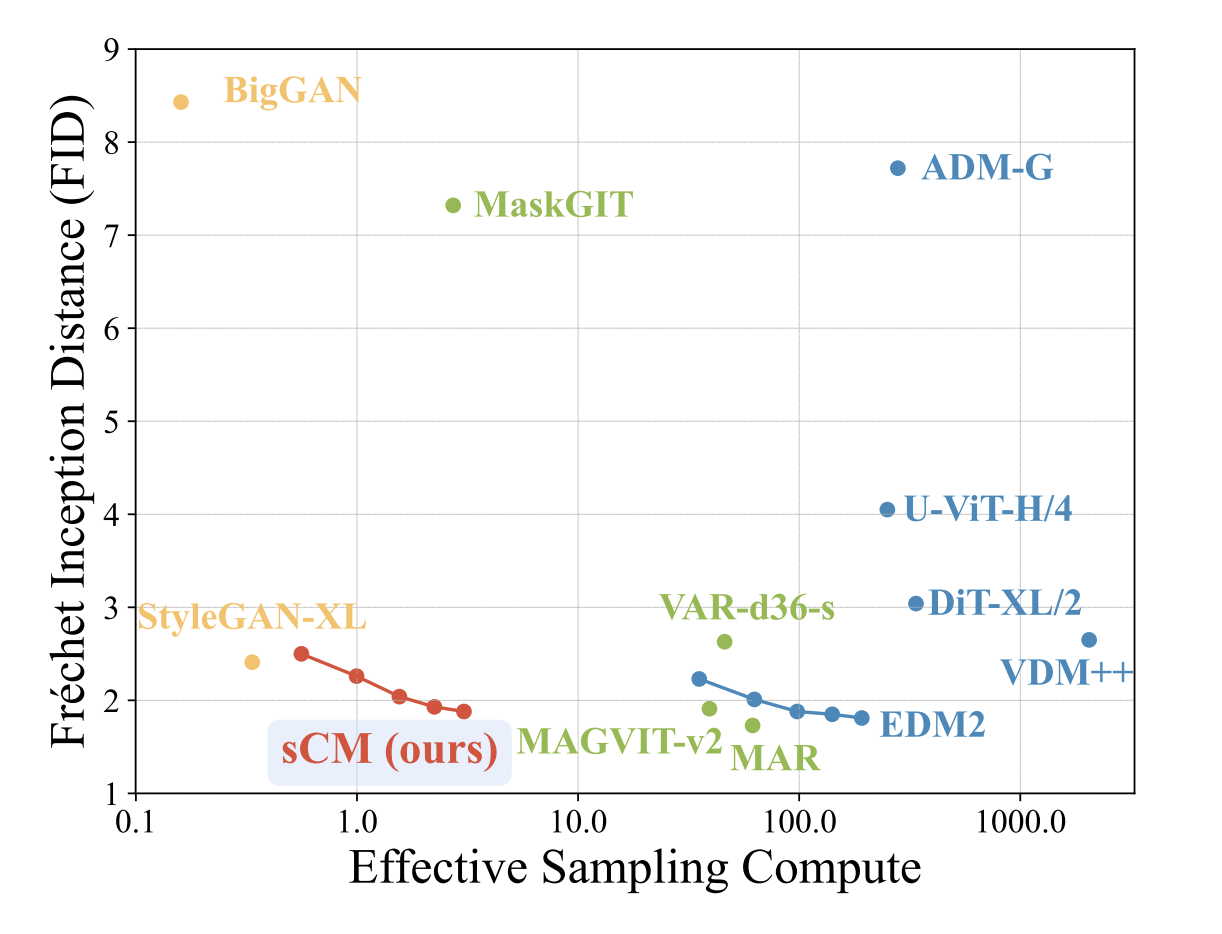

Measurable Results

The resulting model, named sCM, reaches a Fréchet Inception Distance (FID, where lower is better) of 2.06 on CIFAR-10 and 1.48 on ImageNet 64×64 using only two sampling steps, a large gain in sampling efficiency over models that need many more steps.
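For readers unfamiliar with FID, the sketch below shows the idea in its simplest form. FID fits a Gaussian to features of real images and another to features of generated images, then measures the Fréchet distance between the two. The real metric uses high-dimensional features from an Inception network and full covariance matrices; this example is a simplification that assumes one-dimensional features, where the distance reduces to (μ_r − μ_g)² + (σ_r − σ_g)². The function names and sample values are made up for illustration.

```python
import statistics


def gaussian_stats(samples):
    """Mean and population standard deviation of a list of floats."""
    return statistics.fmean(samples), statistics.pstdev(samples)


def frechet_distance_1d(real, generated):
    """Squared Fréchet distance between 1-D Gaussians fitted to each set.

    General form: ||mu_r - mu_g||^2 + Tr(S_r + S_g - 2 (S_r S_g)^(1/2)).
    In one dimension this is (mu_r - mu_g)^2 + (sd_r - sd_g)^2.
    """
    mu_r, sd_r = gaussian_stats(real)
    mu_g, sd_g = gaussian_stats(generated)
    return (mu_r - mu_g) ** 2 + (sd_r - sd_g) ** 2


real = [0.0, 1.0, 2.0, 3.0]       # made-up "real" feature values
shifted = [v + 3.0 for v in real]  # same spread, mean moved by 3

print(frechet_distance_1d(real, real))
print(frechet_distance_1d(real, shifted))
```

Identical feature sets give a distance of zero, and the distance grows as the generated statistics drift from the real ones, which is why lower FID scores indicate closer agreement with the real data.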

Conclusion

The research shows how TrigFlow advances generative model training by improving stability, scalability, and sampling efficiency. The framework offers few-step sampling that competes with existing diffusion models at a lower computational cost.
