Understanding Workflow Generation in Large Language Models

Large Language Models (LLMs) are powerful tools for solving complicated problems, including function calling, planning, and coding.

Key Features of LLMs:

- Breaking Down Problems: They can split a complex problem into smaller, manageable subtasks. The resulting structure of subtasks is known as a workflow.

- Improved Debugging: Workflows help in understanding processes better, making it easier to identify errors.

- Reducing Errors: By using workflows, LLMs can avoid common mistakes.
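
To make the idea of a workflow concrete, here is a minimal sketch that represents one as a directed acyclic graph of subtasks and derives a valid execution order. The task ("bake a cake"), the subtask names, and the `execution_order` helper are all hypothetical and chosen only for illustration; they do not come from the benchmark.

```python
from graphlib import TopologicalSorter  # standard library, Python 3.9+

# Hypothetical workflow: each key is a subtask, each value is the set of
# subtasks that must finish before it can start.
workflow = {
    "gather ingredients": set(),
    "preheat oven": set(),
    "mix batter": {"gather ingredients"},
    "bake": {"mix batter", "preheat oven"},
    "serve": {"bake"},
}


def execution_order(graph):
    """Return one valid order in which the subtasks can be executed."""
    return list(TopologicalSorter(graph).static_order())


order = execution_order(workflow)
print(order)
```

Note that "gather ingredients" and "preheat oven" have no dependency on each other, so they could run in either order or in parallel. A purely sequential list cannot express that, which is why graph-structured workflows are harder to generate and to evaluate.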

Current Challenges:

- Narrow Focus: Most evaluations only consider function calls and ignore real-world complexities.

- Limited Structure: Many evaluations focus on simple sequences rather than the complex, interconnected tasks found in real scenarios.

- Reliance on Specific Models: Current evaluations lean heavily on models like GPT-3.5/4, which limits how broadly results can be assessed.

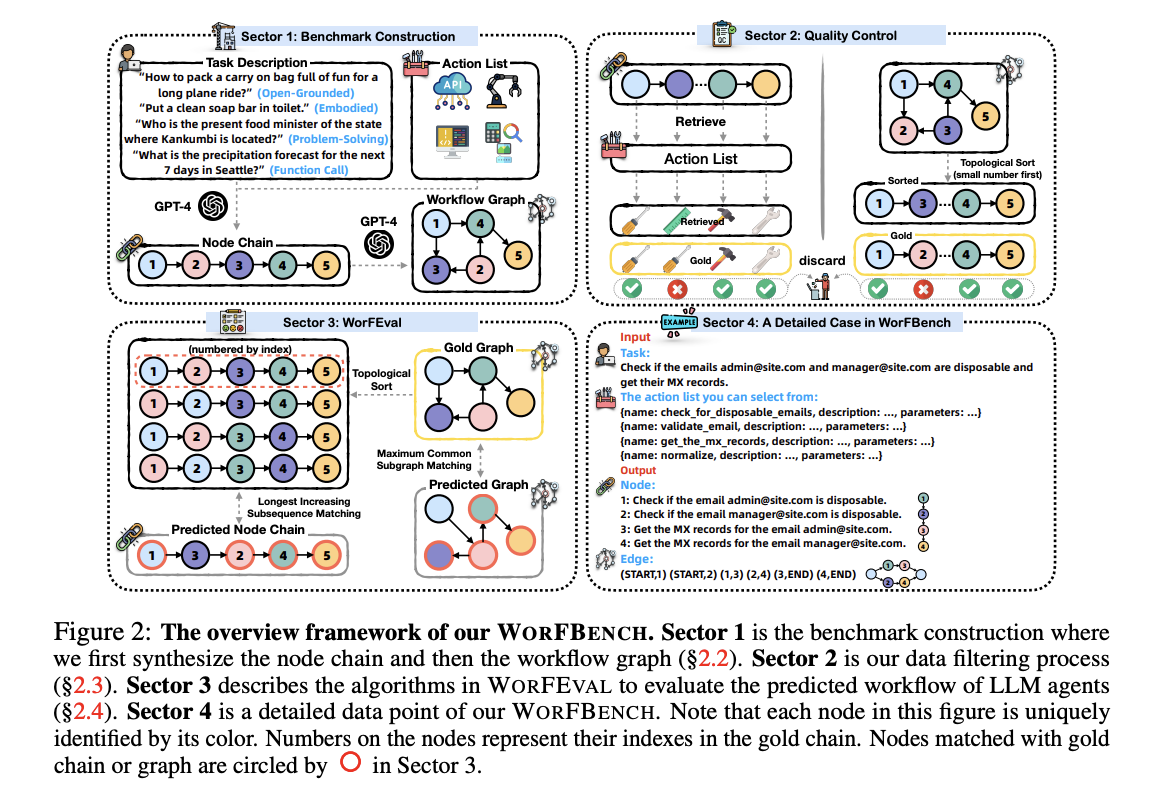

Introducing WORFBENCH

WORFBENCH is a new benchmark designed to evaluate how well LLMs can generate workflows. It improves on earlier benchmarks by:

- Using diverse scenarios and complex task structures.

- Employing rigorous data filtering and human evaluations.

WORFEVAL Evaluation Protocol:

This protocol uses subsequence and subgraph matching algorithms to score the workflows that LLMs generate, covering both linear (sequence) and graph structures. Tests show notable performance differences between the two, emphasizing the need for improved graph planning capabilities.
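
One way to score a predicted linear workflow against a reference is to find their longest common subsequence and turn it into precision, recall, and F1. The sketch below does this with exact string matching between subtask names. It is an assumption-laden illustration, not the benchmark's actual implementation: the function names `lcs_length` and `chain_f1` are hypothetical, and the real protocol may match nodes differently (for example by semantic similarity).

```python
def lcs_length(pred, gold):
    """Length of the longest common subsequence of two subtask lists."""
    # dp[i][j] holds the LCS length of pred[:i] and gold[:j].
    dp = [[0] * (len(gold) + 1) for _ in range(len(pred) + 1)]
    for i, p in enumerate(pred, start=1):
        for j, g in enumerate(gold, start=1):
            if p == g:
                dp[i][j] = dp[i - 1][j - 1] + 1
            else:
                dp[i][j] = max(dp[i - 1][j], dp[i][j - 1])
    return dp[len(pred)][len(gold)]


def chain_f1(pred, gold):
    """F1 score of a predicted subtask sequence against a reference one."""
    matched = lcs_length(pred, gold)
    if matched == 0:
        return 0.0
    precision = matched / len(pred)  # share of predicted steps in order
    recall = matched / len(gold)     # share of reference steps recovered
    return 2 * precision * recall / (precision + recall)


# Hypothetical example: the prediction swaps the first two steps.
gold = ["gather ingredients", "mix batter", "bake", "serve"]
pred = ["mix batter", "gather ingredients", "bake", "serve"]
score = chain_f1(pred, gold)
print(score)  # 0.75
```

Here three of the four steps appear in a consistent order, so precision and recall are both 3/4. A graph-level score would apply the same idea to matched subgraphs instead of subsequences.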

Performance Insights

Analysis indicates significant gaps in how well LLMs handle linear versus graph-based tasks:

- GLM-4-9B showed a 20.05% performance gap.

- Even the best-performing open-source model, Llama-3.1-70B, had a 15.01% difference in scores.

- GPT-4 achieved only 67.32% in sequence tasks and 52.47% in graph tasks, highlighting the challenges of more complex workflows.
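
The GPT-4 figures above imply a gap of their own. The short calculation below assumes a gap is simply the sequence score minus the graph score; the source does not state how the gaps reported for the other models were computed, so treat this as an illustration of the arithmetic only.

```python
# Scores quoted in the text above, in percent.
gpt4_sequence = 67.32
gpt4_graph = 52.47

# Assumed definition of the gap: sequence score minus graph score.
gap = round(gpt4_sequence - gpt4_graph, 2)
print(gap)  # 14.85
```

Under that assumption, GPT-4's gap of 14.85 is close to the 15.01 reported for Llama-3.1-70B.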

Common Issues in Low-Performance Samples:

- Insufficient task details.

- Unclear subtask definitions.

- Incorrect workflow structures.

- Non-compliance with expected formats.

Conclusion and Future Directions

WORFBENCH offers a framework for better evaluating how LLMs generate workflows. The findings reveal significant gaps between sequence and graph planning that future models will need to close.

Although the benchmark applies quality controls to its data, limitations remain. Some queries may still fall short of quality standards, and the current approach assumes that every node must be traversed to complete a task.
