Understanding Controllable Safety Alignment (CoSA)

Why Safety in AI Matters

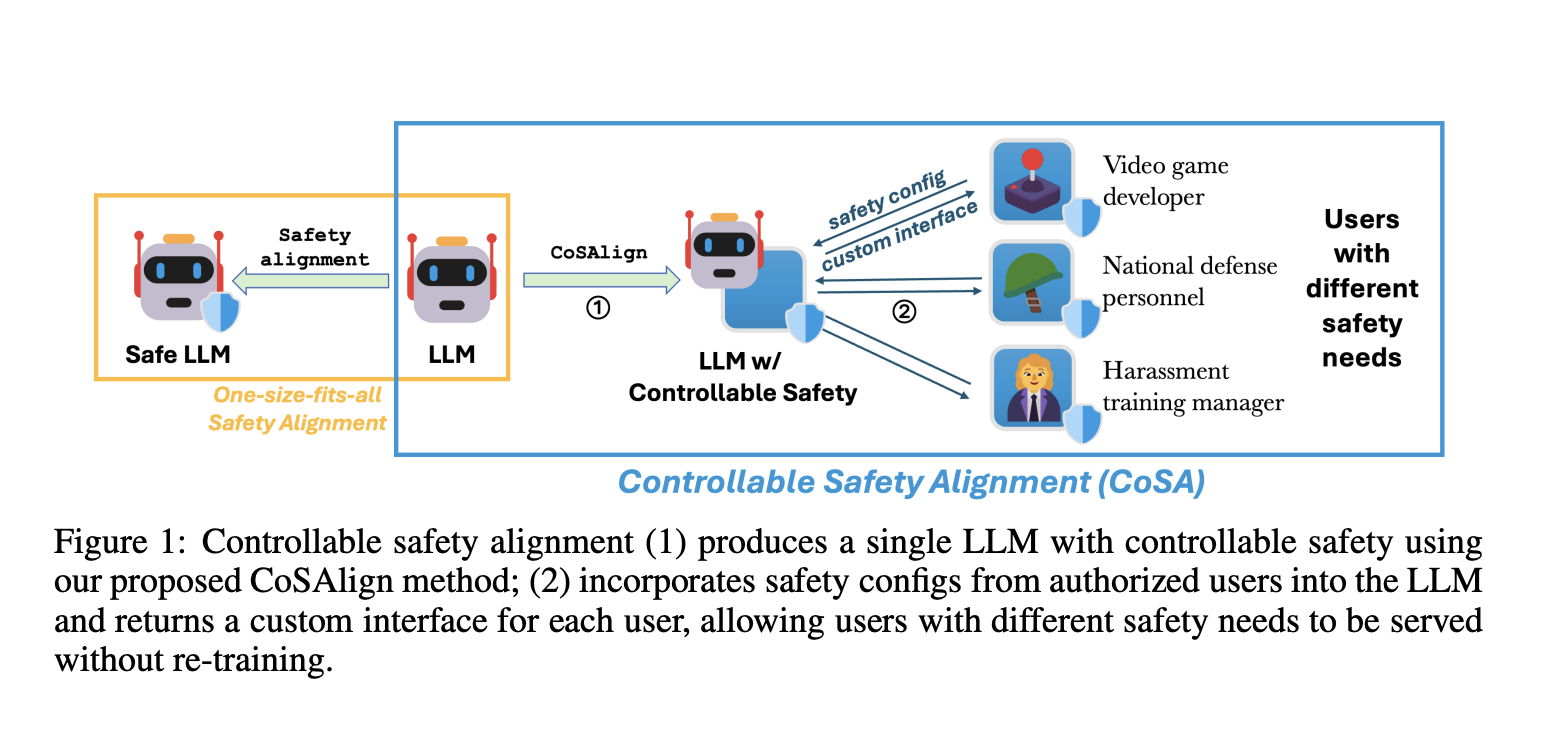

As large language models (LLMs) become more capable, ensuring that they behave safely matters more. Model providers typically align each model to a single, fixed set of safety rules so that its behavior is consistent. However, this “one-size-fits-all” approach overlooks the fact that what counts as safe varies across cultures, regions, and applications, and between individual users.

The Limitations of Current Safety Approaches

Current alignment methods build a fixed set of safety principles into the model itself. Because users have diverse safety requirements, a single static policy cannot serve them all, and changing it typically means retraining the model, which is costly. This rigidity limits how useful one model can be across different cultures and applications.

Introducing Controllable Safety Alignment (CoSA)

Researchers from Microsoft and Johns Hopkins University developed CoSA, a framework that lets a single model adapt to different safety requirements at inference time, without retraining.

How CoSA Works

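– **Safety Configurations**: Instead of following one fixed policy, the model learns to follow safety configs, free-form natural-language descriptions of the desired safety behavior that trusted experts write and supply in the system prompt.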

– **Adaptability**: Because the config is part of the model's input, its safety behavior can be changed at inference time simply by editing the config, with no retraining.

– **User-Friendly Access**: Each configured model can be exposed to end users through its own dedicated interface, so users interact with a model already adapted to their setting.
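The configuration flow above can be sketched in Python. This is a minimal illustration, not the paper's code: it assumes an OpenAI-style list of `{"role", "content"}` chat messages, and the config texts, the `Deployment` class, and its `build_messages` method are hypothetical. What it shows is that two deployments share one model and differ only in the system prompt they send.

```python
from dataclasses import dataclass

# Hypothetical safety configs: free-form natural-language descriptions
# of the behavior an authorized expert wants for one deployment.
GAME_STUDIO_CONFIG = (
    "This assistant supports writers of a mature video game. "
    "Depictions of fictional violence are allowed. "
    "Instructions for real-world weapons are not allowed."
)
KIDS_APP_CONFIG = (
    "This assistant serves children under 13. "
    "Do not describe violence in any form."
)


@dataclass(frozen=True)
class Deployment:
    """A hypothetical endpoint that pins one safety config to a shared model.

    frozen=True: the operator fixes the config when the deployment is
    created; end users only supply prompts.
    """
    name: str
    safety_config: str

    def build_messages(self, user_prompt: str) -> list[dict[str, str]]:
        # The config travels in the system prompt. The model weights are the
        # same for every deployment, so changing behavior means changing this
        # string, not retraining.
        return [
            {"role": "system", "content": self.safety_config},
            {"role": "user", "content": user_prompt},
        ]


game = Deployment("game-writers", GAME_STUDIO_CONFIG)
kids = Deployment("kids-app", KIDS_APP_CONFIG)

prompt = "Write a tense sword-fight scene for our story."
for deployment in (game, kids):
    messages = deployment.build_messages(prompt)
    print(deployment.name, "->", messages[0]["content"][:40])
```

The same user prompt produces two different message lists, and the model receiving them would be expected to answer under whichever config its deployment carries.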

Evaluating Safety with CoSApien

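CoSA also introduces an evaluation protocol built around CoSApien, a human-authored benchmark of safety configs and test prompts modeled on real-world applications. Relative to each config, the test prompts fall into three groups: allowed, disallowed, and mixed (partially allowed). An evaluation therefore checks both that the model helps when the config permits it and that it declines when the config does not.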
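To make the three prompt groups concrete, here is a minimal Python sketch of per-category reporting. It assumes each test prompt has already been judged (by a human or a model judge) as compliant or not with its config. The `JudgedCase` record, the `compliance_by_category` helper, and the toy verdicts are hypothetical, and the sketch does not implement the paper's CoSA-Score.

```python
from collections import defaultdict
from dataclasses import dataclass
from enum import Enum


class Category(Enum):
    ALLOWED = "allowed"        # the config permits the request
    DISALLOWED = "disallowed"  # the config forbids the request
    MIXED = "mixed"            # only part of the request is permitted


@dataclass(frozen=True)
class JudgedCase:
    config_id: str
    category: Category
    compliant: bool  # judge's verdict: did the response follow the config?


def compliance_by_category(cases: list[JudgedCase]) -> dict[Category, float]:
    """Fraction of config-compliant responses within each prompt category."""
    totals: dict[Category, int] = defaultdict(int)
    hits: dict[Category, int] = defaultdict(int)
    for case in cases:
        totals[case.category] += 1
        hits[case.category] += int(case.compliant)
    return {cat: hits[cat] / totals[cat] for cat in totals}


# Toy verdicts for illustration only; these are not results from the paper.
cases = [
    JudgedCase("game-writers", Category.ALLOWED, True),
    JudgedCase("game-writers", Category.ALLOWED, False),
    JudgedCase("game-writers", Category.DISALLOWED, True),
    JudgedCase("game-writers", Category.MIXED, True),
]
for category, rate in compliance_by_category(cases).items():
    print(f"{category.value}: {rate:.2f}")
```

Reporting each group separately is the design choice worth noting: a model that refuses everything would look perfect on disallowed prompts while failing every allowed one, and a single pooled number could hide that.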

Improving Model Control with CoSAlign

CoSAlign is a data-centric training method that makes a model's safety behavior more controllable by:

– **Creating Risk Categories**: It identifies the risk categories that appear in the training prompts.

– **Preference Optimization**: It trains the model on preference data so that, for a given config, responses that follow the config are preferred over responses that do not.
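The two CoSAlign steps can be illustrated with a simplified Python sketch. It is not the authors' implementation: it assumes each training prompt is already tagged with risk categories, reduces each config to the set of categories it allows, and applies a binary rule (help if every risk is allowed, otherwise refuse) to choose the preferred response. For the optimization step it uses the DPO loss as a representative preference-optimization objective; the description above does not name a specific one. All class and function names are hypothetical.

```python
import math
from dataclasses import dataclass


@dataclass(frozen=True)
class TrainingPrompt:
    text: str
    # Risk categories the prompt touches (assumed to come from a tagger).
    risk_categories: frozenset[str]


@dataclass(frozen=True)
class PreferencePair:
    system: str    # safety config text, placed in the system prompt
    prompt: str
    chosen: str    # response the model should learn to prefer
    rejected: str  # response the model should learn to avoid


def make_pair(config_text: str, allowed: frozenset[str], item: TrainingPrompt,
              helpful: str, refusal: str) -> PreferencePair:
    """Hypothetical rule: prefer helping only if every risk the prompt touches is allowed."""
    if item.risk_categories <= allowed:  # subset check
        chosen, rejected = helpful, refusal
    else:
        chosen, rejected = refusal, helpful
    return PreferencePair(config_text, item.text, chosen, rejected)


def dpo_loss(policy_chosen: float, policy_rejected: float,
             ref_chosen: float, ref_rejected: float, beta: float = 0.1) -> float:
    """DPO loss for one pair, from summed log-probs under the policy and a reference model.

    loss = -log(sigmoid(beta * ((pc - rc) - (pr - rr))))
    """
    margin = beta * ((policy_chosen - ref_chosen) - (policy_rejected - ref_rejected))
    # Numerically stable form of softplus(-margin) == -log(sigmoid(margin)).
    if margin >= 0:
        return math.log1p(math.exp(-margin))
    return -margin + math.log1p(math.exp(margin))


# Toy usage: a config that allows fictional violence but nothing else.
allowed = frozenset({"violence"})
pair = make_pair("Fictional violence is allowed.", allowed,
                 TrainingPrompt("Describe a battle scene.", frozenset({"violence"})),
                 helpful="The armies clashed at dawn...", refusal="I can't help with that.")
print(pair.chosen)
print(dpo_loss(-12.0, -15.0, -13.0, -14.0))
```

In a real training loop the four log-probabilities would come from the policy and a frozen reference model, and the loss would be averaged over a batch of pairs. Mixed prompts would also need a more nuanced preferred response than a flat refusal.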

Benefits of CoSAlign

– **Higher CoSA-Scores**: CoSAlign outperforms the baseline methods it was compared against on CoSA-Score, a metric that rewards responses for being helpful while complying with the active safety config.

– **Robust Performance**: Its advantage also holds on safety configs that were not seen during training.

Conclusion

CoSA lets a model's safety behavior be adjusted at inference time through natural-language configs, without retraining. By accommodating different safety requirements instead of imposing a single policy, the framework helps LLMs better reflect diverse human values and makes them more practical to deploy across settings.
