Vision-Language-Action (VLA) Models for Robotics

VLA models combine large language models with vision encoders and are fine-tuned on robot datasets. This lets robots follow new instructions and recognize unfamiliar objects. However, most robot datasets are collected through human teleoperation, which makes them hard to scale. Internet video, by contrast, offers far more examples of human actions and physical interactions, so learning from it could scale much further.

Challenges with Internet Videos

Learning from online videos is challenging because:

- Most videos lack clear labels for actions.

- Video contexts often differ from the environments where robots operate.

Advancements in Vision-Language Models (VLMs)

VLMs trained on large datasets of text, images, and videos can understand and generate both text and multimodal data. Adding auxiliary objectives during VLA training has further improved performance. Yet these methods still depend on labeled action data, which limits how far general VLAs can scale.

Training Robot Policies from Videos

Videos carry rich information about dynamics and behavior that robots can learn from. Some recent studies use generative models trained on human videos to improve downstream robotic tasks. However, current methods often need specific human-robot data or are too task-specific.

LAPA: A New Approach

Researchers from various institutions introduced Latent Action Pretraining for General Action models (LAPA), an unsupervised method that pretrains on internet-scale videos without labeled robot actions.

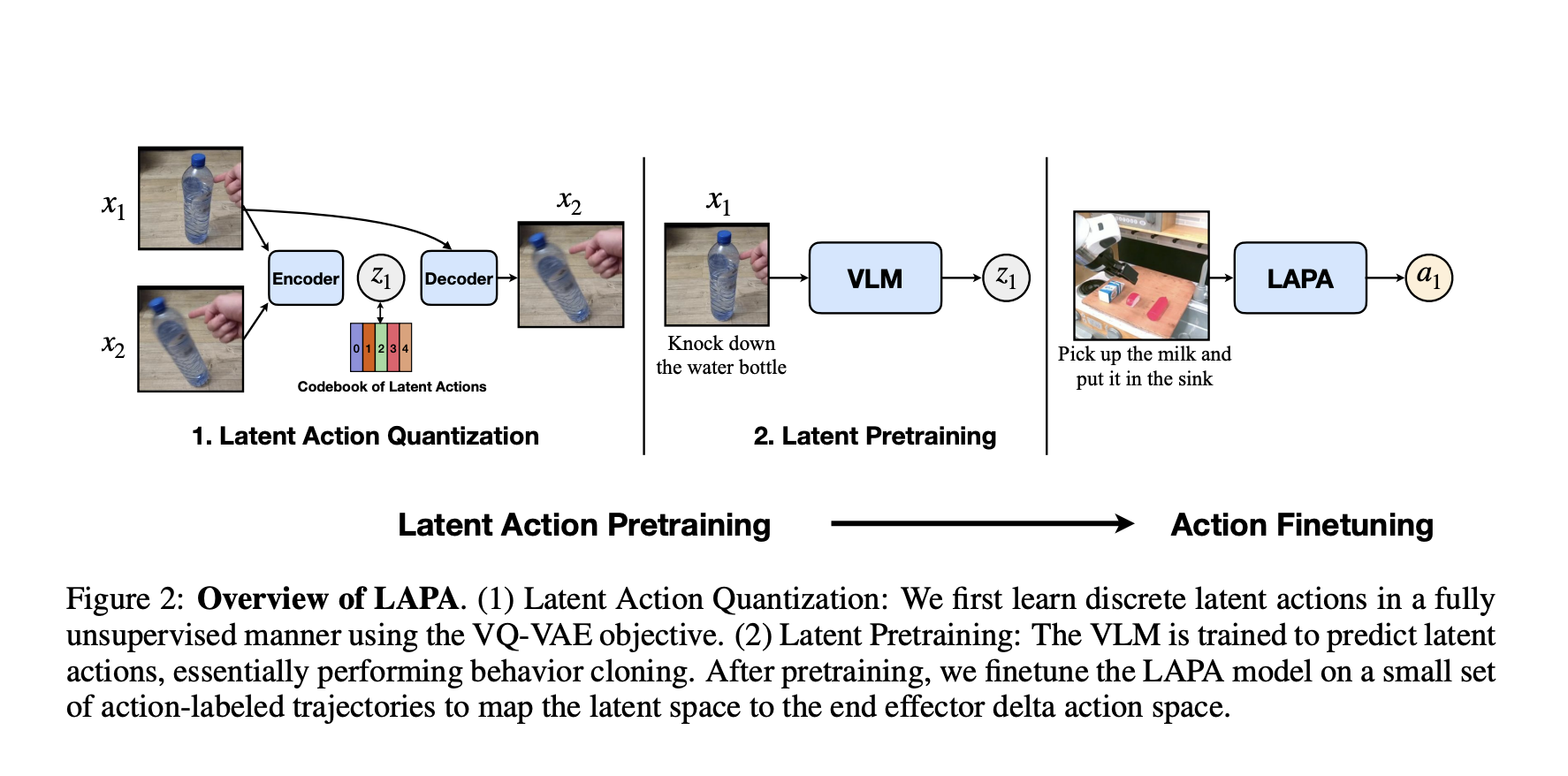

How LAPA Works

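LAPA has two stages:

- **First Stage**: A VQ-VAE-based objective learns quantized (discrete) latent actions that describe the change between video frames.

- **Second Stage**: A Vision-Language Model is pretrained to predict these latent actions from video observations and task descriptions, then fine-tuned on a small robot dataset to map latent actions to real robot actions.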
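
The quantization idea behind the first stage can be illustrated with a toy sketch. This is not LAPA's implementation: the codebook is hand-written rather than learned, the "frame embeddings" are plain 2-D tuples, and the helper names (`nearest_code`, `quantize_transition`, `label_video`) are hypothetical. It only shows how a continuous change between two frames can be snapped to the nearest entry of a codebook, which yields a discrete latent action token without any action labels.

```python
import math

# Hypothetical hand-written codebook; in a VQ-VAE the entries are learned.
# Index 0: right, 1: left, 2: up, 3: down.
CODEBOOK = [(1.0, 0.0), (-1.0, 0.0), (0.0, 1.0), (0.0, -1.0)]


def nearest_code(vector, codebook):
    """Return the index of the codebook entry closest to `vector` (squared L2)."""
    best_index, best_dist = 0, math.inf
    for index, code in enumerate(codebook):
        dist = sum((v - c) ** 2 for v, c in zip(vector, code))
        if dist < best_dist:
            best_index, best_dist = index, dist
    return best_index


def quantize_transition(frame_t, frame_next, codebook):
    """Map a pair of frame embeddings to a discrete latent action token.

    Assumption: the continuous "action" is approximated by the difference
    between the two embeddings; a real model would use a learned encoder.
    """
    delta = [b - a for a, b in zip(frame_t, frame_next)]
    return nearest_code(delta, codebook)


def label_video(frame_embeddings, codebook=CODEBOOK):
    """Assign one latent action token to every consecutive frame pair."""
    return [
        quantize_transition(a, b, codebook)
        for a, b in zip(frame_embeddings, frame_embeddings[1:])
    ]


if __name__ == "__main__":
    video = [(0.0, 0.0), (0.9, 0.1), (0.8, 1.2), (-0.3, 1.1)]
    print(label_video(video))
```

Token sequences of this kind are what the second stage would use as prediction targets, in place of labeled robot actions.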

Key Benefits of LAPA

LAPA outperforms previous models such as OpenVLA, achieving:

- Better pre-training efficiency, using only 272 H100-hours vs. 21,500 A100-hours.

- Improved performance in real-world tasks requiring language conditioning and generalization.
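
To put the compute figures above in perspective, the short calculation below divides the two GPU-hour counts. It is only a raw ratio: an H100-hour does more work than an A100-hour, so the hardware-normalized gap is smaller than this number, and no conversion factor between the two GPUs is assumed here. The function name is illustrative.

```python
# GPU-hour figures as quoted in the list above.
LAPA_H100_HOURS = 272
OPENVLA_A100_HOURS = 21_500


def raw_gpu_hour_ratio(baseline_hours, new_hours):
    """Ratio of GPU-hours, ignoring any difference in GPU type."""
    return baseline_hours / new_hours


ratio = raw_gpu_hour_ratio(OPENVLA_A100_HOURS, LAPA_H100_HOURS)
print(f"Raw GPU-hour ratio: {ratio:.1f}x")
```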

Conclusion and Future Opportunities

LAPA is a scalable pre-training method for VLAs that transfers well to a range of downstream robot tasks. It still shows limitations on fine-grained motion tasks, but it improves robotic performance without requiring labeled action data for pre-training.

Future Directions

Potential areas for improvement include:

- Expanding the latent action generation space to better handle fine-grained motion tasks.

- Implementing hierarchical architectures to reduce latency during real-time inference.
