Revolutionizing AI Efficiency with Self-Data Distilled Fine-Tuning

Introduction to Large Language Models

Large language models (LLMs) like GPT-4, Gemini, and Llama 3 have transformed natural language processing. However, training and serving these models is expensive because of their high compute and memory demands.

The Challenge of Pruning

Structured pruning makes LLMs more efficient by removing whole components, such as layers or attention heads, that contribute least to the output. The cost is often reduced accuracy, especially on complex reasoning tasks: removing components can disrupt how information flows through the model, and quality drops as a result.

Solutions for Improving LLM Efficiency

Several strategies exist to enhance the efficiency of LLMs:

– **Model Compression**: Pruning reduces model size and complexity, but it can also degrade performance.

– **Knowledge Distillation (KD)**: A smaller model learns from a larger one, but the additional training can lead to “catastrophic forgetting,” where the model loses capabilities it had learned before.

– **Regularization Techniques**: Methods like Elastic Weight Consolidation penalize changes to weights that mattered for earlier tasks in order to limit forgetting, though they bring challenges of their own.

Innovative Approach by Cerebras Systems

A team at Cerebras Systems introduced **self-data distilled fine-tuning**. This method uses the original, unpruned model to generate the dataset on which the pruned model is fine-tuned, so the training data stays close to what the original model already knew and forgetting is reduced. Key benefits include:

– **Increased Accuracy**: Up to an 8% improvement in average accuracy over standard supervised fine-tuning on the HuggingFace OpenLLM Leaderboard.

– **Scalability**: Works well across various datasets, and quality improves further as the dataset grows.
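The dataset-generation step described above can be sketched as follows. This is a minimal illustration, not the Cerebras implementation: `generate` stands in for the unpruned model, and the rewrite prompt and the `is_consistent` fallback check are assumptions made for this example.

```python
from typing import Callable, Dict, List


def build_self_distilled_dataset(
    examples: List[Dict[str, str]],
    generate: Callable[[str], str],
    is_consistent: Callable[[str, str], bool],
) -> List[Dict[str, str]]:
    """Replace each reference response with one written by the unpruned model.

    `generate` is a placeholder for the original (unpruned) model, and
    `is_consistent` is a hypothetical check that a rewrite still agrees
    with the reference answer.
    """
    distilled = []
    for ex in examples:
        # Assumed prompt template; the source does not specify one.
        prompt = (
            f"Instruction: {ex['prompt']}\n"
            f"Reference answer: {ex['response']}\n"
            "Rewrite the reference answer in your own words."
        )
        rewrite = generate(prompt)
        # Keep the original label when the rewrite drifts away from it.
        keep_rewrite = is_consistent(rewrite, ex["response"])
        target = rewrite if keep_rewrite else ex["response"]
        distilled.append({"prompt": ex["prompt"], "response": target})
    return distilled


# Toy stand-ins so the sketch runs without a real model.
def toy_generate(prompt: str) -> str:
    return "The answer is 4."


def toy_check(rewrite: str, reference: str) -> bool:
    return reference.strip() in rewrite


seed = [{"prompt": "What is 2 + 2?", "response": "4"}]
distilled_data = build_self_distilled_dataset(seed, toy_generate, toy_check)
```

The pruned model would then be fine-tuned on `distilled_data` instead of the original labels. The fallback to the reference answer is one possible way to avoid training on rewrites that changed the meaning.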

Methodology Highlights

The approach involves:

– Evaluating the importance of different layers in the model.

– Using fine-tuning strategies tailored for complex reasoning tasks.

– Comparing the effectiveness of various model pruning techniques.
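One way to estimate layer importance is sketched below. It assumes a metric the summary above does not name: the angular distance between the hidden state entering a block of consecutive layers and the hidden state leaving it, where a small distance suggests the block changes little and is a pruning candidate. The function names and the toy vectors are hypothetical.

```python
import math
from typing import List, Sequence


def angular_distance(a: Sequence[float], b: Sequence[float]) -> float:
    """Angle between two non-zero vectors, scaled to the range [0, 1]."""
    dot = sum(x * y for x, y in zip(a, b))
    norm = math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b))
    cosine = max(-1.0, min(1.0, dot / norm))  # clamp against rounding error
    return math.acos(cosine) / math.pi


def least_important_block(
    hidden_states: List[Sequence[float]], block_size: int
) -> int:
    """Return the start index of the block whose input and output differ least.

    hidden_states[i] is assumed to be the representation entering layer i,
    with the final entry being the output of the last layer.
    """
    scores = [
        angular_distance(hidden_states[i], hidden_states[i + block_size])
        for i in range(len(hidden_states) - block_size)
    ]
    return min(range(len(scores)), key=scores.__getitem__)


# Toy 2-dimensional hidden states for a 4-layer model (illustrative values only).
states = [[1.0, 0.0], [0.0, 1.0], [0.0, 1.0], [0.1, 1.0], [-1.0, 0.0]]
start = least_important_block(states, block_size=2)
```

In practice the hidden states would be averaged over a calibration set rather than taken from a single vector per layer; the toy values here only exercise the selection logic.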

Results and Findings

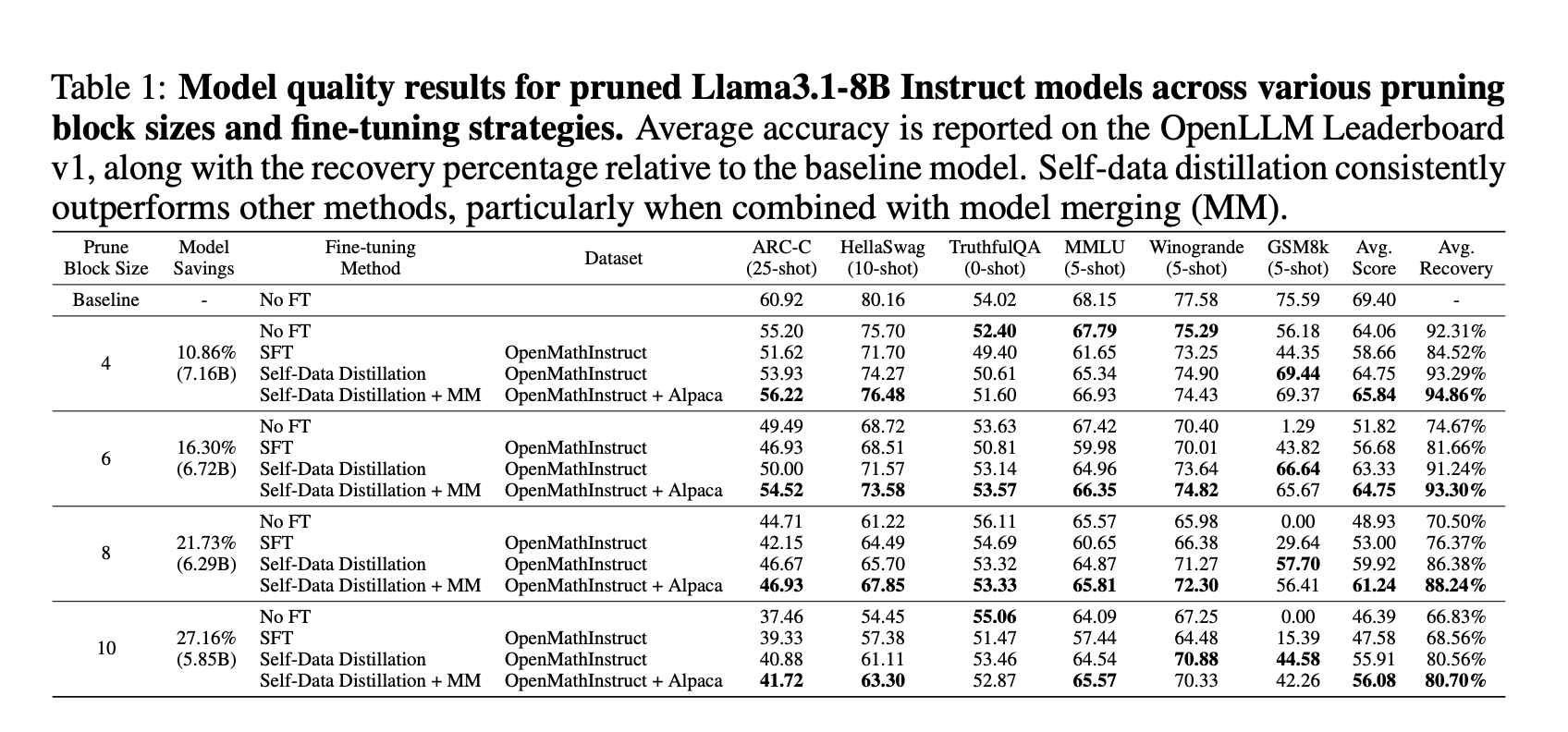

The team evaluated pruned Llama3.1-8B Instruct models under different fine-tuning strategies. Key outcomes:

– Pruned models without fine-tuning lost significant accuracy.

– Standard fine-tuning recovered some performance but still struggled on reasoning-heavy tasks.

– Self-data distilled fine-tuning performed best, achieving a recovery rate of 91.24% relative to the unpruned model.
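A recovery rate like the one reported above can be computed as in the sketch below, assuming recovery is defined as the pruned model's average benchmark score expressed as a percentage of the unpruned model's average. The scores in the example are made-up placeholders, not results from the study.

```python
from typing import Sequence


def recovery_rate(pruned_scores: Sequence[float], baseline_scores: Sequence[float]) -> float:
    """Average pruned-model score as a percentage of the average baseline score."""
    pruned_avg = sum(pruned_scores) / len(pruned_scores)
    baseline_avg = sum(baseline_scores) / len(baseline_scores)
    return 100.0 * pruned_avg / baseline_avg


# Placeholder per-task accuracies, chosen only to make the arithmetic easy to follow.
baseline = [80.0, 60.0]  # unpruned model, average 70.0
pruned = [70.0, 35.0]    # pruned and fine-tuned model, average 52.5
rate = recovery_rate(pruned, baseline)  # 100 * 52.5 / 70.0 = 75.0
```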

Conclusion and Future Prospects

Self-data distilled fine-tuning is an effective way to preserve model quality after pruning, and it outperforms standard fine-tuning. Future work aims to combine the technique with other compression methods and to extend it to multi-modal inputs.
