Understanding Local Rank and Information Compression in Deep Neural Networks

What is Local Rank?

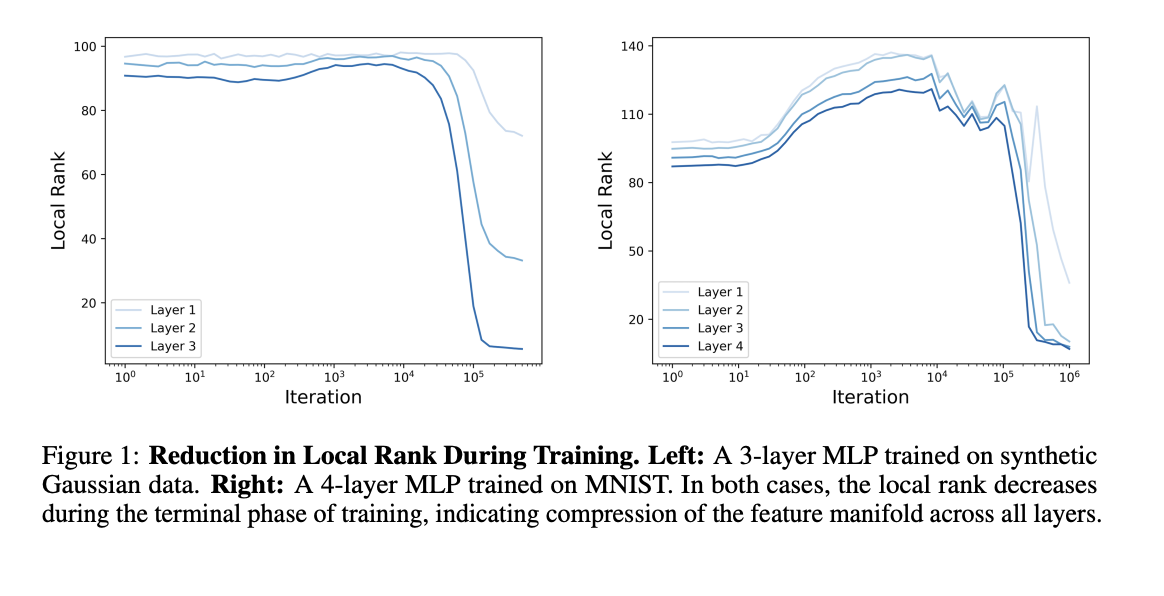

Local rank is a new metric for measuring how strongly a deep neural network compresses its data. For each layer, it estimates the effective number of feature dimensions the network actually uses, and it can be tracked as training progresses.
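
To make the definition concrete, here is a minimal pure-Python sketch. It assumes local rank is computed as the average rank of the Jacobian of each layer's pre-activations with respect to the network input, taken over a sample of inputs. That is our reading of the metric, not code from the paper. The network is a small fully connected ReLU model, and the helper names (`matmul`, `matrix_rank`, `preactivation_jacobians`, `local_rank`) are hypothetical. A real experiment would use an autodiff library and an SVD-based rank with a relative tolerance instead of the hand-written Gaussian elimination below.

```python
def matmul(a, b):
    """Multiply two matrices stored as lists of rows."""
    return [
        [sum(a[i][k] * b[k][j] for k in range(len(b))) for j in range(len(b[0]))]
        for i in range(len(a))
    ]


def matrix_rank(m, tol=1e-9):
    """Numerical rank via Gaussian elimination with partial pivoting."""
    rows = [list(r) for r in m]
    n_rows, n_cols = len(rows), len(rows[0])
    rank = 0
    for col in range(n_cols):
        if rank == n_rows:
            break
        # Pick the remaining row with the largest entry in this column.
        pivot = max(range(rank, n_rows), key=lambda r: abs(rows[r][col]))
        if abs(rows[pivot][col]) < tol:
            continue  # no usable pivot: this column adds no new direction
        rows[rank], rows[pivot] = rows[pivot], rows[rank]
        for r in range(rank + 1, n_rows):
            factor = rows[r][col] / rows[rank][col]
            for c in range(col, n_cols):
                rows[r][c] -= factor * rows[rank][c]
        rank += 1
    return rank


def preactivation_jacobians(weights, biases, x):
    """Jacobian of every pre-activation z_l = W_l h_{l-1} + b_l with respect
    to the input x, for a fully connected ReLU network."""
    h = list(x)
    dh_dx = None  # None stands for the identity, since h_0 = x
    jacobians = []
    for W, b in zip(weights, biases):
        z = [sum(w * hi for w, hi in zip(row, h)) + bi for row, bi in zip(W, b)]
        # Chain rule: dz/dx = W @ dh/dx
        dz_dx = [list(r) for r in W] if dh_dx is None else matmul(W, dh_dx)
        jacobians.append(dz_dx)
        # ReLU derivative: keep rows of active units, zero out inactive ones.
        dh_dx = [row if zi > 0 else [0.0] * len(row) for row, zi in zip(dz_dx, z)]
        h = [max(zi, 0.0) for zi in z]
    return jacobians


def local_rank(weights, biases, inputs, tol=1e-9):
    """Average Jacobian rank per layer over a sample of inputs."""
    totals = [0] * len(weights)
    for x in inputs:
        for layer, jac in enumerate(preactivation_jacobians(weights, biases, x)):
            totals[layer] += matrix_rank(jac, tol)
    return [t / len(inputs) for t in totals]


# Toy 2-2-2 network with hand-picked (hypothetical) weights.
W = [[[1.0, 0.0], [0.0, 1.0]], [[1.0, 1.0], [1.0, -1.0]]]
b = [[0.0, 0.0], [0.0, 0.0]]
xs = [[1.0, 1.0], [1.0, -1.0], [-1.0, -1.0]]
print(local_rank(W, b, xs))  # [2.0, 1.0]
```

In this toy case the first layer is the identity, so its local rank is 2 for every input. In the second layer, ReLU units that switch off remove directions from the Jacobian, so its average rank drops to 1 even though the layer has two units.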

Key Findings

Research from UCLA and NYU shows that the local rank of a neural network decreases as it trains, especially in the final stages of training. This indicates that the network is compressing the representations it learns. The study supports this finding with both theoretical analysis and empirical evidence.

Why is This Important?

This research links local rank to the Information Bottleneck framework, which explains learning and generalization as compressing the input while preserving the information relevant to the task. A lower local rank suggests that the network has reduced the complexity of its internal representations, keeping what matters for classifying or predicting outcomes and discarding the rest.
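
The Information Bottleneck is usually written as a trade-off: find a representation T of the input X that minimizes I(X;T) − β I(T;Y), keeping as little information about X as possible (compression) while keeping as much as possible about the target Y, with β > 0 setting the balance. The toy sketch below uses discrete variables, deterministic encoders, and hypothetical function names (`mutual_information`, `ib_terms`, `label`). It compares two representations of four equally likely inputs whose label depends only on x // 2. It illustrates what compression without losing task-relevant information means; it does not compute local rank.

```python
import math
from collections import defaultdict


def mutual_information(joint):
    """I(A;B) in bits for a joint distribution given as {(a, b): probability}."""
    p_a, p_b = defaultdict(float), defaultdict(float)
    for (a, b), p in joint.items():
        p_a[a] += p
        p_b[b] += p
    return sum(
        p * math.log2(p / (p_a[a] * p_b[b]))
        for (a, b), p in joint.items()
        if p > 0
    )


def ib_terms(p_x, label, encode):
    """Return (I(X;T), I(T;Y)) for a deterministic encoder T = encode(X)
    and deterministic labels Y = label(X)."""
    joint_xt, joint_ty = defaultdict(float), defaultdict(float)
    for x, p in p_x.items():
        t = encode(x)
        joint_xt[(x, t)] += p
        joint_ty[(t, label(x))] += p
    return mutual_information(joint_xt), mutual_information(joint_ty)


def label(x):
    """Toy labeling rule: the target only depends on x // 2."""
    return x // 2


p_x = {0: 0.25, 1: 0.25, 2: 0.25, 3: 0.25}  # four equally likely inputs
beta = 2.0  # weight on preserving label information

for name, encode in [("identity", lambda x: x), ("merge by label", lambda x: x // 2)]:
    i_xt, i_ty = ib_terms(p_x, label, encode)
    print(name, i_xt, i_ty, i_xt - beta * i_ty)
# identity 2.0 1.0 0.0
# merge by label 1.0 1.0 -1.0
```

Merging inputs that share a label halves I(X;T) while leaving I(T;Y) unchanged, so that representation scores better on the IB objective. The research discussed here links a falling local rank to this kind of compression.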

Practical Applications

- Model Compression: Understanding local rank can lead to better model compression techniques, making AI applications more efficient.

- Improved Generalization: Monitoring local rank may help improve how well models perform on new, unseen data.

- Automation Opportunities: Identifying areas in customer interactions that could benefit from AI solutions.
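
One way local rank could feed into model compression, sketched under our own assumptions: if a layer's measured local rank is far below its width, the layer may be a candidate for a narrower replacement or a low-rank factorization. The `LayerReport` class, the `compression_candidates` helper, the 0.5 threshold, and the measurements in the usage example are all hypothetical, and any real compression step would need to be validated by re-evaluating the model.

```python
import math
from dataclasses import dataclass


@dataclass
class LayerReport:
    name: str
    width: int          # number of units in the layer
    local_rank: float   # average local rank measured on held-out inputs


def compression_candidates(layers, max_ratio=0.5):
    """Flag layers whose local rank is at most max_ratio of their width.

    Returns (name, suggested_rank) pairs. suggested_rank is the local rank
    rounded up: a possible starting point for a low-rank factorization or a
    narrower layer (a heuristic, not a guarantee of preserved accuracy).
    """
    return [
        (layer.name, math.ceil(layer.local_rank))
        for layer in layers
        if layer.width > 0 and layer.local_rank / layer.width <= max_ratio
    ]


# Made-up measurements, for illustration only.
report = [
    LayerReport("fc1", width=256, local_rank=200.0),
    LayerReport("fc2", width=256, local_rank=40.3),
    LayerReport("fc3", width=128, local_rank=10.0),
]
print(compression_candidates(report))  # [('fc2', 41), ('fc3', 10)]
```

The ratio test keeps the rule independent of layer size; a stricter `max_ratio` flags fewer layers and trades compression for safety.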

Next Steps for Businesses

To leverage these insights in your organization:

- Define KPIs: Ensure that your AI initiatives have measurable impacts on your business outcomes.

- Select AI Solutions: Choose tools that meet your specific needs and allow for customization.

- Implement Gradually: Start with pilot projects, gather data, and scale AI adoption carefully.

Check out the Research Paper

All credit for this research goes to the researchers involved. For a deeper understanding, read the full research paper.