Recent Advancements in AI and Multimodal Models

Large Language Models (LLMs) have transformed the AI landscape, leading to the development of Multimodal Large Language Models (MLLMs). These models process not just text but also images, audio, and video, which broadens the range of tasks a single model can handle.

Challenges with Current Open-Source Solutions

Despite the progress of MLLMs, many open-source options still struggle with multimodal capabilities and user interaction. Proprietary models like GPT-4o excel in these areas, which leaves a need for high-performing open-source alternatives.

Emerging Open-Source Models

Open-source LLMs such as LLaMA and Baichuan have shown great potential, thanks to efforts from academia and industry. These models focus on natural language processing and generate text effectively. Vision-Language Models (VLMs) and Audio-Language Models (ALMs) are also making strides in handling visual and audio data, respectively.

Introducing Baichuan-Omni

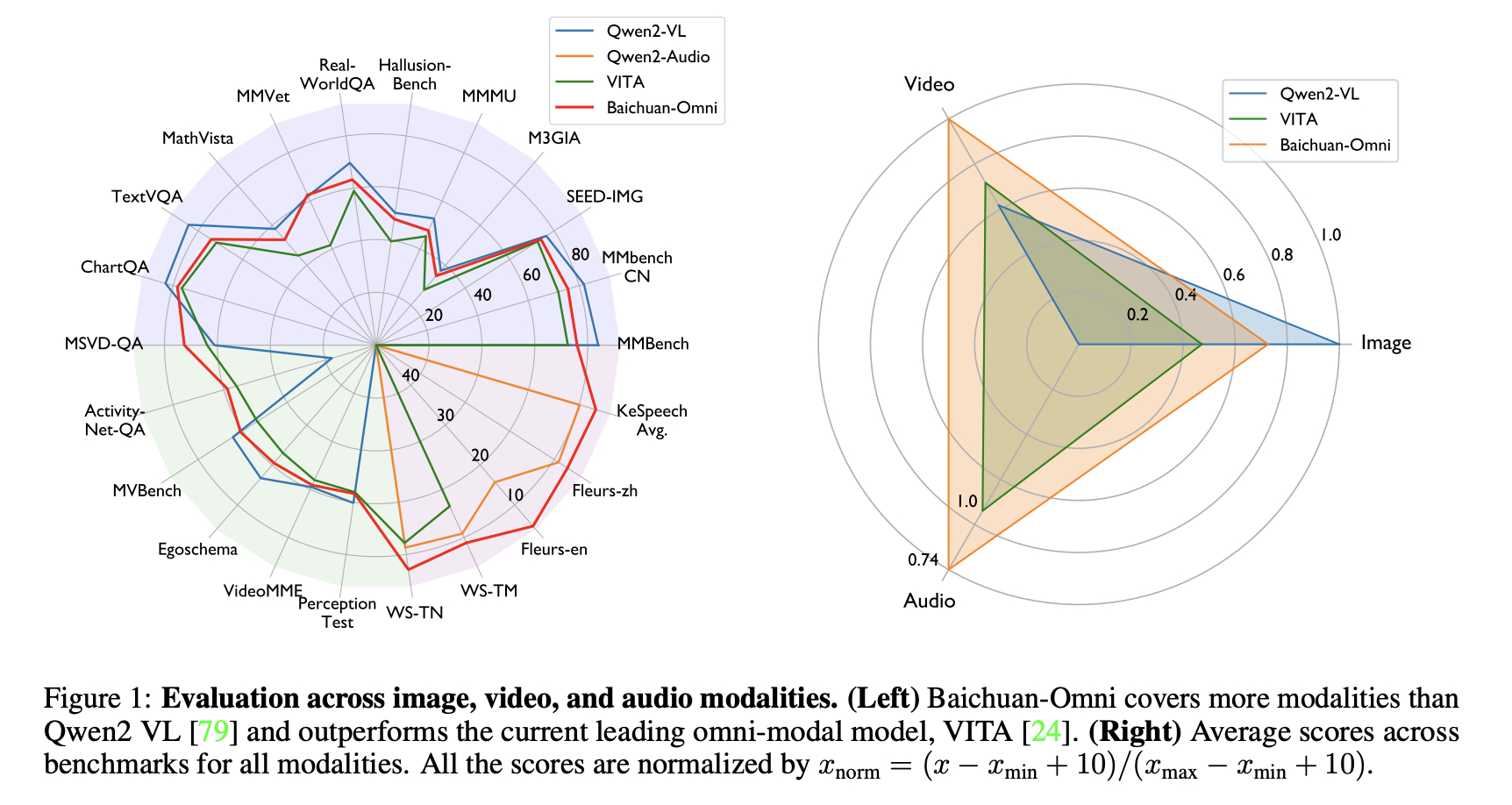

To address the limitations of existing models, researchers have developed Baichuan-Omni. This open-source model can process audio, images, video, and text concurrently within a single model.

Key Features of Baichuan-Omni

- Omni-Modal Training: Baichuan-Omni uses a dedicated training scheme designed to improve both its handling of multiple data types and the quality of user interaction.

- Multilingual Support: The model supports languages like English and Chinese, catering to a wider audience.

- Comprehensive Data Usage: It is trained on diverse datasets, including text, images, videos, and audio, to ensure robust performance.

- Advanced Task Performance: Baichuan-Omni performs strongly on tasks such as speech recognition and video understanding, where it is reported to outperform many leading models.

Future Improvements

While Baichuan-Omni shows impressive capabilities, there is still room for enhancement in areas like text extraction, video understanding, and environmental sound recognition.

Conclusion

The Baichuan-Omni model represents a significant step toward a fully integrated omni-modal LLM, one that handles text, images, video, and audio in a single system. Its high-quality training data and training design make it a valuable resource for the open-source community.

Get Involved and Stay Updated

Explore the research paper and GitHub for more details.

Transform Your Business with AI

Consider using Baichuan-Omni to enhance your company’s AI capabilities. Here are practical steps to integrate AI:

- Identify Automation Opportunities: Find customer interaction points where AI can add value.

- Define KPIs: Ensure measurable impacts from your AI initiatives.

- Select an AI Solution: Choose tools that meet your needs and allow for customization.

- Implement Gradually: Start with pilot projects, gather data, and expand AI usage carefully.
