The Rise of Large Language Models (LLMs)

Large Language Models (LLMs) have advanced rapidly and show remarkable abilities, but they are costly to run. They typically need powerful GPU infrastructure and consume a lot of energy, which puts them out of reach for smaller businesses and individual users who lack advanced hardware. The energy consumption also raises sustainability concerns. Both problems point to a need for efficient, CPU-friendly solutions.

Introducing bitnet.cpp

Microsoft has open-sourced bitnet.cpp, an inference framework for running 1-bit LLMs directly on CPUs. It allows even large models, such as those with 100 billion parameters, to run on local devices without GPUs. With bitnet.cpp, users can see speedups of up to 6.17x and energy reductions of up to 82.2%. This makes LLMs more accessible and affordable for individuals and small businesses, who can use AI technology without the high cost of specialized hardware.
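A rough back-of-the-envelope estimate helps show why low-bit weights make a 100-billion-parameter model plausible on a local machine. The sketch below compares weight storage at 16 bits per weight with an idealized ternary encoding of log2(3) ≈ 1.58 bits per weight. It counts weights only (no activations or caches), the function name `weight_memory_gb` is hypothetical, and real file formats may pack weights less tightly than this ideal.

```python
import math


def weight_memory_gb(num_params: float, bits_per_weight: float) -> float:
    """Approximate weight storage in gigabytes (1 GB = 1e9 bytes)."""
    return num_params * bits_per_weight / 8 / 1e9


PARAMS_100B = 100e9            # 100 billion parameters
TERNARY_BITS = math.log2(3)    # ~1.58 bits: ideal cost of a three-valued weight

fp16_gb = weight_memory_gb(PARAMS_100B, 16)
ternary_gb = weight_memory_gb(PARAMS_100B, TERNARY_BITS)

print(f"FP16 weights:    {fp16_gb:.1f} GB")     # 200.0 GB
print(f"Ternary weights: {ternary_gb:.1f} GB")  # 19.8 GB
```

Under these assumptions the weights shrink by roughly a factor of ten, which is the difference between needing a multi-GPU server and fitting in the RAM of a well-equipped workstation.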

Technical Advantages of bitnet.cpp

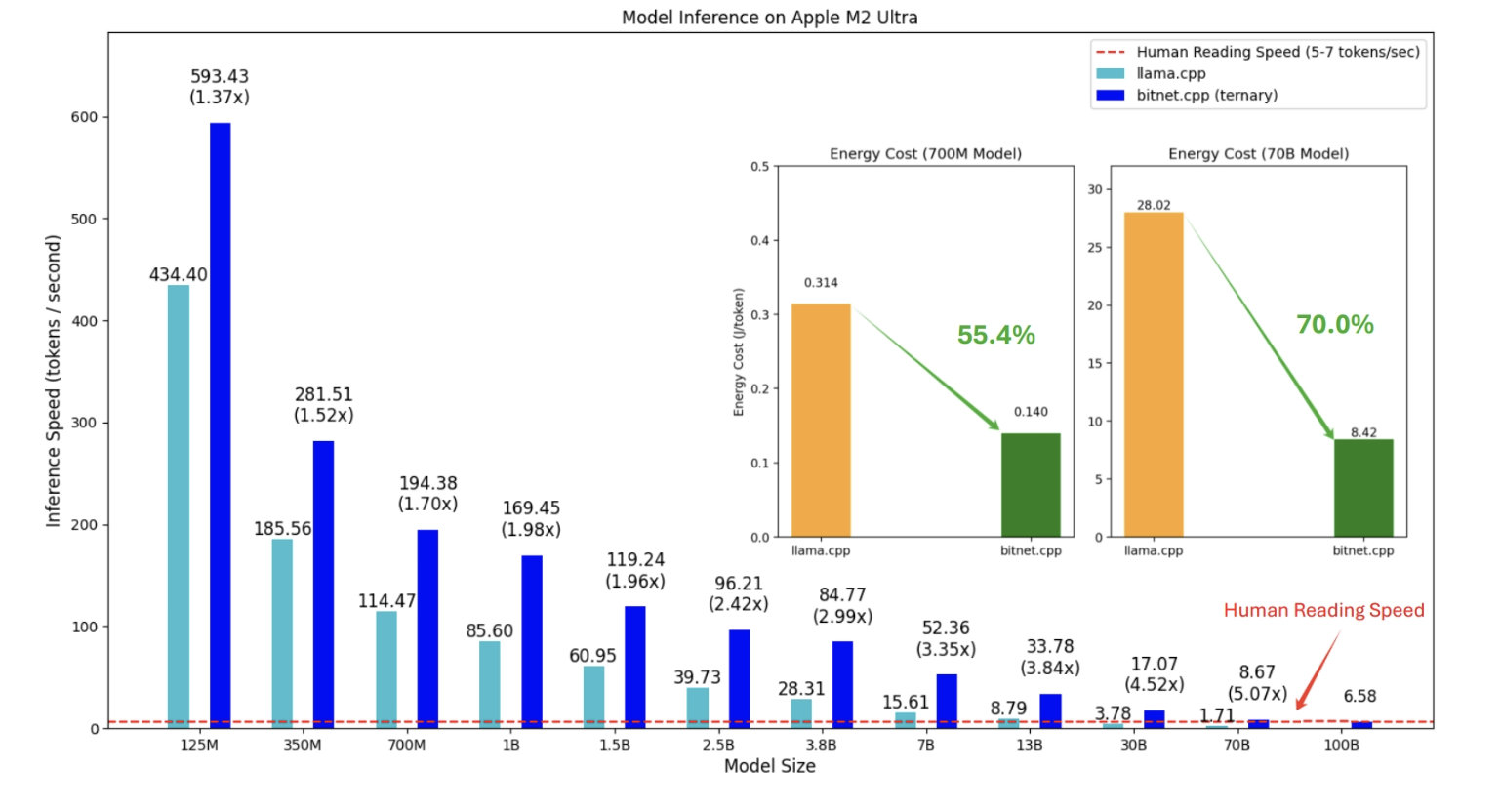

bitnet.cpp is designed for efficient computation of 1-bit LLMs, including the BitNet b1.58 model. It provides optimized kernels for CPU inference and currently supports ARM and x86 CPUs, with support for NPUs, GPUs, and mobile devices planned. Benchmarks show speedups of 1.37x to 5.07x on ARM CPUs and 2.37x to 6.17x on x86 CPUs, with larger models seeing the larger gains. Energy consumption is reduced by 55.4% to 82.2%. Even on a single CPU, users can run large models at speeds comparable to human reading (about 5 to 7 tokens per second).
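The "1.58" in BitNet b1.58 comes from its ternary weights: each weight is one of -1, 0, or +1, and a three-valued symbol carries log2(3) ≈ 1.58 bits. The sketch below is a plain-Python illustration of why this suits CPUs: a matrix-vector product with ternary weights needs only additions and subtractions, no multiplications. It is not how bitnet.cpp's optimized kernels are written, and the function name `ternary_matvec` is hypothetical.

```python
from typing import List


def ternary_matvec(weights: List[List[int]], x: List[float]) -> List[float]:
    """Multiply a ternary matrix (entries in {-1, 0, +1}) by a vector
    using only additions and subtractions."""
    out = []
    for row in weights:
        acc = 0.0
        for w, xi in zip(row, x):
            if w == 1:
                acc += xi      # +1 weight: add the input
            elif w == -1:
                acc -= xi      # -1 weight: subtract the input
            # 0 weight: the input is skipped entirely
        out.append(acc)
    return out


W = [[1, 0, -1],
     [-1, 1, 1]]
x = [0.5, 2.0, -1.5]

y = ternary_matvec(W, x)
print(y)  # [2.0, 0.0]
```

A production kernel would pack several ternary weights into each byte and process many of them per instruction, but the arithmetic it performs is the same add-or-subtract pattern shown here.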

Transforming the LLM Landscape

bitnet.cpp has the potential to change how LLM inference is run. It reduces reliance on expensive hardware and lays the groundwork for software and hardware stacks optimized specifically for 1-bit LLMs. The framework demonstrates that effective inference is achievable with modest resources, paving the way for a new generation of local LLMs. This is especially beneficial for privacy-conscious users, since running a model locally minimizes the data sent to external servers. Microsoft’s ongoing research and its “1-bit AI Infra” initiative aim to support industrial adoption of these models, with bitnet.cpp as a central piece of that effort.

Conclusion: A New Era for LLM Technology

In summary, bitnet.cpp marks a significant step toward making LLM technology more accessible, efficient, and environmentally friendly. With speedups of up to 6.17x and energy reductions of up to 82.2%, it lets large 1-bit models run on standard CPU hardware, reducing the need for costly GPUs at inference time. This could democratize access to LLMs and encourage local use, opening new opportunities for individuals and businesses. As Microsoft continues its 1-bit LLM research and infrastructure work, the outlook for scalable and sustainable AI solutions is promising.

All credit for this research goes to the project’s researchers.
