Understanding Model Merging in AI

Model merging, the combination of several trained models into a single one, is a key challenge in building versatile AI systems, especially with large language models (LLMs). Individual models often excel in specific areas, such as multilingual communication or specialized domain knowledge, and merging them can yield a stronger, multi-functional system without training a new model from scratch. However, existing merging approaches can be complex and resource-intensive, requiring expert knowledge and significant computational power.

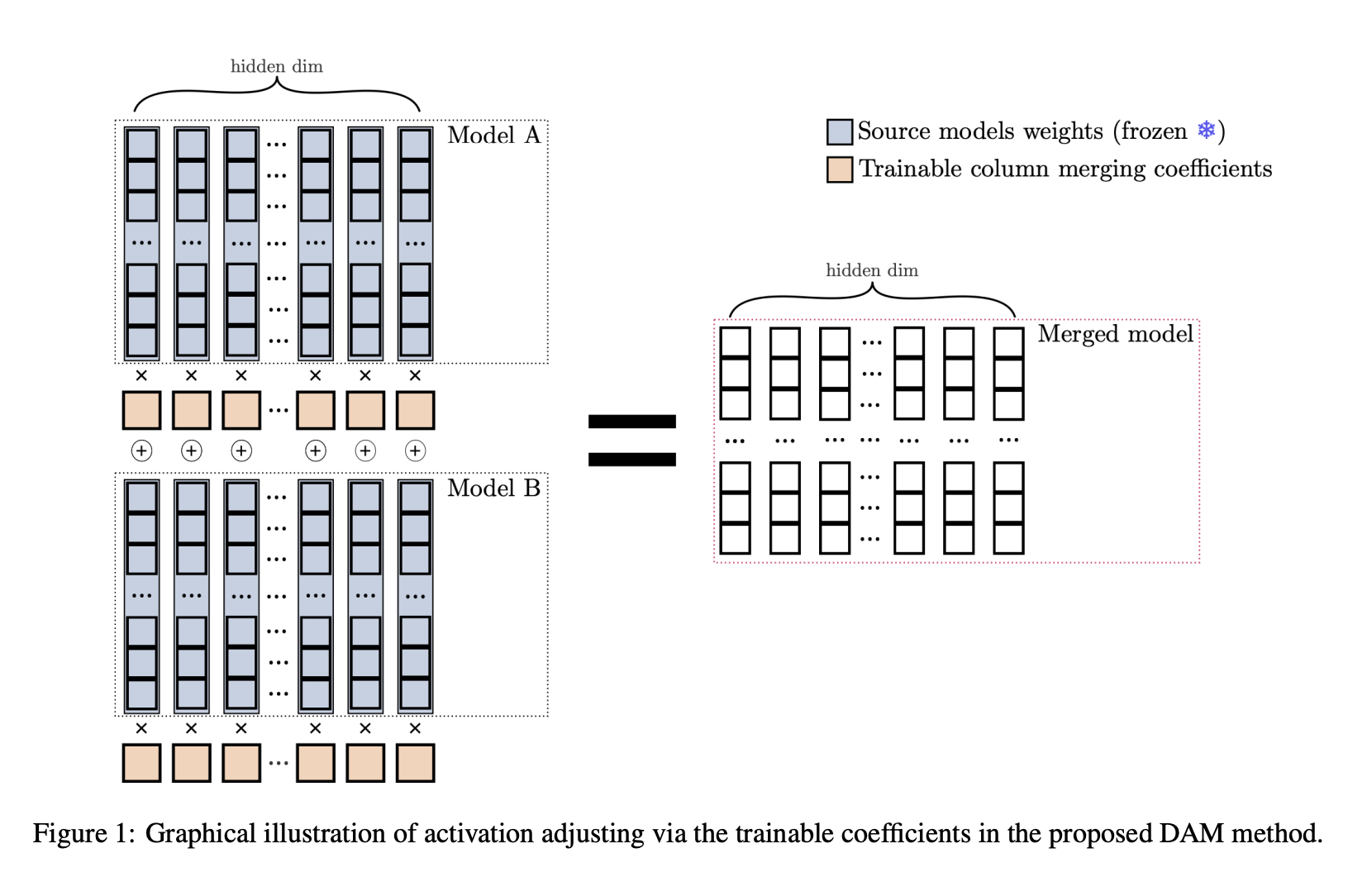

Introducing Differentiable Adaptive Merging (DAM)

Researchers from Arcee AI and Liquid AI have developed a new technique called Differentiable Adaptive Merging (DAM). The method simplifies the merging of language models, making it more efficient and less demanding on resources. Instead of relying on computationally heavy procedures, DAM treats the way models are combined as something to be optimized directly.

How DAM Works

DAM merges multiple LLMs by learning how best to combine their strengths. It adjusts the weighting of each model’s contribution so that the merged model retains the best features of each. The method focuses on:

- Minimizing differences between the merged model and individual models.

- Encouraging diversity in how models are combined.

- Maintaining stability and simplicity during training.
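The three goals above can be read as terms of a single loss function. The following is a minimal sketch under stated assumptions, not the authors' implementation: it uses toy linear layers instead of LLMs, assumes one learnable coefficient per output column of each model, and picks KL divergence, cosine similarity, and an L1/L2 penalty as stand-ins for the three goals. The names `merge`, `merge_loss`, `lam_div`, and `lam_reg` are hypothetical.

```python
import numpy as np

def softmax(z):
    z = z - z.max(axis=-1, keepdims=True)  # subtract the max for numerical stability
    e = np.exp(z)
    return e / e.sum(axis=-1, keepdims=True)

def merge(weights, coeffs):
    """Weighted sum of source weights; coeffs[i] scales the columns of weights[i]."""
    return sum(w * c[None, :] for w, c in zip(weights, coeffs))

def kl_divergence(p, q, eps=1e-12):
    """Mean KL(p || q) over a batch of probability rows."""
    return float(np.mean(np.sum(p * (np.log(p + eps) - np.log(q + eps)), axis=-1)))

def merge_loss(coeffs, weights, batches, lam_div=0.01, lam_reg=0.001):
    merged = merge(weights, coeffs)
    # Goal 1 (assumed form): the merged model should match each source
    # model's output distribution on that model's own data.
    fit = sum(kl_divergence(softmax(x @ w), softmax(x @ merged))
              for w, x in zip(weights, batches))
    # Goal 2 (assumed form): penalize coefficient vectors that point the
    # same way, so the models are not all weighted identically.
    div = 0.0
    for i in range(len(coeffs)):
        for j in range(i + 1, len(coeffs)):
            a, b = coeffs[i], coeffs[j]
            div += float(a @ b / (np.linalg.norm(a) * np.linalg.norm(b) + 1e-12))
    # Goal 3 (assumed form): L1 + L2 penalty keeps coefficients small and simple.
    reg = float(np.abs(coeffs).sum() + np.square(coeffs).sum())
    return fit + lam_div * div + lam_reg * reg

# Toy setup: two "models" (4 inputs, 3 outputs) and one data batch per model.
rng = np.random.default_rng(0)
w_a, w_b = rng.normal(size=(4, 3)), rng.normal(size=(4, 3))
x_a, x_b = rng.normal(size=(8, 4)), rng.normal(size=(8, 4))

coeffs = np.full((2, 3), 0.5)  # start from plain averaging
loss = merge_loss(coeffs, [w_a, w_b], [x_a, x_b])
print(f"loss at uniform averaging: {loss:.4f}")
```

This sketch only evaluates the loss at one set of coefficients. In a real setting the coefficients would be updated by gradient descent in an automatic-differentiation framework, while the source models' weights stay fixed.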

Proven Effectiveness

The authors report that DAM performs as well as, or better than, more complex methods such as Evolutionary Merging. In specific cases, such as Japanese-language processing and mathematical problem solving, DAM adapted well without the heavy computational load of other techniques.

Benefits of Using DAM

DAM offers a practical solution for merging LLMs with:

- Lower computational costs.

- Less need for manual adjustments.

- High performance across various tasks.

This research suggests that simpler methods can sometimes match or outperform more complex ones, and it underscores the importance of efficiency and scalability in AI development.

Get Involved and Learn More

To explore the full research, check out the paper.
