Practical Solutions for High-Throughput Long-Context Inference

Context and Challenges in Long-Context Inference

As large language models (LLMs) see wider use, serving long-context requests at high throughput becomes difficult: the key-value (KV) cache grows with both context length and batch size, which drives up memory requirements. Together AI’s research addresses this by improving inference throughput for LLMs that process long input sequences at large batch sizes.

Key Innovations: MagicDec and Adaptive Sequoia Trees

Together AI introduces two critical algorithmic advancements in speculative decoding: MagicDec and Adaptive Sequoia Trees. These innovations are designed to enhance throughput under long-context and large-batch conditions.
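For readers new to the underlying technique, here is a minimal sketch of generic greedy speculative decoding. It is not the MagicDec or Adaptive Sequoia Trees algorithm. The names `speculative_step`, `target_next`, and `draft_next` are hypothetical, and the two "models" are toy functions over integers chosen only to make the accept/reject logic visible.

```python
from typing import Callable, List

# A "model" here is any function mapping a token prefix to the next token (greedy).
NextToken = Callable[[List[int]], int]


def speculative_step(target_next: NextToken, draft_next: NextToken,
                     prefix: List[int], gamma: int) -> List[int]:
    """Run one draft-then-verify round and return the newly produced tokens."""
    # 1) The cheap draft model proposes gamma tokens autoregressively.
    proposal: List[int] = []
    ctx = list(prefix)
    for _ in range(gamma):
        tok = draft_next(ctx)
        proposal.append(tok)
        ctx.append(tok)

    # 2) The target model checks each proposed position. A real system scores
    #    all positions in one batched forward pass; this loop only mimics it.
    accepted: List[int] = []
    ctx = list(prefix)
    for tok in proposal:
        expected = target_next(ctx)
        if tok != expected:
            # First mismatch: keep the target's token and discard the rest.
            accepted.append(expected)
            return accepted
        accepted.append(tok)
        ctx.append(tok)

    # 3) Every draft token matched, so the target contributes one extra token.
    accepted.append(target_next(ctx))
    return accepted


# Toy models (assumptions for illustration): the target counts upward modulo 10,
# and the draft agrees except right after token 3.
def target_next(ctx: List[int]) -> int:
    return (ctx[-1] + 1) % 10


def draft_next(ctx: List[int]) -> int:
    return 0 if ctx[-1] == 3 else (ctx[-1] + 1) % 10


new_tokens = speculative_step(target_next, draft_next, [0], gamma=4)
```

Under greedy verification the output matches what the target model alone would have produced. The gain comes from emitting several tokens per target pass.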

Memory and Compute Trade-offs in Speculative Decoding

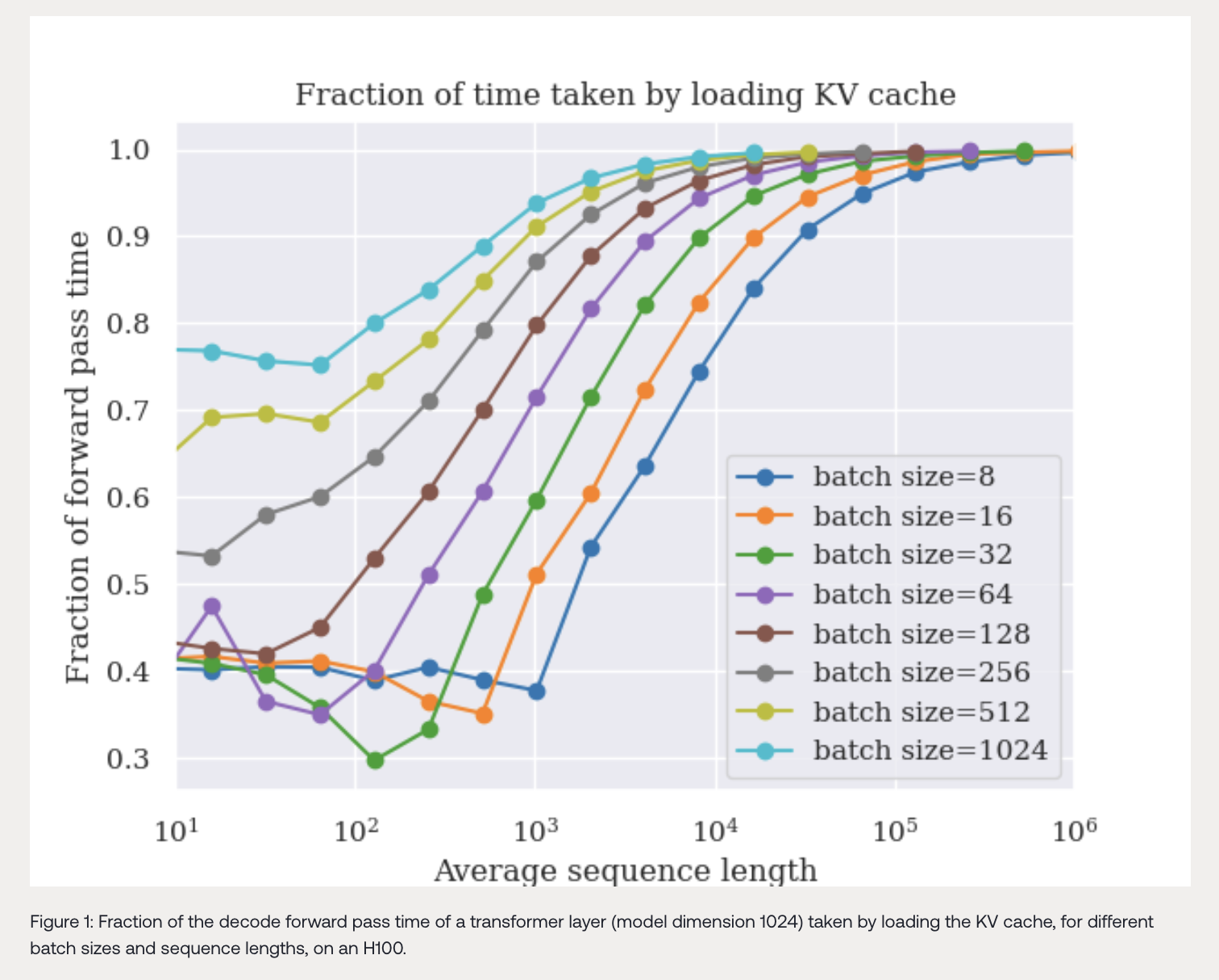

The balance between memory and compute requirements during decoding determines whether speculative decoding helps. Together AI demonstrates that, at large batch sizes and long context lengths, memory access, not computation, becomes the bottleneck for model performance, because each decoding step has to read the KV cache of every sequence in the batch.
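To get a feel for the memory side, the sketch below estimates KV cache size and compares it with model weight size. The helper names are hypothetical. The model shape (32 layers, 32 KV heads, head dimension 128, roughly 7 billion parameters, 16-bit values) and the batch and context sizes are illustrative assumptions, not figures from Together AI's experiments.

```python
def kv_cache_bytes(num_layers: int, num_kv_heads: int, head_dim: int,
                   seq_len: int, batch_size: int, bytes_per_elem: int = 2) -> int:
    """Bytes needed to store keys and values for every layer, token, and sequence."""
    # The leading factor of 2 accounts for storing both a key and a value vector.
    return (2 * num_layers * num_kv_heads * head_dim
            * seq_len * batch_size * bytes_per_elem)


def weight_bytes(num_params: int, bytes_per_elem: int = 2) -> int:
    """Bytes needed to store the model weights."""
    return num_params * bytes_per_elem


# Assumed, illustrative configuration (not taken from the article).
LAYERS, KV_HEADS, HEAD_DIM = 32, 32, 128
NUM_PARAMS = 7_000_000_000

per_token = kv_cache_bytes(LAYERS, KV_HEADS, HEAD_DIM, seq_len=1, batch_size=1)
long_batch = kv_cache_bytes(LAYERS, KV_HEADS, HEAD_DIM, seq_len=32_768, batch_size=32)
weights = weight_bytes(NUM_PARAMS)

print(f"KV cache per token:        {per_token / 2**20:.2f} MiB")
print(f"KV cache at 32K x batch 32: {long_batch / 2**30:.0f} GiB")
print(f"Model weights:             {weights / 2**30:.1f} GiB")
print(f"KV cache / weights ratio:  {long_batch / weights:.1f}x")
```

Under these assumptions the KV cache is far larger than the weights, so the bytes read per decoding step are dominated by the cache rather than by the model itself.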

Empirical Results

Empirical results show that speculative decoding can substantially improve throughput, achieving up to a 2x speedup for certain models on 8 A100 GPUs. In the long-context regime, larger batch sizes make speculative decoding more effective, which opens new possibilities for high-throughput, large-scale LLM deployments.
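A rough way to reason about such speedups is the standard back-of-the-envelope model for speculative decoding, sketched below. It assumes each draft token is accepted independently with probability `alpha`, which is a simplification, and the function and parameter names are hypothetical. It is not the analysis used in Together AI's work, and the numbers passed in are placeholders rather than measured values.

```python
def expected_tokens_per_round(alpha: float, gamma: int) -> float:
    """Expected tokens produced per verification round.

    alpha: assumed per-token acceptance probability (0 <= alpha <= 1).
    gamma: number of tokens the draft model proposes per round.
    """
    if alpha >= 1.0:
        # Every draft token is accepted, plus one token from the target model.
        return float(gamma + 1)
    # Geometric series: 1 + alpha + alpha^2 + ... + alpha^gamma.
    return (1.0 - alpha ** (gamma + 1)) / (1.0 - alpha)


def estimated_speedup(alpha: float, gamma: int, draft_cost_ratio: float,
                      verify_cost_ratio: float = 1.0) -> float:
    """Estimated speedup over plain autoregressive decoding.

    draft_cost_ratio: cost of one draft step relative to one target decode step.
    verify_cost_ratio: cost of verifying gamma + 1 positions relative to one
        target decode step. It stays close to 1 when decoding is memory-bound,
        since the same weights and KV cache are read either way.
    """
    round_cost = gamma * draft_cost_ratio + verify_cost_ratio
    return expected_tokens_per_round(alpha, gamma) / round_cost


# Placeholder inputs, chosen only to show how the estimate behaves.
memory_bound = estimated_speedup(alpha=0.8, gamma=3, draft_cost_ratio=0.1)
compute_bound = estimated_speedup(alpha=0.8, gamma=3, draft_cost_ratio=0.1,
                                  verify_cost_ratio=3.0)
```

The estimate drops once verification stops being nearly free, which is why it matters whether decoding stays memory-bound at large batch sizes.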

Conclusion

Together AI’s research reshapes the understanding of how LLMs can be optimized for real-world, large-scale applications. With innovations like MagicDec and Adaptive Sequoia Trees, speculative decoding is poised to become a key technique for improving LLM performance in long-context scenarios.
