Practical Solutions for Efficient Deployment of Large-Scale Transformer Models

Challenges in Deploying Large Transformer Models

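Scaling Transformer-based models beyond 100 billion parameters has produced groundbreaking results in natural language processing. Deploying these models efficiently is hard, however. Generative inference is sequential: output tokens are produced one at a time, and each step depends on the tokens generated before it, so the work cannot be parallelized across the output sequence. Meeting latency and cost targets therefore requires carefully chosen parallel layouts across accelerator chips, along with memory optimizations such as managing the key/value cache that grows with batch size and sequence length.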
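To see why memory matters, here is a rough sketch of how large the key/value cache gets. During generation, each layer keeps the keys and values of all previous tokens so they are not recomputed at every step. The model dimensions below are hypothetical round numbers chosen for illustration, not the configuration of any specific model, and bf16 (2-byte) storage is assumed.

```python
def kv_cache_bytes(layers, batch, seq_len, kv_heads, head_dim, bytes_per_elem=2):
    """Approximate key/value cache size for standard multi-head attention.

    The factor of 2 accounts for storing both keys and values: every layer
    keeps one key and one value vector per head for every token of every sequence.
    """
    return 2 * layers * batch * seq_len * kv_heads * head_dim * bytes_per_elem


GIB = 2**30

# Hypothetical model dimensions (assumptions for illustration only).
config = dict(layers=96, batch=64, kv_heads=64, head_dim=128)

short_ctx = kv_cache_bytes(seq_len=2048, **config)
long_ctx = kv_cache_bytes(seq_len=8192, **config)

print(f"2048-token context: {short_ctx / GIB:.0f} GiB")  # 384 GiB
print(f"8192-token context: {long_ctx / GIB:.0f} GiB")   # 1536 GiB
```

The cache grows linearly with both batch size and context length, so long sequences quickly outgrow the memory of a single accelerator. This is one reason the partitioning layout and memory optimizations matter.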

Google’s Research on Efficient Generative Inference

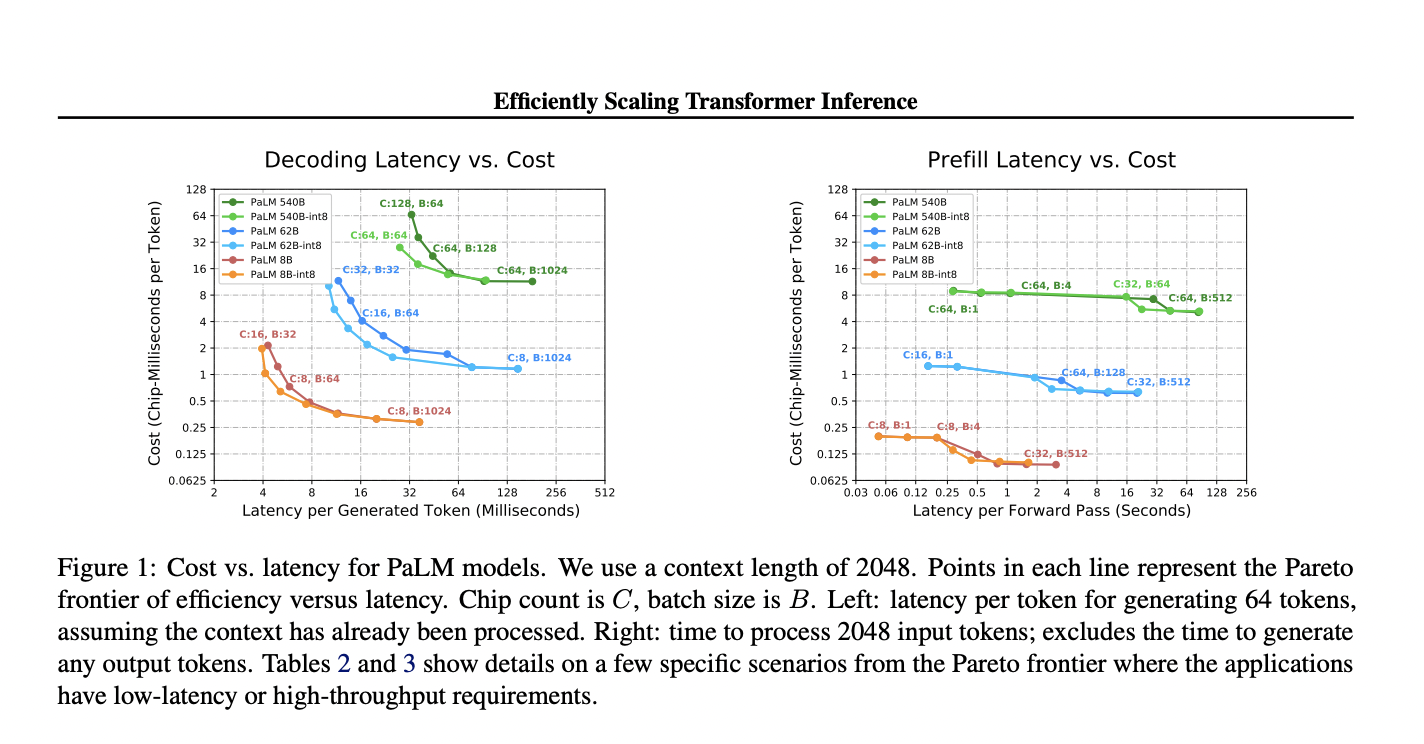

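Google researchers studied efficient generative inference for large Transformer models under tight latency targets and with long sequence lengths. They achieved a better tradeoff between latency and Model FLOPS Utilization (MFU), the fraction of the hardware's peak FLOPS spent on the model's required computation, for models with more than 500 billion parameters. On the 540B-parameter PaLM model, they report 29 ms per generated token at small batch sizes (using int8 weight quantization) and 76% MFU when processing input tokens at large batch sizes, with a 2048-token context. These results support practical applications ranging from interactive chatbots to high-throughput offline inference.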
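MFU compares the FLOPs the model's computation requires with the hardware's peak. A minimal sketch, assuming the common approximation of about 2 FLOPs per parameter per token for a forward pass (which ignores attention-score FLOPs). The throughput, chip count, and 275 TFLOPS peak per chip below are illustrative assumptions, not measurements from the study.

```python
def model_flops_utilization(n_params, tokens_per_second, n_chips, peak_flops_per_chip):
    """Return MFU as a fraction in [0, 1].

    Uses the approximation that a forward pass costs ~2 * n_params FLOPs per token
    (one multiply and one add per weight), ignoring attention-score FLOPs.
    """
    required_flops_per_second = 2 * n_params * tokens_per_second
    peak_flops_per_second = n_chips * peak_flops_per_chip
    return required_flops_per_second / peak_flops_per_second


# Illustrative numbers only (assumptions, not results from the study).
mfu = model_flops_utilization(
    n_params=500e9,            # a 500B-parameter model
    tokens_per_second=8_800,   # hypothetical measured throughput
    n_chips=64,
    peak_flops_per_chip=275e12,
)
print(f"MFU: {mfu:.0%}")  # MFU: 50%
```

Because generation emits one token per sequence per step, small batches leave much of the hardware idle and MFU low; larger batches raise MFU but lengthen each step, which is the latency/MFU tradeoff the study navigates.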

Strategies for Efficient Inference

Prior work on efficiently partitioning large models for training includes NeMo Megatron, GSPMD, and Alpa. Separately, techniques such as distillation, pruning, and quantization reduce the cost of inference and can be combined with better partitioning.
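Of the inference techniques listed, quantization is the simplest to illustrate. The sketch below shows generic symmetric per-row int8 weight quantization: each row of a weight matrix is stored as 8-bit integers plus one floating-point scale. It is a textbook illustration in plain Python, not the specific quantization scheme used in the study, and the weight values are made up.

```python
def quantize_rows_int8(weights):
    """Symmetric per-row int8 quantization.

    Each row is scaled so its largest absolute value maps to 127; values are
    rounded to the nearest integer and clamped to [-127, 127].
    Returns (quantized rows, per-row scales).
    """
    quantized, scales = [], []
    for row in weights:
        max_abs = max(abs(w) for w in row)
        scale = max_abs / 127 if max_abs > 0 else 1.0  # avoid dividing by zero
        q_row = [max(-127, min(127, round(w / scale))) for w in row]
        quantized.append(q_row)
        scales.append(scale)
    return quantized, scales


def dequantize_rows(quantized, scales):
    """Recover approximate float weights from int8 values and per-row scales."""
    return [[q * s for q in row] for row, s in zip(quantized, scales)]


weights = [[0.5, -1.27, 0.01], [2.54, 0.0, -0.3]]  # hypothetical weights
q, s = quantize_rows_int8(weights)
approx = dequantize_rows(q, s)
print(q)  # int8 values, one list per row
```

Each reconstructed weight is within half a quantization step (scale / 2) of the original. Storing weights in 8 bits instead of 16 halves the bytes that must be loaded per step, which matters most in small-batch generation, where loading weights dominates step time.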

Optimizing Partitioning Layouts for Balancing Efficiency and Latency

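The study showed that the best partitioning layout depends on the batch size and on the phase of inference: prefill, in which the whole input prompt is processed in parallel, versus generation, in which output tokens are produced one at a time. Choosing the layout for each phase and batch size is crucial for balancing efficiency (MFU) against latency.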
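A rough roofline-style calculation helps explain why batch size and phase matter. In a matrix multiply against the weights, each token costs about 2 FLOPs per parameter, while the weights must be read from memory once per step no matter how many tokens share them. The sketch assumes bf16 weights, ignores the KV cache and activations, and uses illustrative hardware figures (275 TFLOPS peak, 1.2 TB/s memory bandwidth per chip); it is a simplification, not the performance model from the study.

```python
def weight_arithmetic_intensity(batch_size, tokens_per_sequence, bytes_per_param=2):
    """FLOPs performed per byte of weights loaded in one forward step.

    FLOPs ~= 2 * n_params * tokens and bytes ~= bytes_per_param * n_params,
    so the parameter count cancels out.
    """
    tokens_in_step = batch_size * tokens_per_sequence
    return 2 * tokens_in_step / bytes_per_param


def regime(intensity, peak_flops=275e12, memory_bandwidth=1.2e12):
    """Classify a step as memory-bound or compute-bound (illustrative hardware)."""
    ridge_point = peak_flops / memory_bandwidth  # FLOPs/byte where the two limits meet
    return "compute-bound" if intensity >= ridge_point else "memory-bound"


# Generation: one new token per sequence per step.
print(regime(weight_arithmetic_intensity(batch_size=16, tokens_per_sequence=1)))    # memory-bound
print(regime(weight_arithmetic_intensity(batch_size=512, tokens_per_sequence=1)))   # compute-bound
# Prefill: the whole 2048-token prompt is processed in a single step.
print(regime(weight_arithmetic_intensity(batch_size=1, tokens_per_sequence=2048)))  # compute-bound
```

Roughly speaking, small-batch generation is limited by how fast weights can be loaded, so layouts that minimize weight movement and communication help latency, while prefill and large-batch generation are limited by compute, where layouts that keep every chip busy raise MFU.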

Revolutionizing Various Domains with Large Transformer Models

Large Transformer models are revolutionizing many domains, and this study contributes practical partitioning methods that meet stringent latency requirements, even for models with more than 500 billion parameters.

Evolve Your Company with AI

AI Implementation Strategies

Discover how AI can redefine the way you work, including your sales processes and customer engagement. Identify automation opportunities, define KPIs, select an AI solution, and implement it gradually for optimal results.

AI KPI Management and Continuous Insights

For AI KPI management advice and continuous insights into leveraging AI, connect with us at hello@itinai.com and stay tuned on our Telegram t.me/itinainews or Twitter @itinaicom.
