Practical Solutions for Language Model Adaptation in AI

Enhancing Multilingual Capabilities

Language model adaptation lets large pre-trained language models understand and generate text in multiple languages, which is essential for global AI applications.

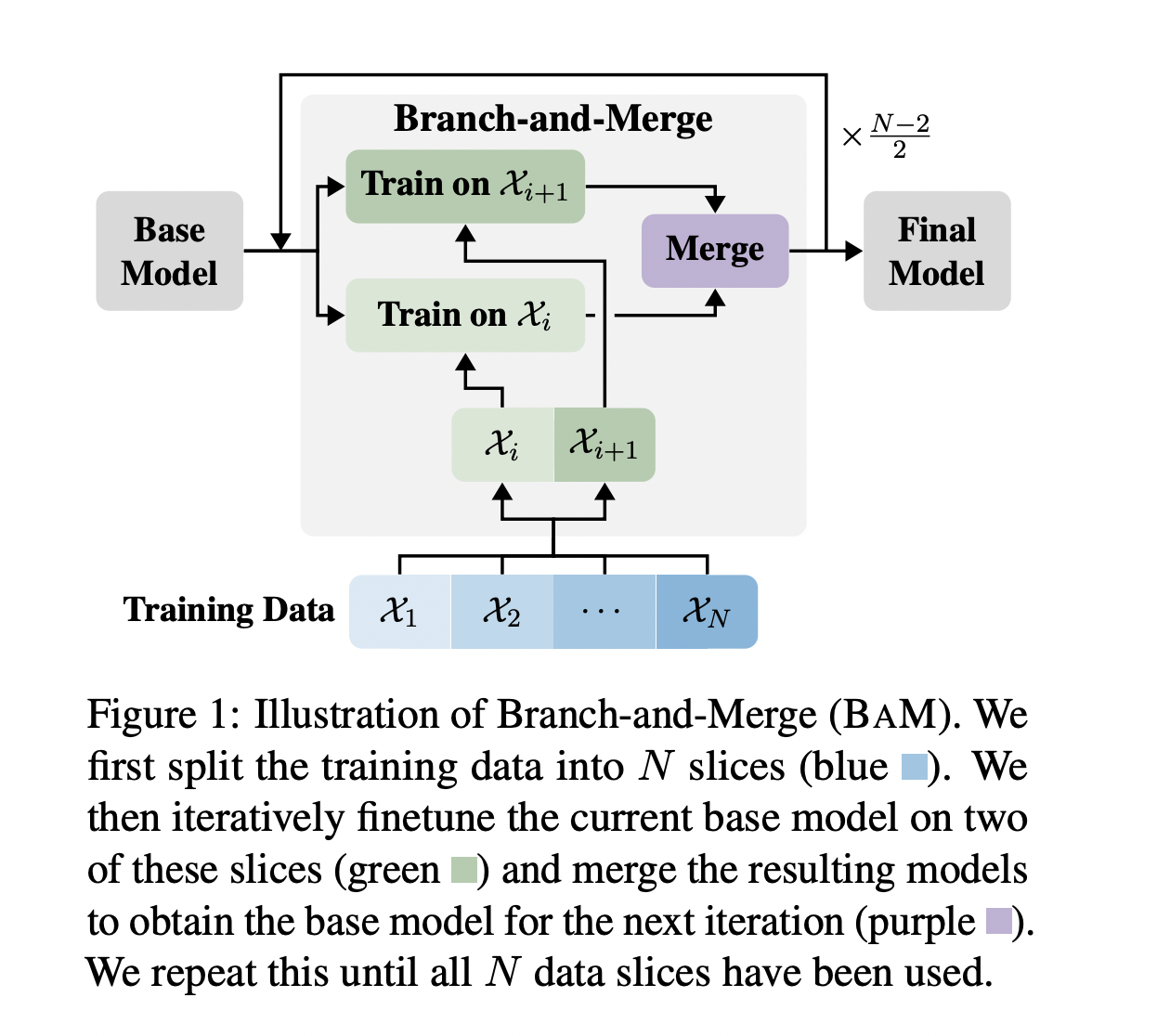

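A central challenge is catastrophic forgetting: training a model on a new target language can erode the abilities it already has in its original (source) language. Branch-and-Merge (BAM) addresses this by iteratively training several copies of the model on different subsets of the training data and merging them into a single model. This reduces forgetting while maintaining learning efficiency.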
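The loop below is a minimal sketch of the branch-and-merge idea, not the authors' implementation. It assumes simple linear averaging as the merge step and replaces real language-model training with a hypothetical toy step: `train_branch` runs gradient descent on a least-squares loss over NumPy vectors. The names `train_branch`, `merge`, and `branch_and_merge` are illustrative only. In each round, every branch starts from the current base weights, trains on its own data slice, and the branches are averaged into the next base.

```python
import numpy as np

def train_branch(theta, data_slice, lr=0.1, steps=20):
    """Hypothetical stand-in for fine-tuning: gradient descent on the
    least-squares loss 0.5 * mean((X @ theta - y) ** 2) over one data slice."""
    X, y = data_slice
    theta = theta.copy()
    for _ in range(steps):
        grad = X.T @ (X @ theta - y) / len(y)
        theta -= lr * grad
    return theta

def merge(branches):
    """Assumed merge step: element-wise (linear) average of branch parameters."""
    return np.mean(branches, axis=0)

def branch_and_merge(theta0, slices, num_branches):
    """Consume data slices in groups of num_branches: train one branch per
    slice from the current base, then merge them into the next base."""
    theta = theta0
    for start in range(0, len(slices), num_branches):
        group = slices[start:start + num_branches]
        branches = [train_branch(theta, s) for s in group]
        theta = merge(branches)
    return theta

# Toy usage: six noise-free data slices, two branches per round (three rounds).
rng = np.random.default_rng(0)
true_theta = np.array([1.0, -2.0, 0.5])
slices = []
for _ in range(6):
    X = rng.normal(size=(32, 3))
    slices.append((X, X @ true_theta))

theta = branch_and_merge(np.zeros(3), slices, num_branches=2)
print(np.round(theta, 2))
```

Running branches in parallel within a round and merging only at round boundaries is what distinguishes this from plain sequential training on the same slices; a real setup would swap the toy step for continued pretraining or instruction tuning of the full model.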

Compared with standard training methods, BAM has been shown to consistently improve benchmark performance in both the target and source languages.

Value of the BAM Method

BAM significantly reduces forgetting while matching or improving target-domain performance compared with standard pretraining and fine-tuning methods.

It offers a robust remedy for catastrophic forgetting in language model adaptation: by keeping weight changes small yet effective, it preserves the model’s capabilities in the original language while improving its performance in the target language.
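One intuition for why merging keeps weight changes small, offered as an illustrative assumption rather than the paper's own analysis: with linear averaging, the merged update is the mean of the branch updates, so by the triangle inequality its norm is at most the average branch-update norm. Components on which the branches disagree partly cancel, while the direction they share is kept in full. The sketch below uses a hypothetical helper, `update_norms`, and random toy vectors to check this numerically.

```python
import numpy as np

def update_norms(base, branches):
    """Return (norm of the merged update, mean norm of the branch updates)."""
    deltas = [b - base for b in branches]            # per-branch weight changes
    merged_delta = np.mean(deltas, axis=0)           # linear merge of the updates
    branch_norms = [np.linalg.norm(d) for d in deltas]
    return np.linalg.norm(merged_delta), np.mean(branch_norms)

rng = np.random.default_rng(42)
base = rng.normal(size=1000)
shared = 0.1 * rng.normal(size=1000)                 # change every branch learns
# Each toy branch = shared change + branch-specific noise (an assumption).
branches = [base + shared + 0.1 * rng.normal(size=1000) for _ in range(4)]

merged_norm, mean_branch_norm = update_norms(base, branches)
print(f"merged update norm:      {merged_norm:.2f}")
print(f"mean branch update norm: {mean_branch_norm:.2f}")
```

The bound holds for any set of branches; how much smaller the merged update actually is depends on how much the branches disagree.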

Impact on AI Applications

BAM provides a more efficient way to adapt large language models to diverse linguistic environments, benefiting practitioners working on multilingual AI applications.

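It is a valuable method for both continued pretraining and instruction tuning, whether or not the target language shares an alphabet with the source language (for example, German shares the Latin alphabet with English, while Bulgarian uses Cyrillic).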

Evolve Your Company with AI

Discover how AI can redefine the way you work, your sales processes, and your customer engagement.

Identify automation opportunities, define KPIs, select an AI solution, and implement gradually to use AI to your advantage.

Connect with us at hello@itinai.com for AI KPI management advice and continuous insights into leveraging AI.

For more information on AI solutions and to explore how AI can redefine your sales processes and customer engagement, visit itinai.com.