Optimizing Large Language Models (LLMs) on CPUs: Techniques for Enhanced Inference and Efficiency

Large Language Models (LLMs) built on the Transformer architecture have driven significant technological advances, particularly in understanding and generating human-like text across a wide range of AI applications.

However, deploying these models in low-resource settings is challenging, especially when GPU hardware is scarce or unavailable. In such cases, running inference on CPUs becomes a crucial, cost-effective alternative.

Practical Solutions and Value:

Recent research has introduced an approach that improves LLM inference performance on CPUs by reducing the size of the key-value (KV) cache while preserving accuracy. Because the KV cache grows with sequence length and batch size, shrinking it is essential for running LLMs effectively on memory-limited hardware.
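To make the memory stakes concrete, the Python sketch below estimates KV cache size from model dimensions and shows one common way to shrink it: storing keys and values as INT8 instead of FP16. The summary above does not say which reduction technique the paper uses, so INT8 quantization is an assumption here, as are the 7B-class dimensions and the hypothetical helper names `kv_cache_bytes`, `quantize_int8`, and `dequantize_int8`.

```python
# Illustrative KV cache sizing and INT8 quantization.
# Assumption: INT8 storage is used only as an example of KV cache reduction;
# the model dimensions below are hypothetical, not figures from the paper.


def kv_cache_bytes(num_layers: int, num_kv_heads: int, head_dim: int,
                   seq_len: int, batch_size: int, bytes_per_elem: int) -> int:
    """Memory needed to cache keys and values for every layer and token."""
    # Factor 2: one tensor for keys and one for values.
    return 2 * num_layers * num_kv_heads * head_dim * seq_len * batch_size * bytes_per_elem


def quantize_int8(values: list[float]) -> tuple[list[int], float]:
    """Symmetric per-vector INT8 quantization: returns integer codes and a scale."""
    max_abs = max(abs(x) for x in values)
    scale = max_abs / 127 if max_abs > 0 else 1.0
    # Clamp to the symmetric range [-127, 127].
    codes = [max(-127, min(127, round(x / scale))) for x in values]
    return codes, scale


def dequantize_int8(codes: list[int], scale: float) -> list[float]:
    """Approximate reconstruction of the original values."""
    return [c * scale for c in codes]


if __name__ == "__main__":
    # Hypothetical 7B-class configuration: 32 layers, 32 KV heads, head_dim 128.
    dims = dict(num_layers=32, num_kv_heads=32, head_dim=128,
                seq_len=4096, batch_size=1)
    fp16 = kv_cache_bytes(**dims, bytes_per_elem=2)
    int8 = kv_cache_bytes(**dims, bytes_per_elem=1)
    print(f"FP16 KV cache: {fp16 / 2**30:.2f} GiB")  # 2.00 GiB
    print(f"INT8 KV cache: {int8 / 2**30:.2f} GiB")  # 1.00 GiB

    key_vector = [0.12, -0.53, 0.98, -0.07]
    codes, scale = quantize_int8(key_vector)
    print(codes, [round(r, 3) for r in dequantize_int8(codes, scale)])
```

Halving the bytes per element halves the cache. In practice the per-vector scales add a small overhead that this estimate ignores, and the quantization error has to stay small enough not to affect model accuracy.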

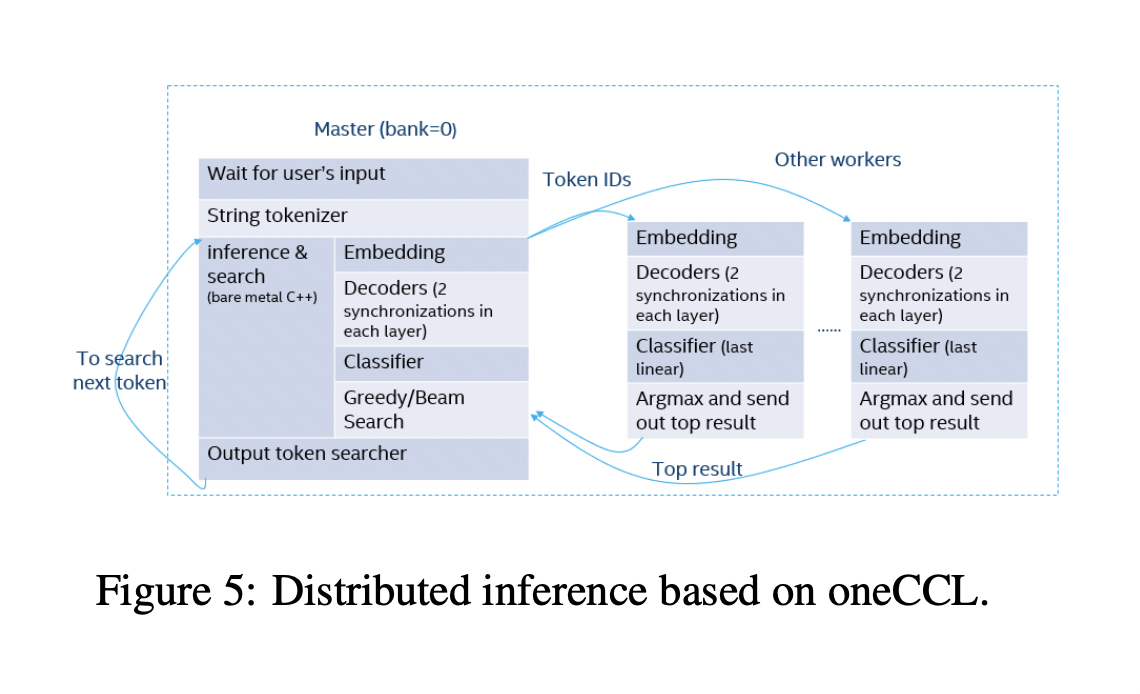

Additionally, the researchers propose a distributed inference optimization built on the oneAPI Collective Communications Library (oneCCL). By enabling efficient communication among multiple CPUs, this approach improves the scalability and performance of LLM inference when a model is spread across several processors.
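As a rough illustration of why collective communication matters, the following single-process Python sketch simulates row-parallel tensor parallelism: each hypothetical rank multiplies its slice of a weight matrix, and an all-reduce sums the partial results. It does not call oneCCL; `all_reduce_sum` is a plain Python stand-in for the collective, and all function names here are hypothetical.

```python
# Single-process simulation of row-parallel tensor parallelism.
# In a real deployment each "rank" would be a separate CPU socket or node and
# the all-reduce would be a collective from a library such as oneCCL; here it
# is an ordinary Python function (an assumption for illustration only).

Matrix = list[list[float]]


def matmul(x: list[float], w: Matrix) -> list[float]:
    """Vector-matrix product: x has len(w) entries, the result has len(w[0])."""
    return [sum(x[i] * w[i][j] for i in range(len(w))) for j in range(len(w[0]))]


def shard_rows(x: list[float], w: Matrix, world_size: int):
    """Split the input vector and the weight rows evenly across ranks."""
    assert len(w) % world_size == 0, "rows must divide evenly across ranks"
    chunk = len(w) // world_size
    return [(x[r * chunk:(r + 1) * chunk], w[r * chunk:(r + 1) * chunk])
            for r in range(world_size)]


def all_reduce_sum(partials: list[list[float]]) -> list[float]:
    """Stand-in for a collective all-reduce: every rank ends up with the sum."""
    return [sum(vals) for vals in zip(*partials)]


def row_parallel_linear(x: list[float], w: Matrix, world_size: int) -> list[float]:
    # Each rank multiplies only its slice, so per-rank weight memory and
    # compute shrink to roughly 1/world_size of the full layer.
    partials = [matmul(x_r, w_r) for x_r, w_r in shard_rows(x, w, world_size)]
    # A single communication step combines the partial results.
    return all_reduce_sum(partials)


if __name__ == "__main__":
    x = [1.0, 2.0, 3.0, 4.0]
    w = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0], [2.0, -1.0]]
    print(matmul(x, w))                  # [12.0, 1.0]
    print(row_parallel_linear(x, w, 2))  # [12.0, 1.0]
```

Because every rank needs the summed result before the next layer can run, the all-reduce sits on the critical path of each forward pass, which is why an efficient communication library matters for multi-CPU scaling.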

The team also provides CPU-specific LLM optimizations, such as SlimAttention, that are compatible with popular models and include dedicated optimizations for individual LLM operations and layers.
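The summary does not describe how SlimAttention works, so the Python sketch below does not reproduce it. Instead, as an assumption, it shows a generic and related memory-saving idea: splitting single-head attention along the query dimension so the full score matrix is never held in memory at once. `full_attention` and `query_blocked_attention` are hypothetical names used only for this illustration.

```python
# Generic query-blocked attention: an illustrative stand-in, NOT SlimAttention.
import math

Matrix = list[list[float]]


def softmax(row: list[float]) -> list[float]:
    m = max(row)  # subtract the row max for numerical stability
    exps = [math.exp(s - m) for s in row]
    total = sum(exps)
    return [e / total for e in exps]


def full_attention(q: Matrix, k: Matrix, v: Matrix) -> Matrix:
    """Single-head scaled dot-product attention.

    Builds the whole len(q) x len(k) score matrix before applying softmax.
    """
    scale = 1.0 / math.sqrt(len(k[0]))
    scores = [[scale * sum(a * b for a, b in zip(qi, kj)) for kj in k] for qi in q]
    probs = [softmax(row) for row in scores]
    # Each output row is the probability-weighted sum of the value rows.
    return [[sum(p * vj[d] for p, vj in zip(prow, v)) for d in range(len(v[0]))]
            for prow in probs]


def query_blocked_attention(q: Matrix, k: Matrix, v: Matrix, block_size: int) -> Matrix:
    """Same result, but only a block_size x len(k) score buffer exists at a time."""
    out: Matrix = []
    for start in range(0, len(q), block_size):
        # Score rows are independent, so query blocks can be processed one
        # after another (or distributed across CPU cores).
        out.extend(full_attention(q[start:start + block_size], k, v))
    return out


if __name__ == "__main__":
    q = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]]
    k = [[1.0, 0.0], [0.0, 1.0]]
    v = [[1.0, 2.0], [3.0, 4.0]]
    print(full_attention(q, k, v))
    print(query_blocked_attention(q, k, v, block_size=1))
```

Peak memory for attention scores drops from len(q) x len(k) to block_size x len(k), at the cost of looping over query blocks.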

Together, these optimizations aim to accelerate LLM inference on CPUs, making LLMs more affordable and accessible for deployment in low-resource settings.

For more details, see the paper and the GitHub repository.


AI Solutions for Business Transformation

If you want to evolve your company with AI and stay competitive, consider leveraging the techniques for optimizing Large Language Models (LLMs) on CPUs for enhanced inference and efficiency.

Discover how AI can redefine the way you work: identify automation opportunities, define KPIs, select AI solutions, and implement them gradually. Connect with us at hello@itinai.com for AI KPI management advice and continuous insights into leveraging AI.

Explore how AI can redefine your sales processes and customer engagement by visiting itinai.com.