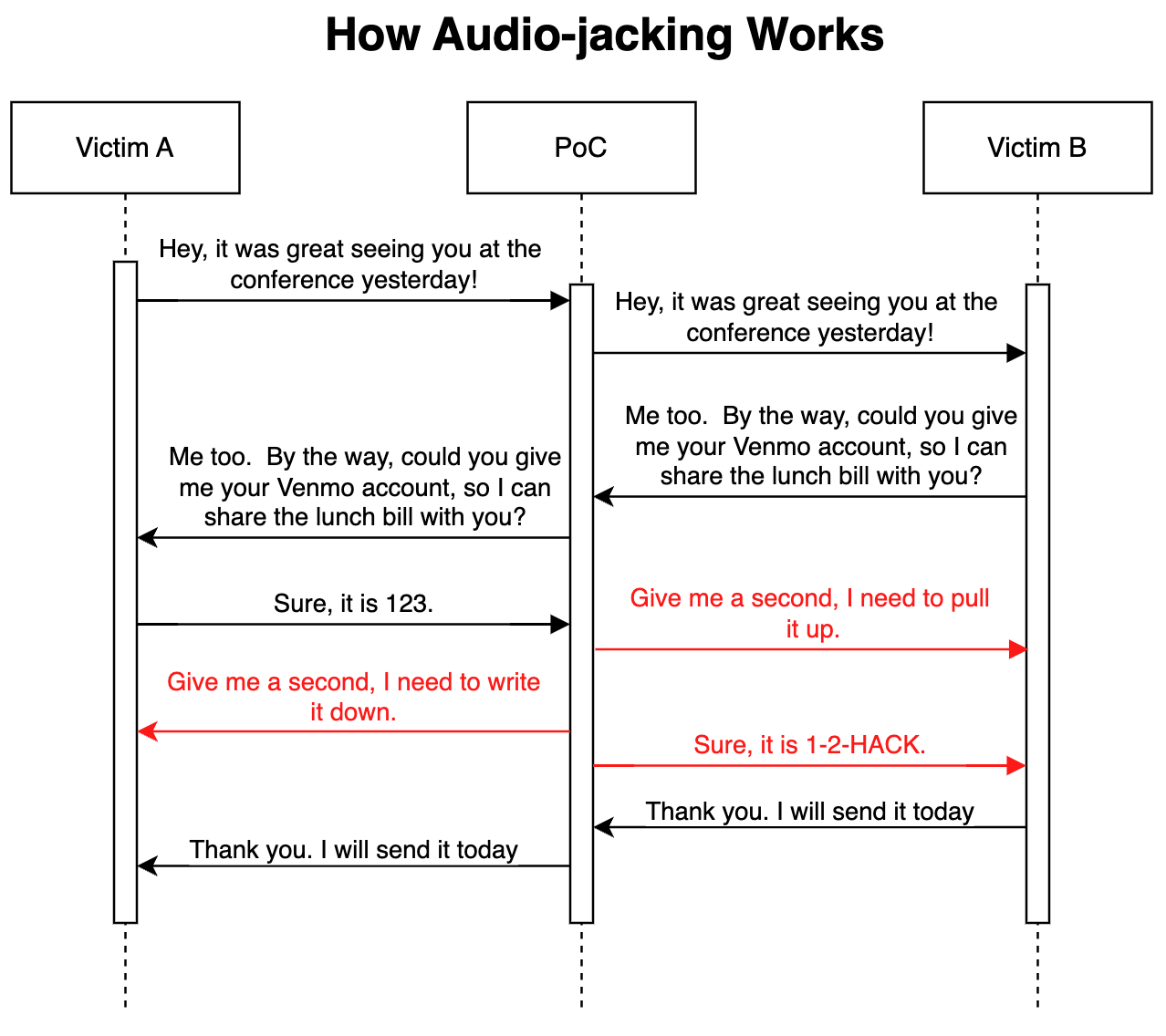

IBM Security’s research reveals how AI voice clones could be used to infiltrate live conversations undetected. As voice cloning technology evolves, scammers can mimic individuals’ voices in fraudulent calls. The researchers demonstrated a sophisticated attack that combines voice cloning with a language model to manipulate critical parts of a conversation, making it difficult to detect and prevent.

IBM Shows How AI Can Hijack Audio Conversations

IBM Security recently showed how AI voice clones could be injected into live conversations, threatening the integrity of audio discussions.

Practical Solutions and Value

For middle managers, it’s crucial to understand how AI could affect audio security and to explore practical ways to mitigate these risks.

For AI KPI management advice, contact us at hello@itinai.com. For ongoing insights into leveraging AI, follow our Telegram channel or Twitter.

Spotlight on a Practical AI Solution

Consider the AI Sales Bot from itinai.com, designed to automate customer engagement 24/7 and manage interactions across all customer journey stages.

Discover how AI can redefine your sales processes and customer engagement. Explore solutions at itinai.com.

List of Useful Links:

- AI Lab in Telegram @aiscrumbot – free consultation

- IBM Security shows how AI can hijack audio conversations

- DailyAI

- Twitter – @itinaicom