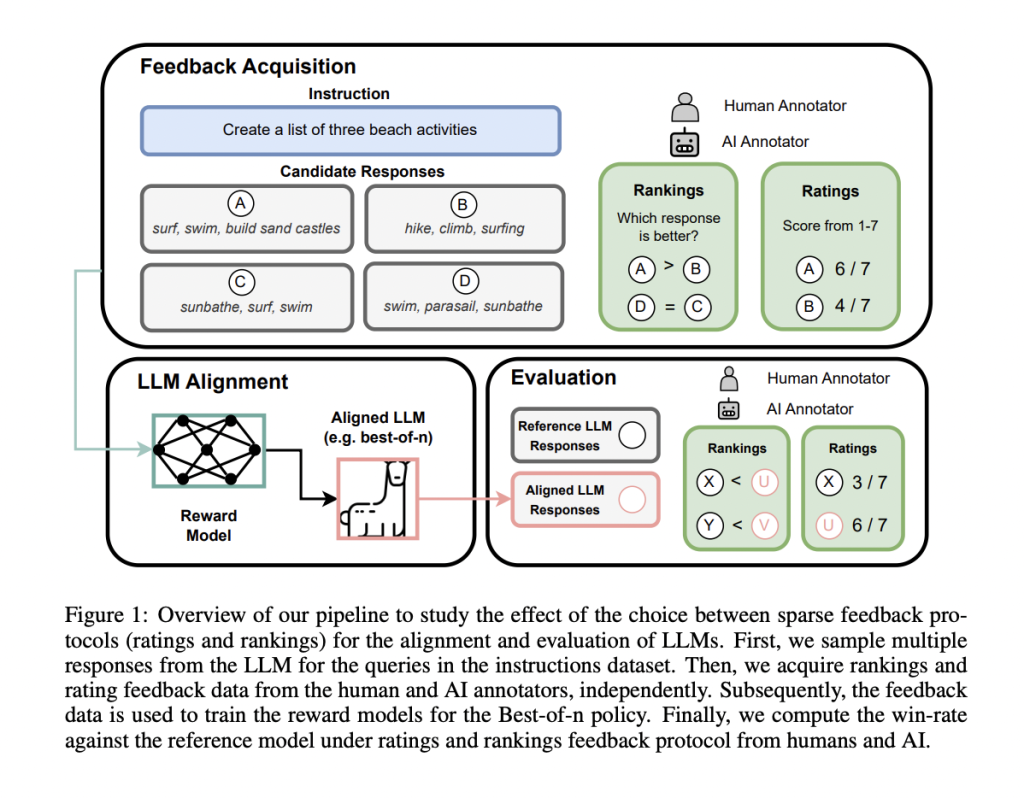

The study examines how the choice of feedback protocol affects the alignment of large language models (LLMs) with human values. It compares the ratings and rankings protocols for feedback acquisition and highlights an inconsistency between them. The research shows that feedback acquisition influences several stages of the alignment pipeline and stresses the need for careful data curation within sparse feedback protocols. The paper also discusses the implications for model evaluation and suggests exploring richer forms of feedback for improved alignment.


Decoding the Impact of Feedback Protocols on Large Language Model Alignment: Insights from Ratings vs. Rankings

Introduction

Aligning large language models (LLMs) with human values is crucial for developing next-generation text-based assistants. The alignment process involves feedback acquisition, alignment algorithms, and model evaluation. This study delves into the nuances of feedback acquisition, comparing ratings and rankings protocols and shedding light on a significant consistency challenge.

Understanding Feedback Protocols: Ratings vs. Rankings

Ratings assign an absolute score to a single response on a predefined scale, while rankings ask annotators to select their preferred response from a pair. The study analyzes how these two protocols affect LLM alignment and finds a consistency problem in both human and AI feedback: the preference implied by the ratings of two responses does not always match the preference chosen directly under the rankings protocol.
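To make the distinction above concrete, here is a minimal Python sketch of how a pair of ratings can be turned into an implied preference and compared against a directly collected ranking. The record layout (`rating_a`, `rating_b`, `ranking`), the function names, and the toy data are illustrative assumptions, not the study's actual data format or code.

```python
def preference_from_ratings(rating_a: float, rating_b: float) -> str:
    """Turn two absolute ratings into an implied pairwise preference."""
    if rating_a > rating_b:
        return "A"
    if rating_b > rating_a:
        return "B"
    return "tie"


def consistency_rate(records: list[dict]) -> float:
    """Fraction of pairs where the rating-implied preference equals the ranking."""
    if not records:
        raise ValueError("records must not be empty")
    matches = sum(
        preference_from_ratings(r["rating_a"], r["rating_b"]) == r["ranking"]
        for r in records
    )
    return matches / len(records)


# Hypothetical toy data: each record holds ratings for responses A and B
# plus the preference collected separately under the rankings protocol.
toy_records = [
    {"rating_a": 5, "rating_b": 3, "ranking": "A"},  # consistent
    {"rating_a": 4, "rating_b": 4, "ranking": "B"},  # tie vs. B: inconsistent
    {"rating_a": 2, "rating_b": 6, "ranking": "B"},  # consistent
    {"rating_a": 6, "rating_b": 3, "ranking": "B"},  # inconsistent
]

rate = consistency_rate(toy_records)
```

How ties are treated is a design choice: this sketch counts a rating tie as inconsistent unless the ranking is also recorded as a tie.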

Feedback Data Acquisition

The study collects feedback on a diverse set of instructions and uses GPT-3.5-Turbo to gather ratings and rankings feedback at scale. An agreement analysis shows reasonable agreement rates between human and AI feedback.
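An agreement analysis of this kind can be sketched in a few lines of Python. The example assumes that human and AI annotators labeled the same response pairs, in the same order, with one label per pair; the function name and the sample labels are hypothetical and do not reproduce the study's data.

```python
def agreement_rate(human_labels: list[str], ai_labels: list[str]) -> float:
    """Share of items on which the human and AI annotators gave the same label."""
    if len(human_labels) != len(ai_labels):
        raise ValueError("both annotators must label the same items")
    if not human_labels:
        raise ValueError("label lists must not be empty")
    same = sum(h == a for h, a in zip(human_labels, ai_labels))
    return same / len(human_labels)


# Hypothetical rankings labels for five response pairs.
human = ["A", "B", "A", "tie", "B"]
ai = ["A", "B", "B", "tie", "A"]

human_ai_agreement = agreement_rate(human, ai)
```

The same function works for ratings if an exact score match is the intended notion of agreement; a tolerance-based comparison would need a different check.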

Impact on Alignment and Model Evaluation

The study trains reward models on ratings and rankings feedback and evaluates Best-of-n policies, which sample n responses from the base LLM and return the one the reward model scores highest. The evaluation finds that Best-of-n policies, especially those built on rankings feedback, outperform the base LLM and show improved alignment.
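The Best-of-n selection step can be illustrated with a short Python sketch. Here `toy_reward` is a hypothetical stand-in for a trained reward model (it only counts words shared with the instruction), and the candidate list stands in for n samples from a base LLM; neither reflects the study's actual models.

```python
from typing import Callable, Sequence


def best_of_n(
    instruction: str,
    candidates: Sequence[str],
    reward_fn: Callable[[str, str], float],
) -> str:
    """Return the candidate response that the reward function scores highest."""
    if not candidates:
        raise ValueError("need at least one candidate response")
    return max(candidates, key=lambda response: reward_fn(instruction, response))


def toy_reward(instruction: str, response: str) -> float:
    """Hypothetical reward: number of response words that appear in the instruction."""
    instruction_words = set(instruction.lower().split())
    return float(sum(word in instruction_words for word in response.lower().split()))


instruction = "explain gradient descent"
# Stand-ins for n = 3 responses sampled from a base LLM.
candidates = [
    "I do not know.",
    "Gradient descent updates parameters step by step.",
    "Descent.",
]

chosen = best_of_n(instruction, candidates, toy_reward)
```

In a real pipeline, `reward_fn` would be a reward model trained on either ratings or rankings feedback, so the same selection code yields two different policies depending on which model is plugged in.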

Conclusion

The study underscores the importance of meticulous data curation within sparse feedback protocols and highlights the potential repercussions of feedback protocol choices on evaluation outcomes. Future research may delve into the cognitive aspects of the identified consistency problem and explore richer forms of feedback for improved alignment in diverse application domains.



List of Useful Links:


- Decoding the Impact of Feedback Protocols on Large Language Model Alignment: Insights from Ratings vs. Rankings

- MarkTechPost
