Genetic algorithms are presented as an efficient tool for feature selection in large datasets, minimizing the objective function through population-based evolution and selection. A comparison with other methods shows both the potential and the computational demands of genetic algorithms. See the full article for more details.


Efficient Feature Selection via Genetic Algorithms

Using evolutionary algorithms for fast feature selection with large datasets

This is the final part of a two-part series about feature selection.

Brief recap: when fitting a model to a dataset, you may want to select a subset of the features rather than use all of them, for various reasons. But even with a clear objective function to score each combination of features, the search may take a long time when the number of features N is very large, because there are 2^N possible combinations. Brute-force search generally does not work beyond a few dozen features, so heuristic algorithms are needed to search more efficiently.
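To put the brute-force limit in numbers: each feature is either in or out, so N features give 2^N candidate subsets. The sketch below assumes, purely for illustration, that one objective evaluation takes 1 ms on a single core; the real cost depends on the dataset and model.

```python
# Back-of-the-envelope cost of brute-force feature selection.
# ASSUMPTION: 1 ms per objective evaluation on one core (illustrative only).
SECONDS_PER_EVAL = 1e-3
SECONDS_PER_YEAR = 365.25 * 24 * 3600


def n_candidate_subsets(n_features: int) -> int:
    """Every N-length 0/1 vector, i.e. every subset (including the empty one)."""
    return 2 ** n_features


def brute_force_years(n_features: int, seconds_per_eval: float = SECONDS_PER_EVAL) -> float:
    """Years needed to score every subset once, one evaluation at a time."""
    return n_candidate_subsets(n_features) * seconds_per_eval / SECONDS_PER_YEAR


for n in (10, 20, 30, 40, 50):
    print(f"N={n:>2}: {n_candidate_subsets(n):>22,} subsets, "
          f"~{brute_force_years(n):.3g} years")
```

Under that assumption, N = 30 already needs about 12 days of compute and N = 50 needs tens of thousands of years; running on more cores only divides these numbers by the core count.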

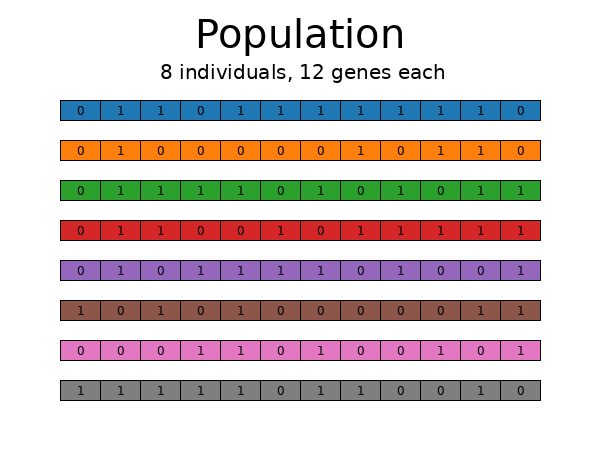

If you have N features, what you’re looking for is an N-length vector [1, 1, 0, 0, 0, 1, …] with values from {0, 1}. Each vector component corresponds to a feature. 0 means the feature is rejected, 1 means the feature is selected. You need to find the vector that minimizes the cost / objective function you’re using.
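A minimal sketch of this representation, assuming six hypothetical features and a made-up cost function (`toy_cost`) standing in for the real model-based objective. With N this small, every mask can be scored, so the minimizing vector can be found exhaustively; that is exactly what stops being possible as N grows.

```python
import itertools
from typing import Sequence

# Hypothetical feature names; a real dataset would supply its own columns.
FEATURE_NAMES = [f"feature_{i}" for i in range(6)]


def selected_features(mask: Sequence[int], names: Sequence[str]) -> list[str]:
    """Decode a 0/1 mask: 1 keeps the feature, 0 rejects it."""
    if len(mask) != len(names):
        raise ValueError("the mask must have one component per feature")
    return [name for bit, name in zip(mask, names) if bit == 1]


def toy_cost(mask: Sequence[int]) -> float:
    """Made-up cost, lower is better. Pretends features 0, 1 and 4 carry signal
    and charges a small penalty for every selected feature."""
    useful = (0, 1, 4)
    return -sum(mask[i] for i in useful) + 0.1 * sum(mask)


# With N = 6 there are only 2**6 = 64 masks, so exhaustive search is trivial.
all_masks = itertools.product((0, 1), repeat=len(FEATURE_NAMES))
best_mask = min(all_masks, key=toy_cost)
print(best_mask, selected_features(best_mask, FEATURE_NAMES))
# (1, 1, 0, 0, 1, 0) ['feature_0', 'feature_1', 'feature_4']
```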

In this article, we will look at another evolutionary technique: genetic algorithms. The context (dataset, model, objective) remains the same.

GA — Genetic Algorithms

Genetic algorithms are inspired by biological evolution and natural selection. In nature, living beings are (loosely speaking) selected for the genes (traits) that facilitate survival and reproductive success, in the context of the environment where they live.

Here’s GA in a nutshell. Start by generating a population of individuals (vectors), each vector of length N. The vector component values (genes) are randomly chosen from {0, 1}. After the population is created, evaluate each individual via the objective function. Now perform selection: keep the individuals with the best objective values, and discard those with the worst values. Once the best individuals have been selected, and the less fit ones have been discarded, it’s time to introduce variation in the gene pool via two techniques: crossover and mutation.
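The cycle described above can be sketched from scratch in plain Python. This is not the author's code: truncation selection (keep the best half), one-point crossover, and bit-flip mutation are one common set of choices among many, and the `TARGET`-based `cost` is a hypothetical stand-in for a real objective that would fit the model on the selected features.

```python
import random

N_FEATURES = 20
POP_SIZE = 50

# Hypothetical "ideal" mask, used only to build a toy cost. A real objective
# would fit the model on the selected features and return its cost instead.
TARGET = [1 if i % 3 == 0 else 0 for i in range(N_FEATURES)]


def cost(ind):
    """Toy cost, lower is better: number of genes that differ from TARGET."""
    return sum(g != t for g, t in zip(ind, TARGET))


def random_individual(n):
    """A random N-length vector with genes drawn from {0, 1}."""
    return [random.randint(0, 1) for _ in range(n)]


def one_point_crossover(a, b):
    """Swap the tails of two parents after a random cut point."""
    cut = random.randint(1, len(a) - 1)
    return a[:cut] + b[cut:], b[:cut] + a[cut:]


def bit_flip_mutation(ind, p):
    """Flip each gene independently with probability p."""
    return [1 - g if random.random() < p else g for g in ind]


def next_generation(pop, keep_frac=0.5, p_mut=0.05):
    """Evaluate, keep the best fraction, refill with mutated offspring."""
    ranked = sorted(pop, key=cost)                           # best (lowest cost) first
    survivors = ranked[: max(2, int(len(pop) * keep_frac))]  # discard the worst
    children = []
    while len(survivors) + len(children) < len(pop):
        a, b = random.sample(survivors, 2)                   # pick two parents
        for child in one_point_crossover(a, b):
            children.append(bit_flip_mutation(child, p_mut))
    return survivors + children[: len(pop) - len(survivors)]


random.seed(1)
population = [random_individual(N_FEATURES) for _ in range(POP_SIZE)]
initial_best = min(cost(ind) for ind in population)
for generation in range(30):
    population = next_generation(population)
best = min(population, key=cost)
print("best cost before:", initial_best, "after 30 generations:", cost(best))
```

Because the survivors are carried over unchanged, the best cost in the population can never get worse from one generation to the next (a form of elitism).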

After all that, the algorithm loops back: the individuals are again evaluated via the objective function, selection occurs, then crossover, mutation, etc. Various stopping criteria can be used: the loop may break if the objective function stops improving for some number of generations. Or you may set a hard stop for the total number of generations evaluated. Regardless, the individuals with the best objective values should be considered to be the “solutions” to the problem.
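Both stopping criteria can be combined in a small driver loop. In this sketch, `run_until_stalled` and its `step` callback are hypothetical names: `step()` is assumed to run one generation and return the best objective value seen in it, and `patience` is the number of generations without improvement tolerated before stopping.

```python
from typing import Callable, Tuple


def run_until_stalled(step: Callable[[], float], max_generations: int,
                      patience: int) -> Tuple[float, int]:
    """Run step() once per generation until a hard cap or a stall.

    Stops after max_generations (hard stop), or earlier if the best cost has
    not improved for `patience` consecutive generations.
    Returns (best_cost, generations_run).
    """
    best = float("inf")
    stalled = 0
    gen = 0
    for gen in range(1, max_generations + 1):
        current = step()
        if current < best:      # improvement: remember it, reset the counter
            best, stalled = current, 0
        else:                   # no improvement this generation
            stalled += 1
        if stalled >= patience:
            break
    return best, gen


# Usage with a scripted sequence of per-generation best costs:
costs = iter([10, 8, 7, 7, 7, 7, 6])
print(run_until_stalled(lambda: next(costs), max_generations=100, patience=3))  # (7, 6)
```

Here the run stops at generation 6 after three generations stuck at 7, so the later improvement to 6 is never seen; choosing `patience` trades run time against that risk.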

GA has several hyperparameters you can tune: population size, mutation probabilities, crossover probability, selection strategies, etc.

GA for feature selection, in code

Here’s a simplified version of GA code that can be used for feature selection. It uses the deap library, which is very powerful, but its learning curve may be steep. This simple version, however, should be clear enough.

The code creates the objects that define an individual and the whole population, along with the strategies used for evaluation (objective function), crossover / mating, mutation, and selection. It starts with a population of 300 individuals, and then calls eaSimple() (a canned sequence of crossover, mutation, selection) which runs for only 10 generations, for simplicity.

This simple code is easy to understand, but inefficient. The notebook in the repository contains a more complex, optimized version of the GA code, which I am not going to quote here. Running that version for 1000 generations produces the GA results reported in the comparison below.

Comparison between methods

We’ve tried three different techniques: SFS, CMA-ES, and GA. How do they compare in terms of the best objective found, and the time it took to find it?

These tests were performed on an AMD Ryzen 7 5800X3D machine (8 cores / 16 threads) running Ubuntu 22.04 and Python 3.11.7. SFS and GA run the objective function via a multiprocessing pool with 16 workers. CMA-ES is single-process: running it multi-process did not seem to provide significant improvements, but I’m sure that could change if more work were dedicated to making the algorithm parallel.

These are the run times (lower is better). For SFS it’s the total run time; for CMA-ES and GA it’s the time to the best solution.

SFS: 44.850 sec

GA: 157.604 sec

CMA-ES: 46.912 sec

The number of times the objective function was invoked:

SFS: 22791

GA: 600525

CMA-ES: 20000

The best values found for the objective function (lower is better):

SFS: 33708.9860

GA: 33705.5696

CMA-ES: 33703.0705
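A few lines of arithmetic on the reported figures help put the differences in scale. The snippet only reuses the numbers listed above; it measures nothing new.

```python
# Reported results from the comparison above (lower objective is better).
best_objective = {"SFS": 33708.9860, "GA": 33705.5696, "CMA-ES": 33703.0705}
evaluations = {"SFS": 22791, "GA": 600525, "CMA-ES": 20000}

best_method = min(best_objective, key=best_objective.get)
for method, value in best_objective.items():
    gap = value - best_objective[best_method]  # absolute gap to the best method
    rel = gap / best_objective[best_method]    # same gap as a fraction of the best value
    print(f"{method:>6}: gap to {best_method} = {gap:.4f} ({rel:.4%})")

# How many more objective evaluations the GA used than the other methods.
for other in ("SFS", "CMA-ES"):
    ratio = evaluations["GA"] / evaluations[other]
    print(f"GA used {ratio:.1f}x as many evaluations as {other}")
```

The spread between the best and worst method is under 6 units, less than 0.02% of the objective value, while the GA needed roughly 26 to 30 times as many objective evaluations as SFS and CMA-ES.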

CMA-ES, running in a single process, found the best objective value of all. Its run time was on par with SFS, and it invoked the objective function only 20k times, the fewest of all methods. It could probably be improved even more.

GA beat SFS on the objective value, running the objective function on all available CPU cores, but it was by far the slowest. It invoked the objective function more than an order of magnitude more often than the other methods. Further hyperparameter tuning may improve the outcome.

SFS is quick (running on all CPU cores), but the objective value it reaches is modest. It’s also by far the simplest algorithm.

If you just want a quick estimate of the best feature set, using a simple algorithm, SFS is not too bad.

On the other hand, if you want the best possible objective value, CMA-ES seems to be the top choice.



List of Useful Links:

- Efficient feature selection via genetic algorithms

- Towards Data Science – Medium