Recent rapid advances in natural language processing have generated excitement about the prospect of human-level intelligence. However, neural approaches have fundamental blind spots and limitations, particularly in systematic reasoning tasks. Integrating structured knowledge representations such as knowledge graphs with large language models (LLMs) shows promise in mitigating these deficits and in facilitating context-aware, disciplined reasoning. Combining neural capacities with structured compositionality and causal constraints promises more robust and trustworthy systems that still reason fluidly.

The Reasoning Gap

An emerging perspective explains many of the logical contradictions and lack of systematic generalization exhibited by LLMs as arising from a fundamental “reasoning gap” — the inability to spontaneously chain facts and inferences through intermediate reasoning steps.

Humans possess an intuitive capacity to make conceptual leaps by using structured background knowledge and directing attention towards relevant pathways. We leverage existing schemas and causal models that link concepts to stitch together coherent narratives. For example, humans can reliably determine the climate of a country’s capital by using the capital relation to connect two isolated facts: Paris is the capital of France, and France has a temperate climate.

However, neural networks like LLMs accumulate only statistical associations between terms that frequently co-occur in text corpora. Their knowledge remains implicit and unstructured. Without an explicit representation of higher-order semantic relationships between concepts, they struggle to bridge concepts never seen directly linked during pre-training. Questions requiring reasoning through intermediate steps reveal this brittleness.

New benchmark tests specifically assess systematicity: whether models can combine known building blocks in novel ways. While LLMs achieve high accuracy when the concepts they must relate already co-occur in training data, performance drops significantly when related concepts appear only separately. The models fail to chain together their isolated statistical knowledge, unlike humans, who can link concepts through indirect relationships.

This manifests empirically in LLMs’ inability to provide consistent logical narratives when queried through incremental prompt elaborations. Slight perturbations to explore new directions trigger unpredictable model failures as learned statistical associations break down. Without structured representations or programmatic operators, LLMs cannot sustain coherent, goal-oriented reasoning chains — revealing deficits in compositional generalization.

The “reasoning gap” helps explain counterintuitive LLM behavior. Their knowledge, encoded across billions of parameters through deep learning from surface statistical patterns alone, lacks frameworks for disciplined, interpretable logical reasoning that humans innately employ for conceptual consistency and exploration. This illuminates priorities for hybrid neurosymbolic approaches.

Enhancing Reasoning with Knowledge Graphs

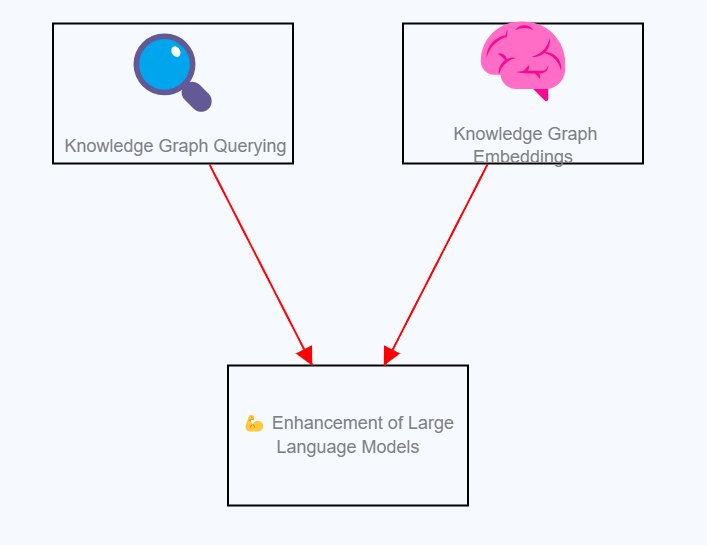

Knowledge graphs offer a promising method for overcoming the “reasoning gap” plaguing modern large language models (LLMs). By explicitly modeling concepts as nodes and relationships as edges, knowledge graphs provide structured symbolic representations that can augment the flexible statistical knowledge within LLMs.

Establishing explanatory connections between concepts empowers more systematic, interpretable reasoning across distant domains. LLMs struggle to link disparate concepts purely through learned data patterns. But knowledge graphs can effectively relate concepts not directly co-occurring in text corpora by providing relevant intermediate nodes and relationships. This scaffolding bridges gaps in statistical knowledge, enabling logical chaining.
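As a minimal sketch of this kind of chaining, the snippet below stores a toy graph as (subject, relation, object) triples and follows a sequence of relations from a starting node. The triples, relation names, and helper functions are illustrative assumptions, not part of any particular graph database.

```python
from collections import defaultdict

# Hypothetical toy graph: (subject, relation, object) triples.
TRIPLES = [
    ("Paris", "capital_of", "France"),
    ("France", "has_climate", "temperate"),
    ("Canberra", "capital_of", "Australia"),
]

def build_index(triples):
    """Index triples by (subject, relation) so each hop is a dictionary lookup."""
    index = defaultdict(list)
    for subject, relation, obj in triples:
        index[(subject, relation)].append(obj)
    return index

def follow_path(index, start, relations):
    """Follow a sequence of relations from `start`, returning every complete path."""
    paths = [[start]]
    for relation in relations:
        next_paths = []
        for path in paths:
            for obj in index.get((path[-1], relation), []):
                next_paths.append(path + [obj])
        paths = next_paths
    return paths

index = build_index(TRIPLES)
paths = follow_path(index, "Paris", ["capital_of", "has_climate"])
print(paths)  # [['Paris', 'France', 'temperate']]
```

The returned path doubles as an explanation: every hop is an explicit edge that can be inspected, and a missing edge (no climate recorded for Australia here) yields no path rather than a guess.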

Such knowledge graphs also increase transparency and trust in LLM-based inference. Requiring models to display full reasoning chains over explicit graph relations mitigates risks from unprincipled statistical hallucinations. Exposing the graph paths grounds statistical outputs in validated connections.

Constructing clean interfaces between innately statistical LLMs and structured causal representations shows promise for overcoming today’s brittleness. Combining neural knowledge breadth with external knowledge depth can nurture the development of AI systems that learn and reason both flexibly and systematically.

Knowledge Graph Querying and Graph Algorithms

Knowledge graph querying and graph algorithms are powerful tools for extracting and analyzing complex relationships from large datasets. Here’s how they work and what they can achieve:

Knowledge Graph Querying:

Knowledge graphs organize information as entities (like books, people, or concepts) and relationships (like authorship, kinship, or thematic connection). Query languages like SPARQL and Cypher enable the formulation of queries to extract specific information from these graphs. For example, a Cypher query can find books related to “Artificial Intelligence” by matching book nodes connected to relevant topic nodes.

Graph Algorithms:

Beyond querying, graph algorithms can analyze these structures in more profound ways. Some typical graph algorithms include:

- Pathfinding Algorithms (e.g., Dijkstra’s, A*): Find the shortest path between two nodes, useful in route planning and network analysis.

- Community Detection Algorithms (e.g., Louvain Method): Identify clusters or communities within graphs, helping in social network analysis and market segmentation.

- Centrality Measures (e.g., PageRank, Betweenness Centrality): Determine the importance of different nodes in a network, applicable in analyzing influence in social networks or key infrastructure in transportation networks.

- Recommendation Systems: By analyzing user-item graphs, these systems can make personalized recommendations based on past interactions.
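Two of the algorithm families listed above can be sketched in a few lines of plain Python. This is an illustrative sketch over a made-up four-node graph, not a production implementation: `dijkstra` assumes non-negative edge weights, and `pagerank` is a simple power iteration that ignores weights and spreads the rank of dangling nodes evenly.

```python
import heapq

def dijkstra(graph, source):
    """Shortest distances from `source` in a graph with non-negative edge weights."""
    distances = {source: 0.0}
    queue = [(0.0, source)]
    while queue:
        dist, node = heapq.heappop(queue)
        if dist > distances.get(node, float("inf")):
            continue  # stale queue entry, a shorter route was already found
        for neighbor, weight in graph.get(node, {}).items():
            candidate = dist + weight
            if candidate < distances.get(neighbor, float("inf")):
                distances[neighbor] = candidate
                heapq.heappush(queue, (candidate, neighbor))
    return distances

def pagerank(graph, damping=0.85, iterations=50):
    """Power-iteration PageRank; dangling-node rank is redistributed evenly."""
    nodes = set(graph) | {n for targets in graph.values() for n in targets}
    count = len(nodes)
    rank = {node: 1.0 / count for node in nodes}
    for _ in range(iterations):
        dangling = sum(rank[n] for n in nodes if not graph.get(n))
        base = (1.0 - damping) / count + damping * dangling / count
        new_rank = {node: base for node in nodes}
        for node, targets in graph.items():
            for target in targets:
                new_rank[target] += damping * rank[node] / len(targets)
        rank = new_rank
    return rank

# Hypothetical weighted, directed graph: node -> {neighbor: edge weight}.
GRAPH = {
    "A": {"B": 1.0, "C": 4.0},
    "B": {"C": 2.0, "D": 5.0},
    "C": {"D": 1.0},
    "D": {},
}

distances = dijkstra(GRAPH, "A")
ranks = pagerank(GRAPH)
print(distances)  # {'A': 0.0, 'B': 1.0, 'C': 3.0, 'D': 4.0}
```

In this toy graph, node D collects links from both B and C, so it ends up with the highest PageRank score.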

Large Language Models (LLMs) can generate queries for knowledge graphs based on natural language input. While they excel in understanding and generating human-like text, their statistical nature means they’re less adept at structured logical reasoning. Therefore, pairing them with knowledge graphs and structured querying interfaces can leverage the strengths of both: the LLM for understanding and contextualizing user input and the knowledge graph for precise, logical data retrieval.

Incorporating graph algorithms into the mix can further enhance this synergy. For instance, an LLM could suggest running a community detection algorithm on a social network graph to isolate a specific interest group, followed by a centrality measure to identify influential figures within it. However, the challenge lies in integrating these disparate systems in a way that is both efficient and interpretable.
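One way to picture the pairing of an LLM with a structured query interface is a thin wrapper that builds a schema-aware prompt and checks the generated query before it reaches the graph. The sketch below is a rough illustration under stated assumptions: `llm` is any callable that maps a prompt string to text, `fake_llm` is a stand-in that returns a canned query, and the schema string and keyword filter are hypothetical. The keyword check is deliberately coarse and is not a substitute for database-level permissions.

```python
import re

# Hypothetical schema description; a real system would derive this from the graph.
SCHEMA = "(:Book)-[:DISCUSSES]->(:Topic)-[:OF_TYPE]->(:Concept {name})"

# Coarse filter for Cypher clauses that modify data.
WRITE_CLAUSES = re.compile(r"\b(CREATE|MERGE|DELETE|DETACH|SET|REMOVE|DROP)\b", re.IGNORECASE)

def build_prompt(question, schema=SCHEMA):
    """Assemble a text-to-Cypher prompt that pins the model to a known schema."""
    return (
        "Translate the question into a single read-only Cypher query.\n"
        f"Graph schema: {schema}\n"
        f"Question: {question}\n"
        "Cypher:"
    )

def is_read_only(query):
    """Return False if the generated query contains a write clause."""
    return WRITE_CLAUSES.search(query) is None

def question_to_cypher(question, llm):
    """`llm` is assumed to be a callable taking a prompt and returning generated text."""
    query = llm(build_prompt(question)).strip()
    if not is_read_only(query):
        raise ValueError("generated query is not read-only")
    return query

def fake_llm(prompt):
    # Stand-in for a real model call; returns a canned query for the demo.
    return (
        "MATCH (b:Book)-[:DISCUSSES]->(t:Topic)-[:OF_TYPE]->(c:Concept) "
        'WHERE c.name = "Artificial Intelligence" RETURN b'
    )

query = question_to_cypher("Which books discuss artificial intelligence?", fake_llm)
print(query)
```

Keeping the prompt builder and the validator separate from the model call makes each piece testable on its own, which helps with the interpretability concern raised above.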

Knowledge Graph Embeddings

Knowledge graph embeddings encode entities and relations as dense vector representations. These vectors can be dynamically integrated into LLMs through fused models that combine graph and token representations.

For example, a cross-attention mechanism can contextualize language model token embeddings by matching them against retrieved graph embeddings. This injects relevant external knowledge.

Mathematically fusing these complementary vectors grounds the model, while allowing gradient flows across both components. The LLM inherits relational patterns, improving reasoning.
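The cross-attention idea can be sketched without any deep learning framework. The snippet below is a simplified, single-head version in plain Python: token vectors act as queries, retrieved graph embeddings act as keys and values, and the attended context is added back to each token as a residual. The learned projection matrices of a real model are omitted, the vectors are made up, and gradient flow is not shown since nothing here is trained.

```python
import math

def softmax(scores):
    """Numerically stable softmax over a list of floats."""
    peak = max(scores)
    exps = [math.exp(s - peak) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]

def dot(u, v):
    return sum(a * b for a, b in zip(u, v))

def cross_attend(token_vecs, graph_vecs):
    """Single-head scaled dot-product cross-attention with a residual connection.

    Tokens are the queries; graph embeddings serve as both keys and values.
    Assumes tokens and graph embeddings share the same dimensionality.
    """
    scale = math.sqrt(len(graph_vecs[0]))
    fused, weights = [], []
    for token in token_vecs:
        attn = softmax([dot(token, g) / scale for g in graph_vecs])
        context = [
            sum(w * g[i] for w, g in zip(attn, graph_vecs))
            for i in range(len(token))
        ]
        fused.append([t + c for t, c in zip(token, context)])
        weights.append(attn)
    return fused, weights

# Made-up 3-dimensional embeddings, for illustration only.
tokens = [[1.0, 0.0, 0.0], [0.0, 1.0, 0.0]]
graph_entities = [[2.0, 0.0, 0.0], [0.0, 2.0, 0.0], [0.0, 0.0, 2.0]]

fused, weights = cross_attend(tokens, graph_entities)
```

Each token attends most strongly to the graph entity it aligns with, so the fused vector carries information from the matching entity while the residual term preserves the original token signal.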

So both querying and embeddings provide mechanisms for connecting structured knowledge graphs with the statistical capacities of LLMs. This facilitates interpretable, contextual responses informed by curated facts.

Complementary Approaches

Embedding retrieval and graph querying can be combined: first retrieve the relevant knowledge graph embeddings for a query, then query the full knowledge graph. This two-step approach focuses graph operations efficiently:

Step 1: Vector Embedding Retrieval

Given a natural language query, relevant knowledge graph embeddings can be quickly retrieved using approximate nearest neighbor search over indexed vectors.

For example, using a query like “Which books discuss artificial intelligence”, vector search would identify embeddings of the Book, Topic, and AI Concept entities.

This focuses the search without needing to scan the entire graph, improving latency. The embeddings supply useful query expansion signals for the next step.
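A rough sketch of Step 1 follows. For clarity it ranks every entity by exact cosine similarity; at scale, an approximate nearest neighbor index would replace this linear scan. The entity vectors and the query vector are invented for illustration, and a real system would produce them with an embedding model.

```python
import math

def cosine(u, v):
    """Cosine similarity between two equal-length vectors."""
    numerator = sum(a * b for a, b in zip(u, v))
    denominator = math.sqrt(sum(a * a for a in u)) * math.sqrt(sum(b * b for b in v))
    return numerator / denominator if denominator else 0.0

def top_k(query_vec, entity_vecs, k=3):
    """Exact nearest neighbors by cosine similarity (stand-in for an ANN index)."""
    ranked = sorted(
        entity_vecs.items(),
        key=lambda item: cosine(query_vec, item[1]),
        reverse=True,
    )
    return [name for name, _ in ranked[:k]]

# Hypothetical entity embeddings.
ENTITY_VECS = {
    "Book": [0.9, 0.1, 0.0],
    "Topic": [0.7, 0.6, 0.1],
    "AI Concept": [0.2, 0.9, 0.1],
    "Recipe": [0.0, 0.1, 0.9],
}

# Stand-in for the embedded question "Which books discuss artificial intelligence".
query_vec = [0.6, 0.7, 0.0]

matches = top_k(query_vec, ENTITY_VECS, k=3)
print(matches)
```

The returned entity names are the symbolic handles that seed the graph query in the next step.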

Step 2: Graph Query/Algorithm Execution

The selected entity embeddings suggest useful entry points and relationships for structured graphical queries and algorithms.

In our example, the matches for Book, Topic, and AI Concept cue exploration of BOOK-TOPIC and TOPIC-CONCEPT connections.

We can then execute a query like:

MATCH (b:Book)-[:DISCUSSES]->(t:Topic)-[:OF_TYPE]->(c:Concept) WHERE c.name = "Artificial Intelligence" RETURN b

This traverses books linked to AI topics to produce relevant results.
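To make the two-hop traversal concrete, here is a small in-memory Python equivalent of the pattern that query matches. The book titles, topics, and edge lists are hypothetical placeholders; a graph database would run the same traversal against its own storage and indexes.

```python
# Hypothetical edge lists mirroring the DISCUSSES and OF_TYPE relationships.
DISCUSSES = [
    ("Book A", "Machine Learning"),
    ("Book B", "Gardening"),
    ("Book C", "Neural Networks"),
]
OF_TYPE = [
    ("Machine Learning", "Artificial Intelligence"),
    ("Neural Networks", "Artificial Intelligence"),
    ("Gardening", "Horticulture"),
]

def books_about(concept_name, discusses=DISCUSSES, of_type=OF_TYPE):
    """Two-hop match: Book -DISCUSSES-> Topic -OF_TYPE-> Concept named `concept_name`."""
    topics = {topic for topic, concept in of_type if concept == concept_name}
    return sorted({book for book, topic in discusses if topic in topics})

result = books_about("Artificial Intelligence")
print(result)  # ['Book A', 'Book C']
```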

Overall, the high-level flow is:

- Use vector search to identify useful symbolic handles

- Execute graph algorithms seeded by these handles

This tight coupling connects the strength of similarity search with multi-hop reasoning.

The key benefit is focusing complex graph algorithms using fast initial embedding matches. This improves latency and relevance by avoiding exhaustive graph scans for each query. The combination enables scalable, efficient semantic search and reasoning over vast knowledge.

Parallel Querying of Multiple Graphs or the Same Graph

The key idea behind using multiple knowledge graphs in parallel is to provide the language model with a broader scope of structured knowledge to draw from during the reasoning process. The rationale is as follows:

Knowledge Breadth: No single knowledge graph can encapsulate all of humanity’s accrued knowledge across every domain. By querying multiple knowledge graphs in parallel, we maximize the factual information available for the language model to leverage.

Reasoning Diversity: Different knowledge graphs may model domains using different ontologies, rules, and constraints. This diversity of knowledge representation exposes the language model to a wider array of reasoning patterns to learn.

Efficiency: Querying knowledge graphs in parallel allows relevant information to be retrieved simultaneously. This reduces latency compared to sequential queries and allows more rapid gathering of contextual details to analyze.

Robustness: Having multiple knowledge sources provides redundancy in cases where a particular graph is unavailable or lacks information on a specific reasoning chain.

Transfer Learning: Being exposed to a multitude of reasoning approaches provides more transferable learning examples for the language model. This enhances few-shot adaptation abilities.

So in summary, orchestrating a chorus of knowledge graphs provides breadth and diversity of grounded knowledge to overcome the limitations of individual knowledge bases. Parallel retrieval improves efficiency and robustness. Transfer learning across diverse reasoning patterns also accelerates language model adaptation. This combination aims to scale structured knowledge injection towards more human-like, versatile understanding.
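The efficiency and robustness points can be illustrated with Python's standard `concurrent.futures` module. In this sketch, each graph is represented by a hypothetical callable that takes a question and returns a list of facts; the three client functions and their facts are made up, and one of them fails on purpose to show that a single unavailable graph does not sink the others. The same pattern applies when several different queries are sent to the same graph.

```python
from concurrent.futures import ThreadPoolExecutor, as_completed

def query_graphs_in_parallel(question, graph_clients, timeout=5.0):
    """Send one question to every graph concurrently and collect what succeeds.

    `graph_clients` maps a graph name to a callable that takes the question
    and returns a list of facts. Both are placeholders for real client code.
    """
    results, errors = {}, {}
    with ThreadPoolExecutor(max_workers=max(1, len(graph_clients))) as pool:
        futures = {
            pool.submit(client, question): name
            for name, client in graph_clients.items()
        }
        for future in as_completed(futures, timeout=timeout):
            name = futures[future]
            try:
                results[name] = future.result()
            except Exception as exc:  # record the failure, keep the other results
                errors[name] = exc
    return results, errors

# Hypothetical graph clients with made-up facts.
def medical_graph(question):
    return ["aspirin TREATS headache"]

def product_graph(question):
    return ["aspirin SOLD_AS tablet"]

def offline_graph(question):
    raise ConnectionError("graph unavailable")

results, errors = query_graphs_in_parallel(
    "What is aspirin used for?",
    {"medical": medical_graph, "product": product_graph, "offline": offline_graph},
)
print(sorted(results), sorted(errors))  # ['medical', 'product'] ['offline']
```

Threads suit this case because graph queries are typically I/O-bound network calls; the merged `results` can then be passed to the language model as context.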

Large Language Models as a fluid semantic glue between structured modules

While vector search and knowledge graphs provide structured symbolic representations, large language models (LLMs) like GPT-3 offer unstructured yet adaptive semantic knowledge. LLMs have demonstrated remarkable few-shot learning abilities, quickly adapting to new domains with only a handful of examples.

This makes LLMs well-suited to act as a fluid semantic glue between structured modules — ingesting the symbolic knowledge, interpreting instructions, handling edge cases through generalization, and producing contextual outputs. They leverage their vast parametric knowledge to rapidly integrate with external programs and data representations.

We can thus conceive of LLMs as a dynamic, ever-optimizing semantic layer. They ingest forms of structured knowledge and adapt on the fly based on new inputs and querying contexts. Rather than replacing symbolic approaches, LLMs amplify them through rapid binding and contextual response generation. This fluid integration saves the effort of manually handling all symbol grounding and edge cases explicitly.

Leveraging the innate capacities of LLMs for semantic generalization allows structured programs to focus on providing logical constraints and clean interfaces. The LLM then handles inconsistencies and gaps through adaptive few-shot learning. This symbiotic approach underscores architecting AI systems with distinct reasoning faculties suited for their inherent strengths.

Structured Knowledge as AI’s Bedrock

The hype around artificial intelligence risks pushing organizations towards short-sighted projects that promise quick returns. But meaningful progress requires patient cultivation of high-quality knowledge foundations. This takes the form of structured knowledge graphs that methodically encode human expertise as networked representations over time.

Curating clean abstractions of complex domains as interconnected entities, constraints and rules is no trivial investment. It demands deliberate ontology engineering, disciplined data governance and iterative enhancement. The incremental nature can frustrate business leaders accustomed to rapid software cycles.

However, structured knowledge is AI’s missing pillar — curbing unbridled statistical models through grounding signals. Knowledge graphs provide the scaffolding for injectable domain knowledge, while enabling transparent querying and analysis. Their composable nature also allows interoperating with diverse systems.

All this makes a compelling case for enterprise knowledge graphs as strategic assets. Much as moving data from flexible spreadsheets into databases trades freedom for reliability, the constraints of structure ultimately multiply capability. The entities and relationships within enterprise knowledge graphs become reliable touchpoints for driving everything from conversational assistants to analytics.

In the rush to the AI frontier, it is tempting to let unconstrained models loose on processes. But as with every past wave of automation, thoughtfully encoding human knowledge to elevate machine potential remains imperative. Managed well, maintaining this structured advantage compounds over time across applications, cementing market leadership. Knowledge powers better decisions — making enterprise knowledge graphs indispensable AI foundations.

Image generated by Dall-E-3 by the Author

List of Useful Links:

- Enhanced Large Language Models as Reasoning Engines

- Towards Data Science – Medium