Neural networks use non-linear activation functions so that they can model and fit complex functions. The most common activation function is the rectified linear unit (ReLU), but there are others such as sigmoid, tanh, and leaky ReLU. The best choice depends on the specific problem, so it is worth experimenting with several to find the one that works best for the model.

Explaining why neural networks can learn (nearly) anything and everything

Background

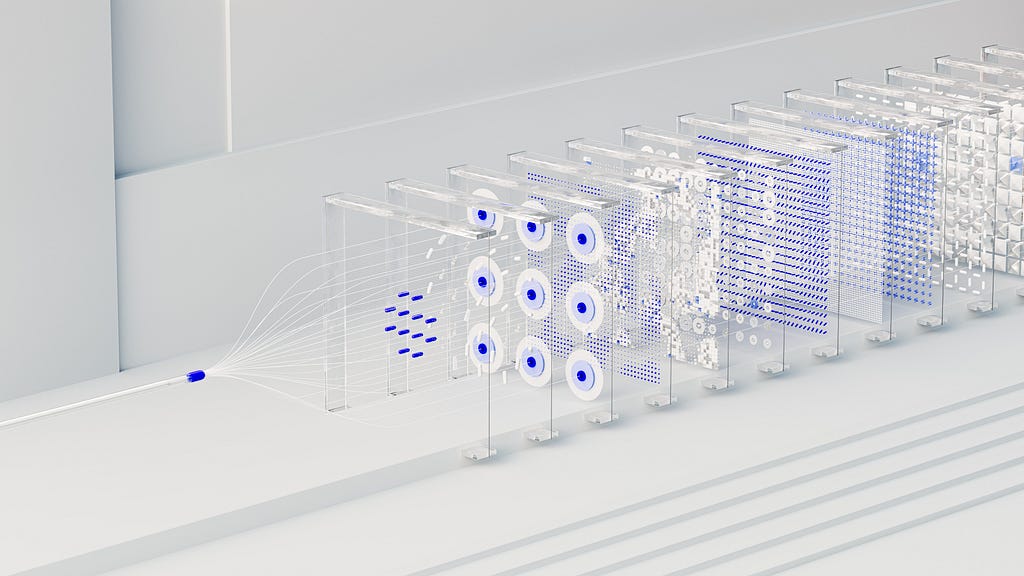

In my previous article, I introduced the multi-layer perceptron (MLP), which is a set of stacked, interconnected perceptrons. With a linear activation function, however, an MLP can only fit a linear classifier no matter how many layers it has, because a composition of linear functions is itself linear. So, what do we do about it?
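To make the point about stacked linear layers concrete, here is a minimal NumPy sketch. The layer sizes, the random weights, and the function names are illustrative assumptions, not taken from the previous article.

```python
import numpy as np

rng = np.random.default_rng(0)

# Two layers with a linear (identity) activation; the shapes are arbitrary.
W1, b1 = rng.normal(size=(4, 3)), rng.normal(size=4)
W2, b2 = rng.normal(size=(2, 4)), rng.normal(size=2)

def two_linear_layers(x):
    hidden = W1 @ x + b1       # layer 1, no non-linearity applied
    return W2 @ hidden + b2    # layer 2, no non-linearity applied

# The same mapping written as a single linear layer.
W_combined = W2 @ W1
b_combined = W2 @ b1 + b2

def one_linear_layer(x):
    return W_combined @ x + b_combined

x = rng.normal(size=3)
same_output = np.allclose(two_linear_layers(x), one_linear_layer(x))
print(same_output)
```

The two functions agree up to floating-point tolerance, which is why adding more linear layers adds no modelling power.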

Non-Linear Activation Functions!

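To enable neural networks to model and fit complex functions, we need non-linear activation functions. With them, the Universal Approximation Theorem applies: a feed-forward network with a suitable non-linear activation and enough hidden units can approximate any continuous function on a compact (closed and bounded) input domain to arbitrary accuracy. Note that the theorem is about what a network can represent; it does not guarantee that training will actually find that approximation.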
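As a rough illustration of the difference a non-linearity makes, the sketch below fits sin(x) with one hidden layer, once with a linear activation and once with tanh. To keep it short, it uses a shortcut that is an assumption of this example: the hidden weights are random and fixed, and only the output weights are fitted by least squares rather than by gradient descent. The number of hidden units (50) and the target function are also arbitrary choices. It illustrates the theorem; it does not prove it.

```python
import numpy as np

rng = np.random.default_rng(0)

# Target: sin(x) on [-pi, pi].
x = np.linspace(-np.pi, np.pi, 200).reshape(-1, 1)
y = np.sin(x).ravel()

def fit_and_score(features, targets):
    """Fit output weights by least squares and return the mean squared error."""
    design = np.hstack([features, np.ones((features.shape[0], 1))])  # add bias column
    weights, *_ = np.linalg.lstsq(design, targets, rcond=None)
    predictions = design @ weights
    return float(np.mean((predictions - targets) ** 2))

n_hidden = 50                       # arbitrary width for this sketch
W = rng.normal(size=(1, n_hidden))  # random, fixed hidden weights
b = rng.normal(size=n_hidden)       # random, fixed hidden biases

linear_hidden = x @ W + b           # linear activation
tanh_hidden = np.tanh(x @ W + b)    # non-linear activation

mse_linear = fit_and_score(linear_hidden, y)
mse_tanh = fit_and_score(tanh_hidden, y)
print(f"linear hidden layer MSE: {mse_linear:.4f}")
print(f"tanh hidden layer MSE:   {mse_tanh:.2e}")
```

With the linear activation, the model can do no better than a straight line through the sine wave, however many hidden units it has. The tanh version gets a much lower error from the same random weights.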

Why Do We Need Non-Linearity?

A function f is linear if it satisfies two conditions: additivity, f(x + y) = f(x) + f(y), and homogeneity, f(ax) = a f(x) for any scalar a. Linear functions are limited in their ability to predict complex phenomena. Many real-world problems are non-linear, so we need non-linear models to predict them. An activation function should also be differentiable, at least almost everywhere (ReLU, for example, has a kink at zero), so that the network can be trained with gradient descent.
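The linearity conditions can be spot-checked numerically. The sketch below tests additivity and homogeneity at a few hand-picked points for a linear function and for ReLU. The helper name `looks_linear` and the test values are assumptions made for this example, and passing a spot check at a few points is not a proof of linearity.

```python
import numpy as np

def linear(x):
    return 3.0 * x

def relu(x):
    return np.maximum(0.0, x)

def looks_linear(f, a=2.0, b=-5.0, scale=-1.5):
    """Spot-check additivity and homogeneity at a few points (not a proof)."""
    additive = np.isclose(f(a + b), f(a) + f(b))
    homogeneous = np.isclose(f(scale * a), scale * f(a))
    return bool(additive and homogeneous)

print("3x passes the check:  ", looks_linear(linear))
print("ReLU passes the check:", looks_linear(relu))
```

ReLU fails the additivity check here because relu(2 + (-5)) is 0, while relu(2) + relu(-5) is 2.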

Activation Functions

Let’s quickly run through some common non-linear activation functions:

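- Sigmoid: Squashes inputs to an output between 0 and 1. Not commonly used in the hidden layers of cutting-edge neural networks because of the vanishing gradient problem (its gradient approaches zero for large positive or negative inputs) and the relative cost of computing exponentials.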

- Tanh: Maps inputs to an output between -1 and 1. Zero-centred, but suffers from the same issues as sigmoid: the vanishing gradient problem and the relative cost of computing exponentials.

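- ReLU: The most popular activation function. Computationally efficient, and it mitigates the vanishing gradient problem because its gradient is exactly 1 for positive inputs. However, it has its own issues, such as dead neurons (units whose output and gradient are stuck at zero for negative inputs) and unbounded output values.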

- Leaky ReLU: An adjustment to ReLU with a small, non-zero gradient for negative inputs. Avoids the dead neuron problem and remains computationally efficient. Otherwise it shares ReLU's flaws, such as unbounded output values.

- Others: There are several other activation functions, such as Swish, GLU, and Softmax (typically used in the output layer for classification), each with its own advantages and disadvantages.
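The first four functions in the list above, along with their derivatives, can be sketched in NumPy as follows. The leaky ReLU slope `alpha=0.01` is a commonly used value but is an assumption here, as is the convention of setting the ReLU derivative at exactly zero to 0.

```python
import numpy as np

def sigmoid(x):
    return 1.0 / (1.0 + np.exp(-x))

def sigmoid_grad(x):
    s = sigmoid(x)
    return s * (1.0 - s)           # peaks at 0.25 when x = 0

def tanh(x):
    return np.tanh(x)

def tanh_grad(x):
    return 1.0 - np.tanh(x) ** 2   # peaks at 1.0 when x = 0

def relu(x):
    return np.maximum(0.0, x)

def relu_grad(x):
    # Not differentiable at 0; this sketch uses 0 there by convention.
    return (x > 0).astype(float)

def leaky_relu(x, alpha=0.01):
    return np.where(x > 0, x, alpha * x)

def leaky_relu_grad(x, alpha=0.01):
    return np.where(x > 0, 1.0, alpha)

x = np.array([-10.0, -1.0, 0.0, 1.0, 10.0])
for name, grad in [("sigmoid", sigmoid_grad), ("tanh", tanh_grad),
                   ("relu", relu_grad), ("leaky_relu", leaky_relu_grad)]:
    print(f"{name:>10} gradient:", np.round(grad(x), 4))
```

The printed gradients show the trade-offs described in the list. Sigmoid and tanh gradients shrink towards zero at the extremes, which is the vanishing gradient problem. The ReLU gradient is exactly zero for negative inputs, which is how neurons die. Leaky ReLU keeps a small gradient for negative inputs.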

Summary & Further Thoughts

A neural network needs a non-linear activation function to be capable of fitting complex functions. ReLU is the usual default, but it is worth experimenting with several functions to find the best one for your model.


List of Useful Links:

- Activation Functions & Non-Linearity: Neural Networks 101

- Towards Data Science – Medium
